Alchemy is a collection of independent library components that specifically relate to efficient low-level constructs used with embedded and network programming.

The latest version of Embedded Alchemy[^] can be found on GitHub. The most recent entries, as well as Alchemy topics to be posted soon:

- Steganography[^]

- Coming Soon: Alchemy: Data View

- Coming Soon: Quad (Copter) of the Damned

What Is a Software Architect?

There is so much confusion surrounding the purpose of a Software Architect and the value they provide and what they are supposed to do. So much so, that it seems the title is being used less and less by companies and replaced with a different title such as principal or staff. I assume this is due to the perception there must be a way to distinguish a level above senior, which is handed out after only about three years of experience.

Introduction

This has been a topic that I have wanted to discuss for quite some time. However, up until I read this question "Are there any Software Architects here?[^]" at CodeProject.com, I had been more compelled to focus on the more technical aspects of software development.

This is an excellent post that has multiple questions. If you were to take a quick peek at the question (it's ok, just come back), you would see about 80 responses in the discussion; with a wide variety of facts, opinions, and jokes related to software architecture and software development in general. Furthermore, many of the posts differ in opinion and even contradict each other.

I define the role of Software Architect in this entry. The definition is based upon my nearly two decades of experience developing software. Up to this point in my career, I have worked for companies ranging from small ones where I was the only software developer, all the way up to companies where I was one of hundreds of developers and about one thousand engineers of many engineering disciplines. Through the years I have performed just about every role found in the Software Development Lifecycle (SDLC), including that of software architect.

The Basics of the Role

The Software Architect is not another stepping stone above Senior Software Engineer or Software Engineer V. They do work closely with people in these other roles, and in smaller companies they may also function as a Software Engineer V. However, the role of Software Architect is an entirely different position, and its responsibilities are very different from the task of writing software.

The software architect is a technical leader for the development team, and they are also the figurehead of the product for which they are responsible. One reason they become a figurehead for the product is that the organization should make them responsible for the technical aspects of the product.

This makes them a good point of contact for information regarding the product, especially for personnel in the organization that may not be closely tied with the product. They may not always be the best person to answer a question, but they most certainly know who is.

As a leader, the architect is responsible for fostering unity and trust among their team for success. The technical aspect requires the architect to mentor and guide the team along the way toward a maintainable product with high quality. Moreover, they provide technical guidance and recommendations to the customer, which quite often is their own management team.

The Value

The architect adds value to the product and the organization by providing structure to both the design and implementation of the system, as well as the flow of information. If you are a fan of Fred Brooks and his book, The Mythical Man-Month, you will recall that he states the implementation of a system tends to mimic the communication structure of your organization. In my experience, this has always proven to be true.

Improved Quality and Stability

Design by committee can work. However, you often need a group of like-minded individuals that do not differ in opinion too drastically for this to succeed. In extreme circumstances, if there are wildly divergent opinions, the group must be willing to forgo their egos and accept the decisions that are made, even when a decision is the polar opposite of what they believe to be best. When all else fails, there is a final decision-maker at the top.

Thus enters the architect. They are, after all, responsible for the product that is ultimately designed and built. They will help the team make decisions that are aligned with the macro-scale goals of the organization, while the development team focuses on the design decisions that are most beneficial at the micro-scale of the feature or system for which they are responsible.

Communication

A good flow of information is often required for harmony and success among a group. This can lead to other desirable benefits such as a high level of morale and greater productivity. Effective communication is essential. Technical endeavors especially suffer when teams do not communicate effectively.

Development may move along steadily during the initial stages. However, if the integration of the components was not planned well in advance, progress will screech to a halt. Adjustments will need to be made for each component to be joined correctly. The architect can monitor the progress and direction for each developer or team, and provide guidance early on to correct course for the intended target.

The management team and key stake-holders communicate with the architect to remain in touch with the technical aspects of the system that require their attention, such as clarifications on requirements, or missing resources that are necessary to continue to make progress on the project.

One problem that I see talented engineers struggle with is adjusting the language and content of their message to match their audience. Generally, they tend to be too technical and provide too many extraneous details for the important message to be properly conveyed. A good architect helps bridge this communication gap. They are able to effectively use the best style of communication to convey the details and properly persuade their audience.

Roles

At this point I would like to digress and briefly discuss the different roles that are required or exist for a software development project, primarily because I often run across the question "What does an architect do that a developer doesn't?" This will also provide some context to refer to for the final section, in which I will list desirable qualities you should look for in a software architect.

I mentioned there are many roles in software development. Each role is responsible for performing certain tasks. The problem is, the responsibilities for each role differ based on the organization. The size, culture and industry can affect how responsibilities are organized.

A company with a small staff may assign multiple tasks to its employees. To be able to complete all of the work in a timely fashion, the different roles may be responsible for overlapping tasks. A large organization and development team, on the other hand, may have many people that focus on performing a single task. All the while, both companies may use the same titles to describe the roles of their employees.

It is important to know what your role and responsibilities are in any organization to be able to succeed at your own job, not to mention the success of the project and company as well. Therefore, I think it makes more sense to discuss the basic tasks that must be accomplished or are commonly included in a company's SDLC.

Tasks

Here is a non-comprehensive list of the technical tasks that are required to develop software.

- Analysis: Determine what you want, need, or should build.

- Requirements Gathering: Create a definition of what is to be built.

- Planning: Create the budget and the schedule. This task is not entirely technical, but the schedule and budget will be more accurate with technical guidance.

- Coordination: This is a bit abstract; however, it basically covers all communication and resource scheduling.

- Design: Create plans for the structure and operation of your product.

- Development: Build the product.

- Verification: Verify that it meets requirements, specifications and quality levels.

- Source Control Management: Organization of the resources required to build the product.

- Documentation: Useful or necessary information to the creators and users.

The only task listed above that is actually necessary is development. However, the process of development and the quality of the product suffer when the other tasks are omitted. The same effect can occur when the level of effort is reduced for any of the other tasks.

Example: Analysis

How difficult is it to build a jigsaw puzzle when you do not even know what the final image is? It is possible. However, it is much more difficult without knowing what you are aiming for.

Let's complicate the example further. How much more difficult does it become to assemble the puzzle when:

- Extraneous pieces from another puzzle are added to your pile of loose pieces

- The number of pieces in the puzzle is not known

- No corner pieces exist

- No edge pieces exist

Example: Coordination

When you run in a three-legged race, the team that is most coordinated will have a great advantage. As the amount of coordination increases, the team members spend less time fighting each other while trying to find a rhythm.

The same concept holds true when you have more than a single person performing tasks on a project. These are the types of things that should be targeted through communication and coordination:

- Agreement between the groups for how their systems will interoperate.

- Scheduling project tasks to minimize conflicts and dependencies. If each task is dependent linearly upon another, it will not make sense to have more than one person on the project at a time.

- Good communication throughout the project helps spot potential issues and address them before they are too difficult to tackle.

- If the schedule requires your software team to develop at the same time the hardware is being developed, some alternate and temporary solution will be required for the developers. Virtual machines or reference hardware may be acquired for software to use until the hardware is available. This is also an effective solution when there is a shortage of real hardware compared to the number of developers on your team.

Return to Roles

I will describe the set of tasks the software architect may perform for a small to moderately sized team. We will define this as about 20 people, of whom 8 or so are developers. The remainder of the team includes product managers, personnel management, marketing, sales, and quality control.

Analysis, Requirements, Planning and Documentation

The architect should be involved from the beginning. Once you know there may be a product to create, or a new engineering cycle on an existing project, bring the architect in. They can assist with the analysis, requirements gathering, and planning tasks. They can spend a fraction of their time acting as a consultant for these tasks. They can help advise and make sure the right questions get asked, the proper information is gathered, and only realistic promises are made. When it comes to creating documentation, the architect and development team should be accessible to answer questions for the technical writers.

Coordination

As this is a leadership role, one of the primary responsibilities of the architect will be to communicate, more so by listening than speaking. One of the most valuable things that an architect, or a software lead and manager for that matter, can do is to make sure their team understands what they are building. Then make sure each individual understands how their work will contribute to the final project.

I have seen projects go from extremely behind schedule, to finishing on time, after a new software lead was put into place. On one particular project, the developers had a vague idea what they were building, but on the surface it only seemed like another version of the five things they had built before. The new lead spent the first few days going over the project, its goals, what made it so cool, and verified each member of the team knew what they were responsible for. At that point, they moved forward with excitement and a new understanding. When some engineers finished their work, they jumped in and helped in other areas where possible. Do not underestimate the value of proper team coordination.

Design

Design is definitely something that the software architect will be involved with. However, I think that most people start to misunderstand the responsibility of the architect when the task of design is mentioned. The perception that I see the most often is that the architect designs the entire system; and this can cause some angst among developers that believe this is the case.

The cause of any angst may be because the developers believe their control and creative freedom will be limited; hopefully that is not the case. I have a bit more to say about this later in the essay.

The architect should take responsibility for the overall structure of the program. High-level behavior, control-flow and technologies to be utilized will all be determined by the architect. Other software developers are given the responsibility to design smaller sections of the program at a higher level of detail. Their design should be guided by the structure the architect puts in place.

Development

Any work performed up to this point is mostly focused on making this stage run smoothly. Hopefully all of the assumptions are correct, and the requirements are fixed. If not, the team must adapt. This is where the architect's responsibilities really become important. A foundation designed to be flexible with logically separated modules will have a better chance of adapting to any surprises that appear during development.

The architect is more likely to play a support role during this phase than to be a primary producer. That is because their time will be spent guiding and mentoring the developers, inspecting the results, and providing support where it will be most valuable. Moreover, they will keep the technical teams aligned with one another, and keep the business and management teams informed of progress.

Furthermore, any surprises or new discoveries that appear along the way can also be adequately managed by the architect. On the other hand, any events that require the architect's attention could cause further delays if nearly 100% of their time is scheduled for development work items.

Verification

This is one phase where I believe the architect should have as little involvement as possible. The reason being, you want an independently verified product. QA teams usually get the raw end of the deal when it comes to verifying and shipping software. If the programmers overrun on their schedule, QA is often tasked with finding creative ways of making the quality of the software better, faster.

If the architect is involved, the integrity of the verification process could be compromised. QA is a control gate to verify that things work correctly before they are released to the customer. The last thing you want is the architect and developers influencing QA's findings; making excuses for why something is not a bug, or at least why it should be classified as a level 4 superficial bug, rather than a level 2 major.

The architect should only coordinate with QA in order to get QA the resources they need to properly perform this task.

Source Control Management

There is so much that can be said about software SCM, but I am not going to. That is because it is a complicated task that deserves an entire essay of its own. The bottom line is the architect must be involved in the SCM for the product. It is crucial during development. It is damn near critical that SCM is handled according to a policy the architect defines (based upon the guidelines and strategies of the organization, of course).

Some products live a single existence, and slowly evolve with each release. Others spring into existence, and core components are reused to build other projects, after which the process is repeated ad infinitum. If there is no one managing the source code appropriately, or the way it is managed does not work for everyone, you may just get forked[^].

An Effective Software Architect

I was going to write a section titled Qualities. However, qualities are subjective, and you could probably guess the items on the list. It would look like every job ad for a software architect, and nearly the same for every software engineer. Just fill in the blank for the desired number of years of experience.

Because that list of qualities is so predictable and repeated everywhere, it would not add any more value to conveying the purpose and value of a software architect. Therefore, I thought it would be better to describe a few actions an Effective Software Architect would perform and the potential benefits that can be realized.

Focus on the Future

Jargon litters our lives. When job ads say "work in a fast-paced environment", that's a nice way of saying, "We want it right now!" No matter how quickly a piece of software can be developed, it still isn't quick enough. That is where many projects go awry; they are too focused on just today. Tomorrow always becomes another today. Next thing you know you're programming in a swamp of code.

The software architect should focus on the long-term direction of a product, and execute towards the short-term goals of the business. It is good to have goals; they help provide direction. Every step forward may not lead directly to the goal. However, if the goal is always kept in mind when design decisions are made, the goal will become more attainable.

Shortcuts will be taken, and technical debt will accrue on the product. There never seems to be enough time to go back and correct all of those blemishes. However, the design can be made to mitigate short-term decisions and still provide a stable path towards the goals of the product.

Management and Business Development need to support the Software Architect by providing them with goals and information regarding the product. This will help the architect to develop a vision for the project and help guide it towards long-term goals; despite all of the rash and short-term decisions that are usually made in software development.

Create Unity

This is a more focused description of the coordination task above. This action is focused on building and maintaining a level of trust between all of the development team, as well as the management and other stake-holders that are involved with the project. As I mentioned earlier, the software product tends to mimic the communication style of the organization. Therefore a more unified team is more likely to develop a program that is unified in structure and quality. There is more to unity than the final structure of the program.

Trust

You may think that it is odd that I started an essay discussing computer programming, and now I am on the topic of trust. For a team to work well together, they must trust each other. They must also trust the leaders that are directing them. The software architect is in a perfect position to help foster trust.

They are a technical leader, which means that they focus their attention on the technology and the way it is used. The role itself does not manage people. However, some organizations make the architect the manager of the developers as well. I believe this is a mistake because it throws away this opportunity to have a role to mediate situations of mistrust between development and management. This is especially true with respect to the technical aspect of the project.

The list below briefly describes how trust can be fostered with the other roles in the organization:

- Developers:

Listen to the development team. Incorporate their ideas into the design where they may fit, and be sure that they get credit for their ideas and work. When someone shares ideas, then implements them or sees someone else implement them and get the credit, they will tend to share less.

That's unfortunate, because the variety of experience and ideas that come from a collective group potentially provides more ideas to choose from when searching for a solution.

- Management:

A software architect needs the trust of management to succeed for two simple reasons:

- Funding:

The managers control the purse strings of the business, and they decide where money is invested. If the trust of management is lost, they may be less likely to invest in your product. Developers may be peeled off and moved to another project that is deemed a higher priority. In the worst case, they decide they no longer want to fund you or your position.

- Support:

The software architect is the steward of the product; they take all of the responsibility yet do not own it. In many cases, you will simply do the research, and present the facts, possibly providing a few options for management to choose from. However, for the topics that matter the most, persuasion of management to support your initiatives may be crucial. It is much easier to persuade someone when you are in good favor with them. Moreover, you may find yourself in a conflict with the development team, and without the support of management, you may lose that battle.

- Business Development and Marketing:

This group of interested parties becomes important to the architect if their software is a product that is owned and sold by the company. Having a good line of communication with the groups that drive the business's growth is extremely important. Information is the most valuable thing in our industry; a lack of information leaves us to speculate. It's better to be in line with the initiatives set by the company, if for no other reason than you may spot problems before too many resources have been invested. Change becomes much more difficult at that point.

One other potential benefit of creating a strong relationship with these groups is they can gain a better grasp of what your product does. This is important because it allows them to consider the existing capabilities of your product when they are looking to convert a business opportunity into a sale. You flow information upstream, they flow information and potentially more sales back downstream.

- Customers:

The customer is the reason we write the software in the first place. The best way to earn trust with the customer is to listen to them. Sometimes it may seem like they don't know what they're talking about. However, it could simply be because you two are using different terms to mean the same things.

Therefore, it is important to clarify what you understand the customer is telling you. A quick way to lose their trust, as well as their confidence in you, is to ignore the advice or requests of the customer. If you can't do something or decide that you are not going to do it, have that discussion with them rather than avoid them.

Let's compare trust to technology, or a software codebase. You need to maintain relationships, otherwise that bond of trust starts to weaken. I am sure there already is a fancy term to describe this like trustfulness debt. If not, there you go. Open and transparent communication will help develop trust. Returning and visiting with your contacts periodically is another way to help maintain that bond. When trust is lost, it is very difficult to earn back.

Utilize the Team's Abilities

In a way, this is the Software Architect showing trust in their development team. People expect the Software Architect to be the smartest person on the team, or the most knowledgeable. That does not have to be the case, and usually it depends on the question or topic that you are referring to. Architects are good at seeing the Big Picture. In most cases I would expect there to be a number of developers with finer skills when referring to a deep domain topic, such as device drivers.

Say the architect is responsible for the development of an operating system kernel. There are many generalizations and design decisions that can be made to create structure for the kernel. Then a bit more thought would go towards a driver model and its implementation to simplify that task. When you reach the implementation for each particular type of driver, there are different nuances between file-system drivers, network drivers, and port I/O drivers. At some point, you will reach the limit of the architect's knowledge and expertise, and you will reach the realm of the domain expert.

Some engineers like to learn every minute detail about a topic, no matter how abstruse; that is the domain expert. Typically they do not do well in the role of architect because they tend to get caught up in the low-level details when it is not necessary; think Depth-first Search. Nonetheless, this is the perfect candidate for the architect to trust and depend on when knowledge and advice are required in the expert's domain of specialty.

Summary

When the word architect is used, it is usually associated with design, and every programmer already does design (whether it is formal or on-the-fly is another discussion). I think it would help clarify the role if more effort were made to emphasize structure when discussing the software architect, with regard to both the team and the software product. This may help disambiguate the purpose and value a software architect provides and distinguish it from the next step up after senior engineer.

There is much more to this role than dictating how the program should be built, which, if you read the entire article, you now know isn't even one of the architect's responsibilities.

This post will focus on the concept of SFINAE, Substitution Failure Is Not An Error. This is a core concept that is one of the reasons templates are even possible. This concept is related exclusively to the processing of templates. It is referred to as SFINAE by the community, and this entry focuses on the two important aspects of SFINAE:

- Why it is crucial to the flexibility of C++ templates and the programming of generics

- How you can use it to your advantage

What the hell is it?

This is a term exclusively used in C++, which specifies that an invalid template parameter substitution itself is not an error. This is a situation specifically related to overload resolution of the considered candidates. Let me restate this without the official language jargon.

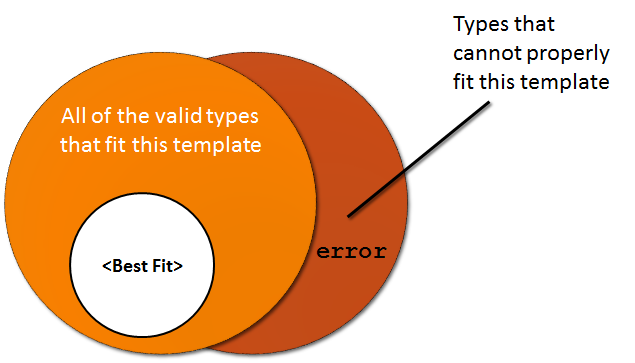

If there exists a collection of potential candidates for template substitution, even if a candidate is not a valid substitution, this will not trigger an error. It is simply eliminated from the list of potential candidates. However, if there are no candidates that can successfully meet the substitution criteria, then an error will be triggered.

SFINAE < Example >

I gave a vague description that somewhat resembled a statement in set theory. I also added a Venn diagram to hopefully add more clarity. However, there is nothing like seeing a demonstration in action to illustrate a vague concept. This concept is valid for class templates as well.

Below I have created a struct, Field, that contains a nested typedef, along with two overloaded function templates. The templates share the same name, Scalar, but have completely different signatures. This example demonstrates the reason for the original rule:

```cpp
struct Field
{
  typedef double type;
};

template <typename T>
typename T::type Scalar(typename T::type value)
{
  return value * 4;
}

template <typename T>
T Scalar(T value)
{
  return value * 3;
}
```

The first case below is the simpler example. It only requires, and accepts, a type whose value can be used directly from the type passed in, such as the intrinsic types, or types that provide a value conversion operator. More details have been annotated above each call.

```cpp
#include <iostream>

using std::cout;

int main()
{
  // The version that requires a sub-field called "type"
  // will be excluded as a possibility for this version.
  cout << "Field type: " << Scalar<int>(5) << "\n";

  // In this case, the version that contains that
  // sub-field is the only valid type.
  cout << "Field type: " << Scalar<Field>(5) << "\n";
}
```

Output:

    Field type: 15
    Field type: 20

Curiosity

SFINAE was added to the language to make templates usable for fundamental purposes. It was envisioned that a string class may want to overload the operator+ function or something similar for an unordered collection object. However, it did not take long for the programmers to discover some hidden powers.

The power that was unlocked by SFINAE was the ability to programmatically determine the type of an object, and force a particular overload based on the type in use. This means that a template implementation is capable of querying for information about its type and qualifiers at compile-time. This is similar to the feature that many languages have called reflection. However, reflection occurs at run-time (and also incurs a run-time penalty).
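As a rough illustration of querying a type at compile-time, here is a minimal sketch that assumes C++11 and the standard <type_traits> header. The name type_summary is hypothetical and is not part of Alchemy; it only gathers a few standard traits so the answers can be checked with static_assert before the program ever runs.

```cpp
#include <type_traits>

// Hypothetical helper: describes T using only compile-time queries.
template <typename T>
struct type_summary
{
  static const bool is_integer  = std::is_integral<T>::value;
  static const bool is_pointer  = std::is_pointer<T>::value;
  static const bool is_constant = std::is_const<T>::value;
};

// Each check is resolved by the compiler; nothing runs at run-time.
static_assert(type_summary<int>::is_integer,      "int is an integral type");
static_assert(!type_summary<double>::is_integer,  "double is not integral");
static_assert(type_summary<char*>::is_pointer,    "char* is a pointer");
static_assert(type_summary<const int>::is_constant, "const int is const");
```

Every answer is a compile-time constant, so a wrong assumption about T stops the build instead of surfacing at run-time.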

Rumination

I am not aware of a name for this static form of reflection. If there is one, could someone comment and let me know what it is called? If it hasn't been named, I think it should be something similar to reflection, but it is still a separate concept.

When I think of static, I think of "fixed-in-place" or not moving. Meditation would fit quite well, it's just not that cool. Very similar to that is ponder. I thought about using introspection, but that is just a more pretentious form of reflection.

Then it hit me. Rumination! That would be perfect. It's a verb that means to meditate or muse; ponder. There is also a secondary meaning for ruminate: To chew the cud; much like the compiler does. Regardless, it's always fun to create new buzzwords. Remember, Rumination.

Innovative Uses

I make heavy use of SFINAE in my implementation of Network Alchemy, mostly through the features provided by the <type_traits> header. The construct std::enable_if is built upon the concept of SFINAE. I am ashamed to admit that I have not been able to understand and successfully apply std::enable_if yet. I have come across many situations where it seemed like it would be an elegant fit. When I figure it out, I will be sure to distill what I learn, and explain it so you can understand it too. (I understand enable_if[^] now.)
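For reference, here is a minimal sketch of how std::enable_if leans on SFINAE. It assumes C++11; the function name Scale is hypothetical and only mirrors the Scalar example from earlier. std::enable_if<condition, T>::type exists only when the condition is true, so each overload removes itself from the candidate list when its condition fails.

```cpp
#include <type_traits>

// Candidate for integral types only. For any other T, enable_if has no
// nested "type", substitution fails, and this overload is discarded.
template <typename T>
constexpr typename std::enable_if<std::is_integral<T>::value, T>::type
Scale(T value)
{
  return value * 3;
}

// Candidate for floating-point types only.
template <typename T>
constexpr typename std::enable_if<std::is_floating_point<T>::value, T>::type
Scale(T value)
{
  return value * 4;
}
```

With these two declarations, Scale(5) selects the integral overload and Scale(2.5) selects the floating-point one. A call such as Scale("text") leaves no viable candidate and fails to compile.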

Useful applications of SFINAE

To read a book, an article or blog entry and find something genuinely new and useful that I have an immediate need for is fantastic. I find it extremely irritating when there is not enough effort put into the examples that usually accompany the brief explanation. This makes the information in the article nearly useless. This is even more irritating if the examples are less complicated than what I could create with my limited understanding of the topic to begin with.

A situation is extremely frustrating when I believe that I have found a good solution, yet I cannot articulate the idea to apply it. So unless you get extremely frustrated by useful examples applied to real-world problems, I hope these next few sections excite you.

Ruminate Idiom

We will create a meta-function that can make a decision based on the type of T. To start we will need to introduce the basis on which the idiom is built. A scenario is required where there are a set of choices, and only one of the choices is valid. Let's start with the sample, and continue to build until we reach a solution.

We will need two different types that have different sizes:

C++

template < typename T >
struct yes_t
{ char buffer[2]; };

typedef char no_t;

We will also need two components that are common in meta-programming:

- the sizeof operator

- static-member constants

We define a meta-function template that will set up a test between the two types, using the sizeof operator to determine which type was selected. This will give us the ability to make a binary decision in the meta-function.

C++

template < typename T >
struct conditional
{
private:
  template < typename U >
  static yes_t < /* conditional on U */ > selector(U);

  static no_t selector(...);

  static T* this_t();

public:
  static const bool value =
    sizeof(selector(*this_t())) != sizeof(no_t);
};

We started with static declarations of the two types that I defined earlier. However, there is no defined conditional test for the yes_t template, yet. It is also important to understand that the template parameter name must be something different than the name used in the template's outer parameter. Otherwise the template parameter for the object would be used and SFINAE would not apply.

The lowest-ranked parameter in the order of precedence for C++ overload resolution is the ellipsis (...). At first glance this looks odd. However, think of it as the catch-all type. If the conditional statement for yes_t produces an invalid type definition, that overload is discarded and the no_t declaration of the selector function will be used.
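
The ranking of the ellipsis can be seen in isolation with a small sketch. The names pick, int_selected and pointer_falls_through are hypothetical, and yes_t is a plain struct here rather than the template used in the article, to keep the example short.

```cpp
// Hypothetical names, for illustration only.
typedef char no_t;                  // sizeof(no_t) == 1
struct yes_t { char buffer[2]; };   // sizeof(yes_t) == 2

yes_t pick(int);   // viable only when the argument converts to int
no_t  pick(...);   // viable for any argument, but always ranked last

// Neither function is ever defined. sizeof only inspects the return type
// of the overload that would have been selected.
static const bool int_selected =
  sizeof(pick(42)) == sizeof(yes_t);

// A string literal cannot convert to int, so only the catch-all remains.
static const bool pointer_falls_through =
  sizeof(pick("text")) == sizeof(no_t);
```

Both constants evaluate to true at compile time, without a single function body being written.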

It is important to note that it is not necessary to define the function implementations for selector because they will never actually be executed; the sizeof operator only inspects their declared types. Therefore, the definitions are not required by the linker. We also use an arbitrary function, this_t, that returns a T* which we dereference, rather than an expression that invokes T(), because T may not have a default constructor.

It is also possible to declare the selector function to take a pointer to T. However, a pointer type will allow void to become valid as a void*. Also, any type of reference will trigger an error because pointers to references are illegal. This is one area where there is no single best way to declare the types. You may need to add other specializations to cover any corner cases. Keep these alternatives in mind if you receive compiler warnings with the form I presented above.

More Detail

You were just presented a few facts, a bit of code, and another random mix of facts. Let's tie all of this information together to help you understand how it works.

- SFINAE will not allow a template substitution error to halt the compiling process

- Inside of the meta-function we have created two overloads of selector that accept T

- We have selected type definitions that will help us determine if a condition is true based upon the type. (An example condition will be shown next).

- We also added a catch-all declaration for the types that do not meet the conditional criteria (...)

- The stub function this_t() has been created to be used in a sizeof expression. The sizeof expression compares the return type of the selected overload to the no_t type to determine the result of our conditional.

The next section contains a concrete example conditional that is based on the type U.

is_class

Months ago I wrote about the standard header file, Type Traits[^]. This file contains some of the most useful templates for creating templates that correctly support a wide range of types.

The classification of a type can be determined, such as differentiating between a Plain-old data (POD) struct and a struct with classes. You can also determine if a type is const or volatile, or if it's an lvalue, pointer or reference. Let me demonstrate how to tell if a type is a class type or not. Class types are the compound data structures, class, struct, and union.

What we need in the conditional template parameter is something that can differentiate these types from any other type. These types are the only types for which it is legal to form a pointer-to-member type with the ::* declarator, such as int U::*. For any other type U, the declaration is invalid, the substitution fails, and SFINAE discards that overload.

Here is the definition of this template meta-function:

C++

template < typename T >
struct is_class
{
private:
  template < typename U >
  static yes_t < int U::* > selector(U);

  static no_t selector(...);

  static T* this_t();

public:
  static const bool value =
    sizeof(selector(*this_t())) != sizeof(no_t);
};
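
To see the meta-function at work, here is a self-contained sketch that repeats the definitions from this section and applies them to a few sample types. Point, Number and Color are names I made up for the illustration. Note that this version reports true for a union, whereas std::is_class from <type_traits> does not.

```cpp
template < typename T >
struct yes_t
{ char buffer[2]; };

typedef char no_t;

template < typename T >
struct is_class
{
private:
  // Only well-formed when U is a class, struct or union.
  template < typename U >
  static yes_t < int U::* > selector(U);

  // Catch-all for every other type.
  static no_t selector(...);

  static T* this_t();

public:
  static const bool value =
    sizeof(selector(*this_t())) != sizeof(no_t);
};

// Hypothetical sample types, for illustration only.
struct Point  { int x; int y; };
union  Number { int i; float f; };
enum   Color  { Red, Green };

// Compile-time results of the test for each sample type.
static const bool point_is_class   = is_class<Point>::value;
static const bool number_is_class  = is_class<Number>::value;
static const bool int_is_class     = is_class<int>::value;
static const bool color_is_class   = is_class<Color>::value;
static const bool pointer_is_class = is_class<double*>::value;
```

The first two constants are true and the remaining three are false, because int, an enum and a pointer cannot appear to the left of ::* in a pointer-to-member declaration.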

Separate classes based on member declarations

Sometimes it becomes beneficial to determine if an object supports a certain function before you attempt to use that feature. An example would be the find() member function that is part of the associative containers in the C++ Standard Library. This matters because it is recommended that you prefer the member function of a container over the generic algorithm in the library.

Let's first present an example, then I'll demonstrate how you can take advantage of it and apply this call:

C++

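template < typename T >
struct has_find
{
private:
  // Identify by naming the result type of a call to U::find().
  // If U has no key_type or no matching find(), substitution fails
  // and this overload is discarded.
  // (Uses decltype and std::declval from <utility>, so C++11 is assumed.)
  template < typename U >
  static yes_t < decltype(std::declval<U&>().find(
                   std::declval<const typename U::key_type&>())) >
  selector(U);

  static no_t selector(...);

  static T* this_t();

public:
  static const bool value =
    sizeof(selector(*this_t())) != sizeof(no_t);
};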
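
Here is one way to make the detection concrete and check it against two standard containers. This is a sketch under assumptions: it relies on decltype and std::declval from C++11, it probes for a find() that accepts the container's key_type, and the aliases SetInt and VecInt are assumed to name std::set<int> and std::vector<int>. It may not match the exact expression used in the original implementation.

```cpp
#include <set>
#include <utility>
#include <vector>

template < typename T >
struct yes_t
{ char buffer[2]; };

typedef char no_t;

template < typename T >
struct has_find
{
private:
  // Well-formed only if U has a key_type and a find() that accepts it.
  template < typename U >
  static yes_t < decltype(std::declval<U&>().find(
                   std::declval<const typename U::key_type&>())) >
  selector(U);

  // Catch-all for containers without a suitable find().
  static no_t selector(...);

  static T* this_t();

public:
  static const bool value =
    sizeof(selector(*this_t())) != sizeof(no_t);
};

// Assumed aliases, for illustration only.
typedef std::set<int>    SetInt;
typedef std::vector<int> VecInt;

static const bool set_has_find = has_find<SetInt>::value;
static const bool vec_has_find = has_find<VecInt>::value;
```

std::set provides a member find(), so set_has_find is true. std::vector has neither key_type nor find(), so vec_has_find is false.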

Applying the meta-function

The call to std::find() is very generic; however, it can be inconvenient. Also, imagine we want to build a generic function ourselves that will allow any type of container to be used. We could encapsulate the std::find() call itself in a more convenient usage. Then we can build a single version of the generic function, as opposed to creating specializations of the implementation.

This type of approach will allow us to encapsulate the pain-point in our function that would otherwise require a specialization of the implementation for each type our generic function is intended to support.

We will need to create one instance of our meta-function for each branch that exists in the final chain of calls. However, once this is done, the same meta-function can be combined in any number of generic ways to build bigger and more complex expressions.

C++

namespace CotD
{
// Primary template: selected when the container has its own find().
template < typename C, bool hasfind >
struct call_find
{
  bool operator()(C& container,
                  const typename C::value_type& value,
                  typename C::iterator& result)
  {
    // Trace which branch was selected (requires <iostream>).
    std::cout << "T::find() called" << std::endl;

    // Assumes value_type is also the key type, as it is for std::set.
    result = container.find(value);
    return result != container.end();
  }
};

} // namespace CotD

C++

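namespace CotD
{
// Specialization: selected when the container has no find() of its own.
template < typename C >
struct call_find <C, false>
{
  bool operator()(C& container,
                  const typename C::value_type& value,
                  typename C::iterator& result)
  {
    // Trace which branch was selected (requires <iostream>).
    std::cout << "std::find() called" << std::endl;

    // Fall back to the generic algorithm (requires <algorithm>).
    result = std::find( container.begin(),
                        container.end(),
                        value);

    return result != container.end();
  }
};

} // namespace CotD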

This is a very simple function. In my experience, the small, generic, and cohesive functions and objects are the ones that are most likely to be reused. With this function, we can now use it in a more specific context, which should still remain generic for any type of std container:

C++

namespace CotD
{
template < typename T >
void generic_call(T& container)
{
  typename T::value_type target = typename T::value_type();
  // ... Code that determines the value ...

  typename T::iterator item;
  // Create the function object, then invoke it.
  if (!CotD::call_find<T, has_find<T>::value>()(container, target, item))
  {
    return;
  }

  // ... item is valid, continue logic ...
}

} // namespace CotD

C++

// Assumed container aliases (not shown in the original listing).
typedef std::set<int>    SetInt;
typedef std::vector<int> VecInt;

int main()
{
  using CotD::call_find;

  bool res = has_find<SetInt>::value;

  call_find<SetInt, has_find<SetInt>::value> set_call;

  SetInt::iterator set_iter;
  SetInt s;
  set_call(s, 0, set_iter);

  call_find<VecInt, has_find<VecInt>::value> vec_call;
  VecInt::iterator vec_iter;
  VecInt v;
  vec_call(v, 0, vec_iter);

  return 0;
}

Output:

T::find() called
std::find() called

We made it possible to create a more complex generic function with the creation of the small helper, CotD::call_find. The resulting CotD::generic_call is agnostic to the type of container that is passed to it.

This allowed us to avoid the duplication of code for the larger function, CotD::generic_call, due to template specializations.

There is also a great chance that the helper template will be optimized by the compiler to eliminate the unreachable branch due to the types being pre-determined and fixed when the template is instantiated.

Summary

Substitution Failure Is Not An Error (SFINAE) is a subtle rule that was added to C++ to make overload resolution possible with templates, just as it is with other basic functions and classes. However, this subtle compiler feature opens the doors of possibility for many applications in generic C++ programming.

Are You Mocking Me?!

general, adaptability, portability, CodeProject, maintainability

It seems that every developer has their own way of doing things. I know I have my own methodologies, and some probably are not the simplest or the best (that I am aware of). I have continued to refine my design, development, test and software support skills through my career. I recognize that everyone has their own experiences, so I usually do not question or try to change someone else's process. I will attempt to suggest if I think it might help. However, sometimes I just have to ask, "are you sure you know what you are doing?" For this entry I want to focus on unit testing, specifically with Mock Objects.

What do you mean by "Mock"?

I want to seriously focus on a type of unit-test technique, one that is so misused, that I would even go so far as to call it an anti-technique. This is the use of Mock Objects.

Mock objects and functions can fill in an important gap when a unit-test is attempting to eliminate dependencies or to avoid the use of an expensive resource. Many mock libraries make it pretty damn simple to mock the behavior of your code's dependencies. This is especially true when the library is integrated into your development environment and will generate much of the code for you.

There are other approaches that exist, which I believe are a better first choice. Whatever method you ultimately choose should depend on the goal of your testing. I would like to ruminate on some of my experiences with Mock Objects as well as provide some alternate possibilities for clipping dependencies for unit tests.

Unit Testing

Mock Objects are only a small element of the larger topic of unit-testing. Therefore, I think it's prudent to provide a brief overview of unit testing to try to set the context of this discussion, as well as align our understanding. A unit test is a small, isolated test written by the programmer of a code unit, which I will universally refer to as a system.

You can find a detailed explanation of what a unit test is and how it provides value in this entry: The Purpose of a Unit Test[^].

It is very important to try to isolate your System Under Test (SUT) from as many dependencies as possible. This will help you differentiate between problems that are caused by your code and those caused by its dependencies. In the book xUnit Test Patterns, Gerard Meszaros introduces the concept of a Test Double used to stand in for these dependencies. I have seen many different names used to describe test doubles, such as dummy, fake, mock, and stub. I think that it is important to clarify some vocabulary before we continue.

The best definitions that I have found and use today come from this excellent blog entry by Martin Fowler, Mocks Aren't Stubs. Martin defines a set of terms that I will use to differentiate the individual types of test doubles.

- Dummy objects are passed around but never actually used. Usually they are just used to fill parameter lists.

- Fake objects actually have working implementations, but usually take some shortcut which makes them not suitable for production (an in memory database is a good example).

- Stubs provide canned answers to calls made during the test, usually not responding at all to anything outside what's programmed in for the test. Stubs may also record information about calls, such as an email gateway stub that remembers the messages it 'sent', or maybe only how many messages it 'sent'.

- Mocks are what we are talking about here: objects pre-programmed with expectations which form a specification of the calls they are expected to receive.

Martin's blog entry above is also an excellent source for gaining a deeper understanding of the difference between the two general types of testing that I will talk about next.

So a Mock is just a different type of test double?!

No, not really.

Besides replacing a dependency, mock objects add assertions to their implementation. This allows a test to report if a function was called, if a set of functions were called in order, or even if a function was called that should not have been called. Compare this to simple fake objects, and now fake objects look like cheap knock-offs (as opposed to high-end knock-offs). The result becomes a form of behavioral verification with the addition of these assertions.

Mock objects can be a very valuable tool for use with software unit tests. Many unit test frameworks now also include or support some form of mocking framework as well. These frameworks simplify the creation of mock objects for your unit tests. A few frameworks that I am aware of are EasyMock and jMock for Java, NMock with .NET, and GoogleMock if you use GoogleTest to verify C++ code.

Behavior verification

Mock objects verify the behavior of software. For a particular test you may expect your object to be called twice and you can specify the values that are returned for each call. Expectations can be set within the description of your mock declaration, and if those expectations are violated, the mock will trigger an error with the framework. The expected behavior is specified directly in the definition of the mock object. This in turn will most likely dictate how the actual system must be implemented in order to pass the test. Here is a simple example in which a member function of an object registers the object for a series of callbacks:

Code

// No, I'm not a Singleton.
// I'm an Emporium, so quit asking.
class CallbackEmporium
{
  // Provides functions to register callbacks
};

CallbackEmporium& TheEmporium()
{ ... }

// Target SUT
void Object::Register()
{
  TheEmporium().SetCallbackTypeA( Object::StaticCallA, this );
  TheEmporium().SetCallbackTypeB( Object::StaticCallB, this );
}

Clearly the only way to validate this function is through verifying its behavior. There is no return value, so that cannot be verified. Therefore, a mock object will be required to verify the register function. With the syntax of a Mock framework, this will be a snap, because we add the assertion right in the declaration of the Mock for the test, and that's it!

Data verification

Ultimately, what matters about the function Object::Register() is whether the two proper callbacks were registered with TheEmporium. So if you nodded your head in agreement in the previous section when I said "Clearly the only way...", I suggest you stop after you read sentences that read like that and challenge the author's statement. Most certainly there are other ways to verify, and here is one of them.

1 point if you paused after that trick sentence, 2 points if you are reserving judgment for evidence to back up my statement.

It would still be best if we have a stand-in object to replace TheEmporium. However, if there is some way for us to verify after the SUT call that the expected callback functions were registered in the correct parameters of TheEmporium, then we do not need a mock object. We have verified the final data of the system was as expected, not that the program executed to a prescribed behavior.
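
Here is a minimal sketch of what such a stand-in could look like. The interface of TheEmporium is never shown in this entry, so everything about FakeEmporium, the Callback signature and the test function RegisterStoresBothCallbacks is an assumption made for illustration.

```cpp
#include <cassert>

// Assumed callback signature; the real Emporium may differ.
typedef void (*Callback)(void* context);

// Hypothetical fake: it only remembers the last value stored in each slot.
class FakeEmporium
{
public:
  FakeEmporium()
    : callbackA(nullptr), contextA(nullptr)
    , callbackB(nullptr), contextB(nullptr)
  { }

  void SetCallbackTypeA(Callback fn, void* ctx) { callbackA = fn; contextA = ctx; }
  void SetCallbackTypeB(Callback fn, void* ctx) { callbackB = fn; contextB = ctx; }

  Callback callbackA;
  void*    contextA;
  Callback callbackB;
  void*    contextB;
};

FakeEmporium& TheEmporium()
{
  static FakeEmporium instance;
  return instance;
}

// Reduced version of the SUT from the listing above.
class Object
{
public:
  static void StaticCallA(void*) { }
  static void StaticCallB(void*) { }

  void Register()
  {
    TheEmporium().SetCallbackTypeA( Object::StaticCallA, this );
    TheEmporium().SetCallbackTypeB( Object::StaticCallB, this );
  }
};

// Data verification: inspect the final state, not the sequence of calls.
bool RegisterStoresBothCallbacks()
{
  Object sut;
  sut.Register();

  FakeEmporium& emporium = TheEmporium();
  return emporium.callbackA == &Object::StaticCallA
      && emporium.contextA  == &sut
      && emporium.callbackB == &Object::StaticCallB
      && emporium.contextB  == &sut;
}
```

The test never asks how many calls were made or in which order. It only asks whether the right values ended up in the right slots.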

Why does it matter?

Tight Coupling between the test and the implementation.

Suppose you wrote your mock object to verify the code in this way:

Code

// This is a mocked yet serious syntax
// for a mock-object statement to verify Register().
Mocker.call( SetCallbackTypeA()
               .with(Object::StaticCallA)).and()
      .call( SetCallbackTypeB()
               .with(Object::StaticCallB));

That will test the function as it is currently implemented. However, if Object::Register were implemented like any one of these, the test may report a failure, even though the intended and correct results were achieved by the SUT.

Code

void Object::Register()
{
  TheEmporium().SetCallbackTypeB( Object::StaticCallB, this );
  TheEmporium().SetCallbackTypeA( Object::StaticCallA, this );
}

Code

// Too many calls to one of the functions
void Object::Register()
{
  TheEmporium().SetCallbackTypeA( Object::StaticCallA, this );
  TheEmporium().SetCallbackTypeB( Object::StaticCallB, this );
  TheEmporium().SetCallbackTypeB( Object::StaticCallB, this );
}

Code

// Call each function twice:
// Assign incorrect values first.
// Then call a second time with the correct values.
void Object::Register()
{
  TheEmporium().SetCallbackTypeA( Object::StaticCallB, this );
  TheEmporium().SetCallbackTypeA( Object::StaticCallA, this );
  TheEmporium().SetCallbackTypeB( Object::StaticCallA, this );
  TheEmporium().SetCallbackTypeB( Object::StaticCallB, this );
}

Code

// Assign incorrect values first.
// Then call a second time with the correct values.
void Object::Register()
{
  TheEmporium().SetCallbackTypeA( Object::StaticCallB, this );
  TheEmporium().SetCallbackTypeB( Object::StaticCallA, this );
  TheEmporium().SetCallbackTypeA( Object::StaticCallA, this );
  TheEmporium().SetCallbackTypeB( Object::StaticCallB, this );
}

All four of these implementations would have continued to remain valid for the data validation form of the test, because the correct results were assigned to the proper values at the return of the SUT.
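
The difference between the two styles of check can be sketched without any mock framework. In this simplified illustration the callbacks are represented by their names as strings, and RecordingEmporium, RegisterReversed, RegisterWithCorrection and the two check functions are all hypothetical. The strict call check is my stand-in for what an order-sensitive mock expectation would enforce.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in that keeps both a call log (what a strict mock
// would inspect) and the final slot values (what a data check inspects).
struct RecordingEmporium
{
  std::vector<std::string> calls;
  std::string slotA;
  std::string slotB;

  void SetCallbackTypeA(const std::string& fn) { calls.push_back("A:" + fn); slotA = fn; }
  void SetCallbackTypeB(const std::string& fn) { calls.push_back("B:" + fn); slotB = fn; }
};

// Variant that registers B first, then A.
void RegisterReversed(RecordingEmporium& emporium)
{
  emporium.SetCallbackTypeB("StaticCallB");
  emporium.SetCallbackTypeA("StaticCallA");
}

// Variant that assigns incorrect values first, then corrects them.
void RegisterWithCorrection(RecordingEmporium& emporium)
{
  emporium.SetCallbackTypeA("StaticCallB");
  emporium.SetCallbackTypeA("StaticCallA");
  emporium.SetCallbackTypeB("StaticCallA");
  emporium.SetCallbackTypeB("StaticCallB");
}

// Behavior check: exactly one call to A followed by one call to B.
bool MatchesPrescribedCalls(const RecordingEmporium& emporium)
{
  return emporium.calls.size() == 2
      && emporium.calls[0] == "A:StaticCallA"
      && emporium.calls[1] == "B:StaticCallB";
}

// Data check: only the values left in the slots matter.
bool HasExpectedFinalState(const RecordingEmporium& emporium)
{
  return emporium.slotA == "StaticCallA"
      && emporium.slotB == "StaticCallB";
}
```

Both variants fail MatchesPrescribedCalls and pass HasExpectedFinalState, which is the coupling problem in miniature: the behavior check is tied to one particular implementation, while the data check is tied only to the result.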

When the Mock Object Patronizes You

Irony. Don't you love it?!

Mock objects can get you very far successfully. In fact, you may get towards the very end of your development phase and you have unit tests around every object and function. You are wrapping up your component integration phase. Things are not working as expected. These are some of the symptoms that I have personally observed:

- The compiler complains about a missing definition

- The linker (for the C and C++ folks) complains that undefined symbols have been referenced

- This is a network application, everything compiles and links, the program loads and doesn't crash. It doesn't do anything else either. You connect a debugger, it is not sending traffic.

I have seen developers become so enthusiastic with how simple mock objects made developing tests for them, that they virtually created an entire mock implementation. When it compiled and was executed, critical core components had a minimal or empty implementation. All of the critical logic was complete and verified. However, the glue that binds the application together, the utility classes, had not been implemented. They remained stubs.

Summary

There are many ways to solve problems. Each type of solution provides value in its own way. Some are simple, others elegant, while others sit and spin in a tight loop to use up extra processing cycles because the program doesn't work properly on faster CPUs. Just be aware of what value you are gaining from the approaches that you take towards your solution. If it's the only way you know, that is a good first step. Remember to keep looking, because there may be better solutions in some way that you value more.

I Found "The Silver Bullet"!

general, leadership, communication, CodeProject, maintainability, knowledge

I found the metaphorical Silver Bullet that everyone has been searching for in software development and it worked beautifully on my last project. Unfortunately, I only had one of them. I am pretty sure that I could create another one if I ever have to work with a beast that is similar to my last project. However, I don't think that my bullet would be as effective if the circumstances surrounding the project vary too much from my original one.

No Silver Bullet

To my knowledge, the source of this term is Fred Brooks, the author of The Mythical Man-Month. He wrote a paper in 1986 called No Silver Bullet -- Essence and Accident in Software Engineering. Brooks posits:

There is no single development, in either technology or management technique, which by itself promises even one order-of-magnitude improvement within a decade in productivity, in reliability, in simplicity.

The concepts and thoughts that I present in this entry are deeply inspired by the assertions that he states in his paper.

There have been a number of studies and papers that challenge the assertions that Brooks presents in this paper. To this day, the only way that programmers have increased their productivity by an order of magnitude has been by moving to a higher-level programming language. This comparison is done by comparing the productivity between programmers developing in assembly and C, then comparing C to the next level, such as C# or Java, and then on to scripting languages.

The Silver Bullet

For thoroughness

I feel obligated to let you know where the term Silver Bullet originates. The mythical creature, the werewolf, is fabled to only be able to be killed by silver weapons. The modern day equivalent is a bullet made from silver. Depending on which mythology, author, video game or table-top gaming platform you are most fond of, other types of weapons may harm the creature, but only temporarily. Unless it is made from pure silver, it will not completely kill the creature.

For the uninitiated

I feel obligated to interpret this metaphor, which I think is a great metaphor. Your project is the werewolf. For decades, millions of programmers and project managers have been searching for a way to kill it; that is, to reliably execute the development of a software project. Software development has been notoriously plagued by cost and schedule overruns due to the poor ability of estimating the costs required to develop a project. The silver bullet is that special tool, process, programming language, programmer food or sensory deprivation chamber that tames this beast and allows a project to be developed with some increased form of reliable predictability.

Software Engineering

I want to take a scenic detour from the primary topic of this post, to perform an exercise that may help demonstrate my point.

Ever since I started programming I have heard debates and also wondered myself, "Is computer programming a science or an art?" I have never heard a convincing argument or definition that I could accept. Each year I find myself further away from what I believe to be a valid definition because I have stumbled upon many other titles that could classify where a computer programmer fits in the caste of our workforce. This is a non-exhaustive list of other labels that seem to have fit at one time or another:

- Form of Engineering

- Science

- Art

- Craft

- Trade

- Linguistic Translator

- Competitive Sport (both formally and informally)

- Modern day form of a Charlatan / Snake-oil salesman / Witch-doctor

- A way to get paid for working with my hobby

To create a precise definition for the practice of computer programming is much like trying to nail Jell-O to the wall. No matter where you go, it will be practiced differently. The culture will be different. The management will be different. The process will be different. The favored framework will be different. Experience can teach us, mold us, and jade us, just to name a few ways in which we can change. I have collected a list of my experiences that span my career in an attempt to classify a computer programmer.

Experience

I can only speak from my personal research and experiences, and both of those have mostly been focused on the realm of software development. Here is a broad sampling of my experiences related to software development.

I have read scores of books that span many aspects of software development, such as...

- ...the programming languages du jour

- ...the programming processes du jour

- ...the programming styles du jour

- ...C++, lots of books related to C++

- ...books on the sociology of software development

- ...books only tangentially related to software development because they were right next to the software development section in the bookstore

I have worked for a variety of companies, which...

- ...had many intelligent people

- ...had people of much less intelligence

- ...had enthusiastic learners

- ...had people with passion for technology

- ...had people I always wondered how they passed the stringent hiring process

- ...had bosses with a variety of management styles

- ...aimed for completely different goals

- ...valued results that ranged from the next quarter to ten years from now

Over my career I have also...

- ...learned many things from colleagues

- ...spent time unlearning some things

- ...helped others understand and become better developers

- ...written a lot of code

- ...learned that the amount of code I have written is too much, there are better ways

- ...deleted a lot of code (after my thumbs, my right pinky is the digit on my hand that I value the most)

- ...lost a lot of code due to power outages, workstation upgrades by IT, and I suspect from BigFoot

- ...repeated myself far too much

- ...gathered many requirements

- ...communicated and miscommunicated with many people

- ...rewritten someone else's code because my way is cleaner

- ...had my cleaner code rewritten back to its original form

- ...had many fantastic ideas

- ...created designs for a smaller number of those fantastic ideas

- ...implemented even fewer programs based on those designs

- ...implemented programs based on other people's designs

- ...criticized the poor quality of untold amounts of code

- ...been humbled when I discovered I wrote some of that code

- ...been shocked when I looked at old code of mine and literally said out loud "This is my code?! When did I learn how to do that, and why don't I remember, because that's something I've always wanted to learn how to do?!"

- ...become too emotionally attached to projects (yes, multiple times)

- ...reached a point where I lost all motivation on a project and it was excruciating to watch how long the LEDs would take to blink 3600 (or if you prefer 0xE10) times each hour

- ...learned how to articulate most of my ideas

- ...become a better listener

- ...learned that no matter how similar a situation is, my next experience will always be different

To summarize my experiences

I have worked for a half-dozen companies in a variety of roles. Although there have been similarities, each of these companies still had completely unique talent levels, cultures, goals, company values, management styles, reward systems and more. These factors played into how well the teams worked together, the quality of products the company produced, and how pleased the customers were. In the end, this affected how they defined the role of programmer or software engineer.

Instincts

There is a very strong observation that I have silently mulled over for a few years now. The digital world has turned the world we know upon its head. In true digital form, there are 10 types of people in this world, those that can grasp the abstract concepts encoded in digital technology, and those that can't.

It seems that most people develop some sort of intuition when it comes to the physical world. You can sense qualities about any physical object with possibly all five of your senses. Will it be soft and cuddly, heavy and smooth, squishy and sticky? Those of you old enough will remember TVs when they were large, heavy and thick; you know, Cathode Ray Tube (CRT) technology. For some reason, we had this instinct to whack the TV on the side. One or two whacks usually did the trick. Why? The mechanical components like the vacuum tubes were becoming unseated. A firm jolt let them settle correctly back into place.

Now consider a digital circuit. To all but the experts, it is not possible to tell if that circuit card is running properly. I had a flat-screen TV give out, and before I decided to throw it out, thought I would look online to see if there was a solution, and there was. It turned out to be cheap capacitors going bad on the power board. I opened the TV up to replace the bad capacitors, and luckily for me that is all that it was. I would have had no idea if something had gone wrong on the IC with more complex components. No amount of whacking on the side of a digital TV or computer monitor will resolve the issue.

Abstract Thought

The ability to grasp the abstract digital concepts is a valuable talent. Other engineering disciplines such as electrical engineering, chemical engineering, and astrophysics are like magic to most people not in those fields. However, there is one sound basis upon which they are all built, and that is physics. These fields are based on the laws and models that humankind developed to approximate the best we can understand about our physical world.

What is computing based upon? The physics that allow the electrical circuits to switch at lightning speed in a defined pattern to create some effect, generally a calculation. It's time to consider how we define these patterns to compute.

Computer programming is an activity that articulates an amorphous abstract thought in a manner precise enough to be interpreted and executed by a computer. We are converting this thought pulsing in our minds into a language of sorts, to communicate with a digital creature. That is fascinating.

What is troubling is that 8 developers (we'll go back to base 10) can be sitting in a room, listening to a set of requirements, and then independently recreate that list of requirements. Quite often the result is 9 distinct lists of requirements (remember the original requirements). If these 8 developers went off to converse with their digital pet, each of them would create very similar programs and results, yet they would all differ because of each programmer's interpretation. That is not even considering programmers that do not quite understand all of the nuances of the language with which they are commanding their computer.

What was the question again?

What is Software Engineering?

It's mostly an engineering discipline and also has a strong foundation in science.

It can be a form of art, but mostly only appreciated by other programmers that understand the elegance and beauty of what the program does.

I think it is definitely a trade or a craft. The more you practice, the better you become and natural talent can sure help as well.

It was only recently that I considered the communication/linguistic/translation concept, especially when abstract thoughts and concepts are factored into how those ideas are translated. Math is very similar in its abstract concepts and models that we have created. However, math is also much better defined than computer programming.

To me, programming is very much like writing an essay on a book only using mathematical notations.

The digital nature and complexity of computer programs allow us to become charlatans. It's possible to tell your managers and customers that your program does the right thing, even though it's only good enough to appear to do the right thing. If they find the bug, we'll create a patch for that; maybe.

Software Engineering is many things, most of them great. However, it is still a relatively new discipline humans are attempting to master. It does not work very well to compare this profession to other engineering professions, because we are not bound by the laws of physics. Human creativity and stupidity are the forces that limit what can be done with computers.

Back to the Silver Bullet

I think we should continue to develop new tools, processes and energy drinks that will help developers write better code. I also think that communication is an aspect that really needs to be explored to further solidify the definition of this profession.

In order to improve our processes, everyone who has a stake in the project must consider the differences between the next project and the previous project. A team that works well and communicates effectively at a company that is doing well and has a great culture will outperform that exact same team at a company with layoffs looming in the near future. It would be interesting to take the great team working at the great company and scale the project and personnel size up by 5 times.

What will still be good?

What could go wrong?

What will need to change for the new team to succeed on this project?

Success through feedback

I think it's possible for the new team to succeed. However, they have to consider the differences between their new project and their past ones. They can't expect processes that worked well for a team of four to work equally well with a team of 20 without making adjustments. When the work is underway, the team will need to be observant and use the new information they receive to adjust their processes to ensure success.

Summary

There is no Silver Bullet. Well, maybe one or two. But not every project is a werewolf. If you run into BigFoot, don't waste your silver bullet, just protect your data.

Alchemy: Message Serialization

portability, reliability, CodeProject, C++, maintainability, Alchemy, design

This is an entry in the continuing series of blog entries that documents the design and implementation process of a library. This library is called Network Alchemy[^]. Alchemy performs data serialization and is written in C++. It is an open source project and can be found on GitHub.

If you have read the previous Alchemy entries, you know that I have now shown the structure of the Message host. I have also demonstrated how the different fields are programmatically processed to convert the byte-order of the message. In the previous Alchemy post I put together the internal memory management object. All of the pieces are in place to demonstrate the final component of the core of Alchemy: serialization.

Serialization

Serialization is a mundane and error-prone task. Generally, both a read and a write operation are required to provide any value. Serialization can occur on just about any medium, including files, sockets, pipes, and consoles, to name a few. The primary purpose of a serialization task is to convert a locally represented object into a data stream. The data stream can then be stored or transferred to a remote location. Later, the stream will be read back in and converted to an implementation-defined object.

It is possible to simply pass the object exactly as you created it, but only in special situations. You must be working on the same machine as the second process. Your system will require the proper security and resource configuration between processes, such as a shared memory buffer. Even then there are issues with how memory is allocated. Are the two programs developed with the same compiler? A lot of flexibility is lost when raw pointers to objects are shared between processes. In most cases I would recommend against doing that.

Serialization Types

There are two ways that data can be serialized:

- Text Serialization:

Text serialization works with basic text and symbols. This scenario often happens when editing a raw text file in Notepad. When the file is saved in Notepad, it writes out the text in plain text. Configuration and XML files are other examples of files that are stored in plain text. This makes it convenient for users to hand-edit these files. Again, all data is serialized to a human-readable format (usually).

- Binary Serialization:

Binary serialization is simply that, a stream of binary bytes. As binary is only 1s and 0s, it is not human friendly for reading and manipulating. Furthermore, if your binary serialized data will be used on multiple systems, it is important to make sure the binary formats are compatible. If they are not compatible, adapter software can be used to translate the data into a compatible format for the new system. This is one of the primary reasons Alchemy was created.
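To make the difference concrete, here is a minimal sketch that writes the same 32-bit value both ways. The function names are hypothetical and are not part of Alchemy. The binary form picks big-endian (network) order explicitly, which is one way to keep the byte stream compatible between systems regardless of the host's native byte order.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Text form: the decimal digits of the value, readable in any editor.
std::string to_text(std::uint32_t value)
{
  return std::to_string(value);
}

// Binary form: four bytes in an explicitly chosen order (big-endian here),
// so the result does not depend on the byte order of the host machine.
std::vector<std::uint8_t> to_binary_big_endian(std::uint32_t value)
{
  std::vector<std::uint8_t> bytes(4);
  bytes[0] = static_cast<std::uint8_t>((value >> 24) & 0xFF);
  bytes[1] = static_cast<std::uint8_t>((value >> 16) & 0xFF);
  bytes[2] = static_cast<std::uint8_t>((value >>  8) & 0xFF);
  bytes[3] = static_cast<std::uint8_t>( value        & 0xFF);
  return bytes;
}

// Reading reverses the write. The caller must supply at least four bytes.
std::uint32_t from_binary_big_endian(const std::vector<std::uint8_t>& bytes)
{
  assert(bytes.size() >= 4);
  return (static_cast<std::uint32_t>(bytes[0]) << 24)
       | (static_cast<std::uint32_t>(bytes[1]) << 16)
       | (static_cast<std::uint32_t>(bytes[2]) <<  8)
       |  static_cast<std::uint32_t>(bytes[3]);
}
```

The value 0x12345678 becomes the nine characters "305419896" as text, but only the four bytes 12 34 56 78 in binary. The binary form is more compact, but the reader has to agree with the writer on the width and byte order of every field.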

Alchemy and Serialization

Alchemy serializes data in binary formats. The primary component in Alchemy is called Hg (Mercury - Messenger of the Gods). Hg is focused only on the correct transformation and serialization of data. On one end, Hg provides a simple object interface that behaves similarly to a struct. On the other end, the data is serialized and you will receive a buffer that is packed according to the format that you have specified for the message. You will be able to send this buffer directly to any transport medium. Hg is also capable of reading input streams and populating an Hg Message object.

Integrating the Message Buffer

The MsgBuffer will remain an internal detail of the Message object that the user interacts with. However, there is one additional definition that will need to be added to the Message template parameters. That is the StoragePolicy chosen by the user. This will allow the same message format implementation to be used to interact with many different types of mediums. Here is a list of potential storage policies that could be integrated with Alchemy:

- User-supplied buffer

- Alchemy managed

- Hardware memory maps

The additional template parameter and the related typedefs are shown below:

C++

template < class MessageT,
           class ByteOrderT = Hg::HostByteOrder,
           class StorageT   = Hg::BufferedStoragePolicy
         >
struct DemoTypeMsg
{
  // Define an alias to provide access to this parameterized type.
  typedef MessageT                        format_type;

  typedef StorageT                        storage_type;

  typedef typename
    storage_type::data_type               data_type;
  typedef data_type*                      pointer;
  typedef const data_type*                const_pointer;

  typedef MsgBuffer< storage_type >       buffer_type;
  typedef std::shared_ptr< buffer_type >  buffer_sptr;

  // ... Field declarations
private:
  buffer_type       m_msgBuffer;
};

The Alchemy-managed storage policy, Hg::BufferedStoragePolicy, is specified by default. I have also implemented a storage policy called Hg::StaticStoragePolicy that allows the user to supply their own buffer. This is included with the Alchemy source.
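For readers who have not used policy-based design before, the following is a minimal sketch of the idea, not Alchemy's actual implementation. HeapStorage, FixedStorage, and PackedBuffer are hypothetical names invented for this illustration. The only assumption is that a storage policy exposes a data_type and a small set of functions that the buffer host calls.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical policy: the buffer owns heap memory that can grow.
struct HeapStorage
{
  typedef std::uint8_t data_type;

  bool resize(std::size_t n)    { m_data.resize(n); return true; }
  std::size_t size() const      { return m_data.size(); }
  data_type*       data()       { return m_data.data(); }
  const data_type* data() const { return m_data.data(); }

private:
  std::vector<data_type> m_data;
};

// Hypothetical policy: a fixed block of N bytes, a stand-in for a
// user-supplied buffer. It refuses to grow past its capacity.
template <std::size_t N>
struct FixedStorage
{
  typedef std::uint8_t data_type;

  bool resize(std::size_t n)
  {
    if (n > N) { return false; }
    m_size = n;
    return true;
  }
  std::size_t size() const      { return m_size; }
  data_type*       data()       { return m_data.data(); }
  const data_type* data() const { return m_data.data(); }

private:
  std::array<data_type, N> m_data{};
  std::size_t              m_size = 0;
};

// The host only talks to the policy interface, so the same packing
// code works with either kind of storage.
template <class StorageT>
class PackedBuffer
{
public:
  typedef typename StorageT::data_type data_type;

  bool resize(std::size_t n)    { return m_storage.resize(n); }
  std::size_t size() const      { return m_storage.size(); }
  const data_type* data() const { return m_storage.data(); }

  // Copy the raw bytes of a trivially copyable value to the given offset.
  // Returns false if the value does not fit inside the buffer.
  template <class T>
  bool set_data(const T& value, std::size_t offset)
  {
    const std::size_t total = m_storage.size();
    if (offset > total || sizeof(T) > total - offset) { return false; }
    std::memcpy(m_storage.data() + offset, &value, sizeof(T));
    return true;
  }

private:
  StorageT m_storage;
};
```

Switching between the two is then a matter of changing one template argument, for example PackedBuffer< HeapStorage > versus PackedBuffer< FixedStorage< 64 > >.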

Programmatic Serialization

The solution for serialization is very similar to the byte-order conversion logic that was demonstrated in the post where I introduced the basic Alchemy: Prototype[^]. Once again we will use the ForEachType static for loop that I implemented to serialize the Hg::Messages. This will require a functor to be created for both input and output serialization.

Since I have already presented the details that describe how this static for-loop processing works, I am going to present serialization from top to bottom. We will start with how the user interacts with the Hg::Message, and continue to step deeper into the processing until the programmatic serialization is performed.

User Interaction

C++

// Create typedefs for the message.
// A storage policy is provided by default.
typedef Message< DemoTypeMsg, HostByteOrder >    DemoMsg;
typedef Message< DemoTypeMsg, NetByteOrder >     DemoMsgNet;

// Populate the data in Host order.
DemoMsg msg;

msg.letter = 'A';
msg.count  = sizeof(short);
msg.number = 100;

// The data will be transferred over a network connection.
DemoMsgNet netMsg = to_network(msg);

// Serialize the data and transfer over our open socket.
// netMsg.data() initiates the serialization,
// and returns a pointer to the buffer.
send(sock, netMsg.data(), netMsg.size(), 0);

This is the definition of the user-accessible function, data(). This code first casts the this pointer to a non-const form in order to call a private member-function that initiates the operation. This is required so the m_msgBuffer field can be modified to store the data. There are a few other options. The first is to remove the const qualifier from this function. This is not a good solution because it would make it impossible to get serialized data from objects declared const. The other option is to declare m_msgBuffer as mutable. However, the form shown here provides the simplest solution, and limits the modification of m_msgBuffer to this function alone.

C++

// *********************************************************
/// Returns a pointer to the memory buffer
/// that contains the packed message.
///
const_pointer data() const
{
  Message *pThis = const_cast< Message* >(this);
  pThis->pack_data();

  return m_msgBuffer.data();
}
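For comparison, here is a minimal sketch of the mutable alternative mentioned above. Packet is a hypothetical class invented for this illustration and is not part of Alchemy. One caveat that is worth keeping in mind when choosing between the two forms: the C++ standard treats modifying an object that was itself declared const as undefined behavior, and members declared mutable are exempt from that rule.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical message with two one-byte fields and a lazily packed cache.
class Packet
{
public:
  Packet(std::uint8_t letter, std::uint8_t count)
    : m_letter(letter)
    , m_count(count)
  { }

  // Logically const: the observable field values do not change,
  // only the cached byte buffer is refreshed.
  const std::uint8_t* data() const
  {
    m_cache.resize(2);
    m_cache[0] = m_letter;
    m_cache[1] = m_count;
    return m_cache.data();
  }

  std::size_t size() const { return 2; }

private:
  std::uint8_t m_letter;
  std::uint8_t m_count;

  // mutable permits modification from every const member function.
  // That wider exposure is what the const_cast form limits to one function.
  mutable std::vector< std::uint8_t > m_cache;
};
```

With this form, a Packet declared const can still hand out its packed bytes, at the cost of every const member-function being allowed to touch m_cache.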

In turn, the private member-function calls a utility function that initiates the process:

C++

// **********************************************************
void pack_data()
{
  m_msgBuffer = *pack_message < message_type,
                                buffer_type,
                                size_trait
                              >(values(), size()).get();
}

Message packing details

Now we are behind the curtain where the work begins. Again, you will notice that this first function is a global top-level parameterized function, which calls another function. The reason for this is the generality of the final implementation. When nested fields are introduced, processing will return to this point through a specialized form of this function. This is necessary to allow nested message formats to also be used as independent top-level message formats.

C++

template< class MessageT,
          class BufferT
        >
std::shared_ptr< BufferT >
  pack_message( MessageT& msg_values,
                size_t    size)
{
  return detail::pack_message < MessageT,
                                BufferT
                              >(msg_values,
                                size);
}

... And just like the line at The Hollywood Tower Hotel ride at the California Adventure theme park, the ride has started and you weren't even aware. But, there's another sub-routine.

C++

template< typename MessageT,
          typename BufferT
        >
std::shared_ptr< BufferT >
  pack_message( MessageT &msg_values,
                size_t    size)
{
  // Allocate a new buffer manager.
  std::shared_ptr< BufferT > spBuffer(new BufferT);
  // Resize the buffer.
  spBuffer->resize(size);
  // Create an instance of the
  // functor for serializing to a buffer.
  detail::PackMessageWorker
    < 0,
      Hg::length< typename MessageT::format_type >::value,
      MessageT,
      BufferT
    > pack;   // Note: pack is the instantiated functor.

  // Call the function operator in pack.
  pack(msg_values, *spBuffer.get());
  return spBuffer;
}

Here is the implementation of the pack function object:

C++

template< size_t   Idx,
          size_t   Count,
          typename MessageT,
          typename BufferT
        >
struct PackMessageWorker
{
  void operator()(MessageT &message,
                  BufferT  &buffer)
  {
    // Write the current value, then move to
    // the next value for the message.
    size_t dynamic_offset = 0;
    // WriteDatum is a functor: create an instance, then call it.
    WriteDatum< Idx, MessageT, BufferT > write;
    write(message, buffer);

    PackMessageWorker < Idx+1, Count, MessageT, BufferT> pack;
    pack(message, buffer);
  }
};

This should start to look familiar if you read the Alchemy: Prototype entry. Hopefully repetition does not bother you, because that is what recursion is all about. This function will first call a template functor called WriteDatum, which performs the serialization of the current data field. Then a new instance of the PackMessageWorker functor is created to perform serialization of the type at the next index. To satisfy your curiosity, here is the implementation for WriteDatum:

C++

template< size_t   IdxT,
          typename MessageT,
          typename BufferT
        >
struct WriteDatum
{
  void operator()(MessageT &msg,
                  BufferT  &buffer)
  {
    typedef typename
      Hg::TypeAt
        < IdxT,
          typename MessageT::format_type
        >::type                                 value_type;

    value_type value  = msg.template FieldAt< IdxT >().get();
    size_t     offset =
      Hg::OffsetOf< IdxT, typename MessageT::format_type >::value;

    buffer.set_data(value, offset);
  }
};
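The worker shown above only covers the general case. A recursion like this also needs a terminating case for the index equal to Count, which is presumably supplied elsewhere in Alchemy. The following self-contained sketch shows the whole pattern in miniature, under a few loud assumptions: std::tuple stands in for the Hg type list, and PackedOffset, PackWorker, and pack are hypothetical names that are not part of Alchemy. Values are copied in host byte order; byte-order conversion is a separate step.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <tuple>
#include <vector>

// Offset of field Idx in a packed layout:
// the sum of the sizes of all of the earlier fields.
template <std::size_t Idx, class TupleT>
struct PackedOffset
{
  static const std::size_t value =
      PackedOffset<Idx - 1, TupleT>::value
    + sizeof(typename std::tuple_element<Idx - 1, TupleT>::type);
};

template <class TupleT>
struct PackedOffset<0, TupleT>
{
  static const std::size_t value = 0;
};

// General case: write field Idx, then recurse to Idx + 1.
template <std::size_t Idx, std::size_t Count, class TupleT>
struct PackWorker
{
  void operator()(const TupleT& fields,
                  std::vector<std::uint8_t>& buffer) const
  {
    typedef typename std::tuple_element<Idx, TupleT>::type value_type;

    const value_type value = std::get<Idx>(fields);
    std::memcpy(buffer.data() + PackedOffset<Idx, TupleT>::value,
                &value,
                sizeof(value_type));

    PackWorker<Idx + 1, Count, TupleT> next;
    next(fields, buffer);
  }
};

// Terminating case: Idx has reached Count, so there is nothing left to write.
template <std::size_t Count, class TupleT>
struct PackWorker<Count, Count, TupleT>
{
  void operator()(const TupleT&, std::vector<std::uint8_t>&) const { }
};

// Entry point: size the buffer for the packed layout, then start at index 0.
template <class TupleT>
std::vector<std::uint8_t> pack(const TupleT& fields)
{
  const std::size_t count = std::tuple_size<TupleT>::value;

  std::vector<std::uint8_t> buffer(PackedOffset<count, TupleT>::value);
  PackWorker<0, count, TupleT> worker;
  worker(fields, buffer);
  return buffer;
}
```

Calling pack on a tuple of a std::uint8_t, a std::uint16_t, and a std::uint32_t produces a 7-byte buffer with no padding between the fields. The compiler unrolls the recursion completely, so nothing is looped over at run-time.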