My previous two entries have presented a mathematical foundation for the development and presentation of 3D computer graphics. Basic matrix operations were presented, which are used extensively with Linear Algebra. Understanding the mechanics and limitations of matrix multiplication is fundamental to the focus of this essay. This entry demonstrates three ways to manipulate the geometry defined for your scene, and also how to construct the projection transform that provides the three-dimensional effect for the final image.

Transforms

There are three types of transformations that are generally used to manipulate a geometric model: translate, scale, and rotate. Your models can be oriented anywhere in your scene with these operations. Ultimately, a single square matrix is composed to perform an entire sequence of transformations as one matrix multiplication operation. We will get to that in a moment.

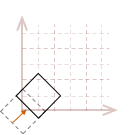

For the illustration of these transforms, we will use the two-dimensional unit-square shown below. However, I will demonstrate the math and algorithms for three dimensions.

\( \eqalign{ pt_{1} &= (\matrix{0 & 0})\\ pt_{2} &= (\matrix{1 & 0})\\ pt_{3} &= (\matrix{1 & 1})\\ pt_{4} &= (\matrix{0 & 1})\\ }\)

Translate

Translating a point in 3D space is as simple as adding the desired offset to the coordinate for each corresponding axis.

\( V_{translate}=V+D \)

For instance, to move our sample unit square from the origin by \(x=2\) and \(y=3\), we simply add \(2\) to the x-component for every point in the square, and \(3\) to every y-component.

\( \eqalign{ pt_{1D} &= pt_1 + [\matrix{2 & 3}] \\ pt_{2D} &= pt_2 + [\matrix{2 & 3}] \\ pt_{3D} &= pt_3 + [\matrix{2 & 3}] \\ pt_{4D} &= pt_4 + [\matrix{2 & 3}] \\ }\)

There is a better way, though.

That will have to wait until after I demonstrate the other two manipulation methods.

Identity Matrix

The first thing that we need to become familiar with is the identity matrix. The identity matrix has ones set along the diagonal with all of the other elements set to zero. Here is the identity matrix for a \(3 \times 3\) matrix.

\( \left\lbrack \matrix{1 & 0 & 0\cr 0 & 1 & 0\cr 0 & 0 & 1} \right\rbrack\)

When any valid input matrix is multiplied with the identity matrix, the result will be the unchanged input matrix. Only square matrices can be identity matrices. However, non-square matrices can be used for multiplication with the identity matrix if they have compatible dimensions.

For example:

\( \left\lbrack \matrix{a & b & c \\ d & e & f \\ g & h & i} \right\rbrack \left\lbrack \matrix{1 & 0 & 0\cr 0 & 1 & 0\cr 0 & 0 & 1} \right\rbrack = \left\lbrack \matrix{a & b & c \\ d & e & f \\ g & h & i} \right\rbrack \)

\( \left\lbrack \matrix{7 & 6 & 5} \right\rbrack \left\lbrack \matrix{1 & 0 & 0\cr 0 & 1 & 0\cr 0 & 0 & 1} \right\rbrack = \left\lbrack \matrix{7 & 6 & 5} \right\rbrack \)

\( \left\lbrack \matrix{1 & 0 & 0\cr 0 & 1 & 0\cr 0 & 0 & 1} \right\rbrack \left\lbrack \matrix{7 \\ 6 \\ 5} \right\rbrack = \left\lbrack \matrix{7 \\ 6 \\ 5} \right\rbrack \)

We will use the identity matrix as the basis of our transformations.

Scale

It is possible to independently scale geometry along each of the standard axes. We first start with a \(2 \times 2\) identity matrix for our two-dimensional unit square:

\( \left\lbrack \matrix{1 & 0 \\ 0 & 1} \right\rbrack\)

As we demonstrated with the identity matrix, if we multiply any valid input matrix with the identity matrix, the output will be the same as the input matrix. This is similar to multiplying by \(1\) with scalar numbers.

\( V_{scale}=VS \)

Based upon the rules for matrix multiplication, notice how there is a one-to-one correspondence between each row/column in the identity matrix and each component in our point.

\(\ \matrix{\phantom{x} & \matrix{x & y} \\ \phantom{x} & \phantom{x} \\ \matrix{x \\ y} & \left\lbrack \matrix{1 & 0 \\ 0 & 1} \right\rbrack } \)

We can create a linear transformation to scale a point by simply multiplying the \(1\) in the identity matrix by the desired scale factor for each standard axis. For example, let's make our unit-square twice as large along the \(x\)-axis and half as large along the \(y\)-axis:

\(\ S= \left\lbrack \matrix{2 & 0 \\ 0 & 0.5} \right\rbrack \)

This is the result after we multiply each point in our square with this transformation matrix. I have also broken out the mathematical calculations for one of the points, \(pt_3(1, 1)\):

\( \eqalign{ pt_{3}S &= [\matrix{1 & 1}] \left\lbrack\matrix{2 & 0 \\ 0 & 0.5} \right\rbrack\\ &= [\matrix{(1 \cdot 2 + 1 \cdot 0) & (1 \cdot 0 + 1 \cdot 0.5)}] \\ &= [\matrix{2 & 0.5}] }\)

Here is the scale transformation matrix for three dimensions:

\(S = \left\lbrack \matrix{S_x & 0 & 0 \\ 0 & S_y & 0 \\ 0 & 0 & S_z }\right\rbrack\)
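As a minimal sketch of the scale transform in code, the Python below multiplies each point of the unit square, treated as a row vector, by the matrix \(S\) from the example. The helper name `transform` is my own choice for illustration and does not come from any library.

```python
def transform(point, matrix):
    """Multiply a row vector by a matrix (V' = V M)."""
    return [sum(p * row[j] for p, row in zip(point, matrix))
            for j in range(len(matrix[0]))]

# The two-dimensional unit square used throughout this entry.
unit_square = [(0, 0), (1, 0), (1, 1), (0, 1)]

# Twice as large along the x-axis, half as large along the y-axis.
S = [[2, 0],
     [0, 0.5]]

scaled = [transform(pt, S) for pt in unit_square]
for before, after in zip(unit_square, scaled):
    print(before, "->", after)
```

The same helper works unchanged for the \(3 \times 3\) scale matrix, because it only relies on the point having as many components as the matrix has rows.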

Rotate

A rotation transformation can be created similarly to how we created a transformation for scale. However, this transformation is more complicated than the previous one. I am only going to present the formula; I am not going to explain the details of how it works. The matrix below will perform a counter-clockwise rotation about a pivot-point that is placed at the origin:

\(\ R= \left\lbrack \matrix{\phantom{-} \cos \Theta & \sin \Theta \\ -\sin \Theta & \cos \Theta} \right\rbrack \)

\(V'=VR\)
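Here is a short sketch of the rotation formula above, assuming the angle is given in degrees and points are row vectors. The function names `rotation_2d` and `transform` are hypothetical helpers for this example. Rotating the unit square by \(90°\) shows that the pivot really is the origin: the corner at \((0, 0)\) stays put while the other corners swing around it.

```python
import math

def rotation_2d(theta_degrees):
    """Counter-clockwise rotation matrix for row vectors (V' = V R)."""
    t = math.radians(theta_degrees)
    return [[math.cos(t), math.sin(t)],
            [-math.sin(t), math.cos(t)]]

def transform(point, matrix):
    """Multiply a row vector by a matrix."""
    return [sum(p * row[j] for p, row in zip(point, matrix))
            for j in range(len(matrix[0]))]

unit_square = [(0, 0), (1, 0), (1, 1), (0, 1)]

R = rotation_2d(90)
rotated = [transform(pt, R) for pt in unit_square]
for before, after in zip(unit_square, rotated):
    # Round only for display; cos(90 degrees) is a tiny non-zero float.
    print(before, "->", [round(c, 6) for c in after])
```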

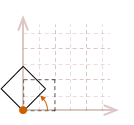

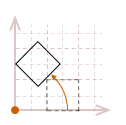

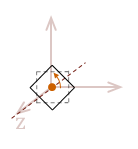

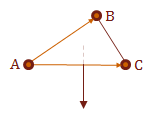

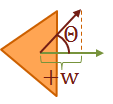

As I mentioned, the rotation transformation pivots the point about the origin. Therefore, if we attempt to rotate our unit square, the result will look like this:

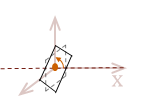

The effects become even more dramatic if the object is not located at the origin:

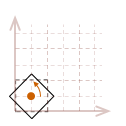

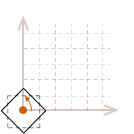

In most cases, this may not be what we would like to have happen. A simpler way to reason about rotation is to place the pivot point in the center of the object, so it appears to rotate in place.

To rotate an object in place, we will need to first translate the object so the desired pivot point of the rotation is located at the origin, rotate the object, then undo the original translation to move it back to its original position.

While all of those steps must be taken in order to rotate an object about its center, there is a way to combine those three steps to create a single matrix. Then only one matrix multiplication operation will need to be performed on each point in our object. Before we get to that, let me show you how we can expand this rotation into three dimensions.

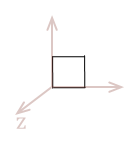

Imagine that our flat unit-square object existed in three dimensions. It is still defined to exist on the \(xy\) plane, but it has a third component added to each of its points to mark its location along the \(z-axis\). We simply need to adjust the definition of our points like this:

\( \eqalign{ pt_{1} &= (\matrix{0 & 0 & 0})\\ pt_{2} &= (\matrix{1 & 0 & 0})\\ pt_{3} &= (\matrix{1 & 1 & 0})\\ pt_{4} &= (\matrix{0 & 1 & 0})\\ }\)

So, you would be correct to surmise that in order to perform this same \(xy\) rotation about the \(z-axis\) in three dimensions, we simply need to begin with a \(3 \times 3\) identity matrix before we add the \(xy\) rotation.

\( R_z = \left\lbrack \matrix{ \phantom{-}\cos \Theta & \sin \Theta & 0 \\ -\sin \Theta & \cos \Theta & 0 \\ 0 & 0 & 1 } \right\rbrack \)

We can also adjust the rotation matrix to perform a rotation about the \(x\) and \(y\) axes, respectively:

\( R_x = \left\lbrack \matrix{ 1 & 0 & 0 \\ 0 & \phantom{-}\cos \Theta & \sin \Theta \\ 0 & -\sin \Theta & \cos \Theta } \right\rbrack \)

\( R_y = \left\lbrack \matrix{ \cos \Theta & 0 & -\sin \Theta\\ 0 & 1 & 0 \\ \sin \Theta & 0 & \phantom{-}\cos \Theta } \right\rbrack \)

Composite Transform Matrices

I mentioned that it is possible to combine a sequence of matrix transforms into a single matrix. This is highly desirable, considering that every point in an object model needs to be multiplied by the transform. What I demonstrated up to this point is how to individually create each of the transform types: translate, scale and rotate. Technically, the translate operation, \(V'=V+D\), is not a linear transformation: it moves the origin, so it cannot be expressed as multiplication by a \(3 \times 3\) matrix. Before we can continue, we must find a way to turn the translation operation from a matrix add into a matrix multiplication.

It turns out that we can create a translation operation that is a linear transformation by adding another row to our transformation matrix. However, a transformation matrix must be square because it starts with the identity matrix. So we must also add another column, which means we now have a \(4 \times 4\) matrix:

\(T = \left\lbrack \matrix{1 & 0 & 0 & 0\\ 0 & 1 & 0 & 0\\ 0 & 0 & 1 & 0\\ t_x & t_y & t_z & 1 }\right\rbrack\)

A general transformation matrix is in the form:

\( \left\lbrack \matrix{ a_{11} & a_{12} & a_{13} & 0\\ a_{21} & a_{22} & a_{23} & 0\\ a_{31} & a_{32} & a_{33} & 0\\ t_x & t_y & t_z & 1 }\right\rbrack\)

Where \(t_x\),\(t_y\) and \(t_z\) are the final translation offsets, and the sub-matrix \(a\) is a combination of the scale and rotate operations.

However, this matrix is not compatible with our existing point definitions because they only have three components.

Homogenous Coordinates

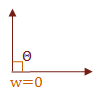

The final piece that we need to finalize our geometry representation and coordinate management logic is called Homogenous Coordinates. What this basically means, is that we will add one more parameter to our vector definition. We refer to this fourth component as, \(w\), and we initially set it to \(1\).

\(V(x,y,z,w) = [\matrix{x & y & z & 1}]\)

Our vector is only valid when \(w=1\). After we transform a vector, \(w\) has often changed to some other scale factor. Therefore, we must rescale our vector back to its original basis by dividing each component by \(w\). The equations below show the three-dimensional Cartesian coordinate representation of a vector, where \(w \ne 0\).

\( \eqalign{ x&= X/w \\ y&= Y/w \\ z&= Z/w }\)
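The conversion in both directions is small enough to sketch directly. The function names `to_homogeneous` and `to_cartesian` below are assumptions made for this example; the second one performs the divide by \(w\) shown above and rejects \(w = 0\), where the division is undefined.

```python
def to_homogeneous(point):
    """Append w = 1 to a 3D point: (x, y, z) -> [x, y, z, 1]."""
    x, y, z = point
    return [x, y, z, 1]

def to_cartesian(vector):
    """Divide by w to return to three-dimensional Cartesian coordinates."""
    X, Y, Z, w = vector
    if w == 0:
        raise ValueError("w must be non-zero to convert back to Cartesian form")
    return [X / w, Y / w, Z / w]

print(to_homogeneous((1, 2, 3)))      # [1, 2, 3, 1]
print(to_cartesian([2, 4, 6, 2]))     # [1.0, 2.0, 3.0]
```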

Creating a Composite Transform Matrix

We now possess all of the knowledge required to compose a linear transformation sequence that is stored in a single, compact transformation matrix. So let's create the rotation sequence that we discussed earlier. We will compose a transform that translates an object to the origin, rotates it about the \(z\)-axis, then translates the object back to its original position.

Each individual transform matrix is shown below in the order that it must be multiplied into the final transform.

\( T_c R_z T_o = \left\lbrack \matrix{ 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ -t_x & -t_y & -t_z & 1 \\ } \right\rbrack \left\lbrack \matrix{ \phantom{-}\cos \Theta & \sin \Theta & 0 & 0 \\ -\sin \Theta & \cos \Theta & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \\ } \right\rbrack \left\lbrack \matrix{ 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ t_x & t_y & t_z & 1 \\ } \right\rbrack \)

Here, values are selected and the result matrix is calculated:

\(t_x = 0.5, t_y = 0.5, t_z = 0.5, \Theta = 45°\)

\( \eqalign{ T_c R_z T_o &= \left\lbrack \matrix{ 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ -0.5 & -0.5 & -0.5 & 1 \\ } \right\rbrack \left\lbrack \matrix{ 0.707 & 0.707 & 0 & 0 \\ -0.707 & 0.707 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \\ } \right\rbrack \left\lbrack \matrix{ 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0.5 & 0.5 & 0.5 & 1 \\ } \right\rbrack \\ \\ &=\left\lbrack \matrix{ \phantom{-}0.707 & 0.707 & 0 & 0 \\ -0.707 & 0.707 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0.5 & -0.207 & 0 & 1 \\ } \right\rbrack }\)

It is possible to derive the final composite matrix by using algebraic manipulation to multiply the transforms with the unevaluated variables. The matrix below is the simplified definition for \(T_c R_z T_o\) from above:

\( T_c R_z T_o = \left\lbrack \matrix{ \phantom{-}\cos \Theta & \sin \Theta & 0 & 0 \\ -\sin \Theta & \cos \Theta & 0 & 0 \\ 0 & 0 & 1 & 0 \\ (-t_x \cos \Theta + t_y \sin \Theta + t_x) & (-t_x \sin \Theta - t_y \cos \Theta + t_y) & 0 & 1 \\ } \right\rbrack\)
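To check the arithmetic, here is a minimal sketch that builds the three \(4 \times 4\) matrices and multiplies them in the order shown, using the same values (\(t = 0.5\) on every axis, \(\Theta = 45°\)). The helper names `matmul`, `translation`, and `rotation_z` are my own for this illustration.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def translation(tx, ty, tz):
    """Row-vector translation matrix: the offsets live in the bottom row."""
    return [[1, 0, 0, 0],
            [0, 1, 0, 0],
            [0, 0, 1, 0],
            [tx, ty, tz, 1]]

def rotation_z(theta_degrees):
    """Counter-clockwise rotation about the z-axis for row vectors."""
    t = math.radians(theta_degrees)
    c, s = math.cos(t), math.sin(t)
    return [[c, s, 0, 0],
            [-s, c, 0, 0],
            [0, 0, 1, 0],
            [0, 0, 0, 1]]

T_c = translation(-0.5, -0.5, -0.5)   # move the pivot to the origin
R_z = rotation_z(45)                  # rotate about the z-axis
T_o = translation(0.5, 0.5, 0.5)      # move the pivot back

composite = matmul(matmul(T_c, R_z), T_o)
for row in composite:
    print([round(value, 3) for value in row])

# The pivot point itself should not move when the composite is applied.
moved_center = matmul([[0.5, 0.5, 0.5, 1]], composite)[0]
print([round(value, 3) for value in moved_center])
```

Because the composite is a single matrix, every point in the model costs one vector-matrix multiplication, no matter how many individual transforms were folded into it.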

Projection Transformation

The last thing to do is to convert our 3D model into an image. We have three-dimensional coordinates that must be mapped to a two-dimensional surface. To do this, we will project a view of our world-space onto a flat two-dimensional screen. This is known as the "projection transformation" or "projection matrix".

Coordinate Systems

It may already be apparent, but if not I will state it anyway: a linear transformation is a mapping between two coordinate systems. Whether we translate, scale or rotate, we are simply changing the location and orientation of the origin. We have actually used the origin as our frame of reference, so our transformations appear to be moving our geometric objects. This is a valid statement, because the frame of reference determines many things.

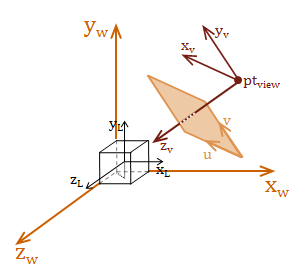

We need a frame of reference that can be used as the eye or camera. We call this frame of reference the view space, and the eye is located at the view-point. In fact, up to this point we have also used two other vector-spaces: local space (also called model space) and world space. To be able to create our projection transformation, we need to introduce one last coordinate space called screen space. Screen space is a 2D coordinate system defined by the \(uv\)-plane, and it maps directly to the display image or screen.

The diagram below depicts all four of these different coordinate spaces:

The important concept to understand is that all of these coordinate systems are positioned, scaled, and oriented relative to each other. We construct our transforms to move between these coordinate spaces. To render a view of the scene, we need to construct a transform to go from world space to view space.

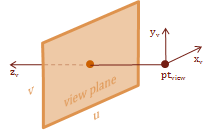

View Space

The view space is the frame of reference for the eye: basically, our view of the scene. This coordinate space allows us to transform the world coordinates to a position relative to our view point. This makes it possible for us to create a projection of this visible scene onto our view plane.

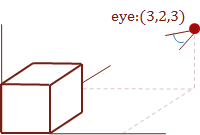

We need a point and two vectors to define the location and orientation of the view space. The method I demonstrate here is called the "eye, at, up" method. This is because we specify a point to be the location of the eye, a vector from the eye to the focal-point, at, and a vector that indicates which direction is up. I like this method because I think it is an intuitive model for visualizing where your view-point is located, how it is oriented, and what you are looking at.

Right or Left Handed?

The view space is a left-handed coordinate system, which differs from the right-handed coordinate systems that we have used up to this point. In math and physics, right-handed systems are the norm. This places the \(y-axis\) up, and the \(x-axis\) pointing to the right (same as the classic 2D Cartesian grid), and the resulting \(z-axis\) will point toward you. For a left-handed space, the positive \(z-axis\) points into the paper. This makes things very convenient in computer graphics, because \(z\) is typically used to measure and indicate depth.

You can tell which type of coordinate system you have created with your hands. Point the fingers of an open hand along the positive \(x\)-axis, with the palm facing the positive \(y\)-axis, and extend your thumb. The thumb of your right hand points along the positive \(z\)-axis of a right-handed system; the thumb of your left hand points along the positive \(z\)-axis of a left-handed system.

Let's define a simple view space transformation.

\( \eqalign{ eye&= [\matrix{2 & 3 & 3}] \\ at&= [\matrix{0 & 0 & 0}] \\ up&= [\matrix{0 & 1 & 0}] }\)

Simple Indeed.

From this, we can create a view located at \([\matrix{2 & 3 & 3}]\) that is looking at the origin and is oriented vertically (no tilt to either side). We start by using the vector operations, which I demonstrated in my previous post, to define vectors for each of the three coordinate axes. In the equations below, \(\| v \|\) denotes the vector \(v\) normalized to unit length.

\( \eqalign{ z_{axis}&= \| at-eye \| \\ &= \| [\matrix{-2 & -3 & -3}] \| \\ &= [\matrix{-0.4264 & -0.6396 & -0.6396}] \\ \\ x_{axis}&= \| up \times z_{axis} \| \\ &=\| [\matrix{-3 & 0 & 2}] \|\\ &=[\matrix{-0.8321 & 0 & 0.5547}]\\ \\ y_{axis}&= \| z_{axis} \times x_{axis} \| \\ &= [\matrix{-0.3548 & 0.7687 & -0.5322}] }\)

We now take these three vectors and plug them into this composite matrix, which is designed to transform world space to a new coordinate space defined by axis-aligned unit vectors.

\( \eqalign { T_{view}&=\left\lbrack \matrix{ x_{x_{axis}} & x_{y_{axis}} & x_{z_{axis}} & 0 \\ y_{x_{axis}} & y_{y_{axis}} & y_{z_{axis}} & 0 \\ z_{x_{axis}} & z_{y_{axis}} & z_{z_{axis}} & 0 \\ -(x_{axis} \cdot eye) & -(y_{axis} \cdot eye) & -(z_{axis} \cdot eye) & 1 \\ } \right\rbrack \\ \\ &=\left\lbrack \matrix{ -0.8321 & -0.3548 & -0.4264 & 0 \\ 0 & 0.7687 & -0.6396 & 0 \\ 0.5547 & -0.5322 & -0.6396 & 0 \\ 0 & 0 & 4.6904 & 1 \\ } \right\rbrack }\)

Because this view looks directly at the world origin, \(x_{axis}\) and \(y_{axis}\) are both perpendicular to \(eye\), so the first two translation terms are zero. The third term, \(4.6904\), is the distance from the eye to the origin.
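The "eye, at, up" construction can be sketched in a few lines, assuming row vectors and the axis order used in the equations above. The function name `look_at` and the small vector helpers are hypothetical names for this example. A useful sanity check for any view matrix is that the eye itself must land on the view-space origin, and the point being looked at must land on the positive \(z\)-axis.

```python
import math

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def normalize(v):
    length = math.sqrt(dot(v, v))
    return [c / length for c in v]

def look_at(eye, at, up):
    """Build the world-to-view matrix for row vectors."""
    z_axis = normalize(sub(at, eye))
    x_axis = normalize(cross(up, z_axis))
    y_axis = cross(z_axis, x_axis)   # already unit length
    return [[x_axis[0], y_axis[0], z_axis[0], 0],
            [x_axis[1], y_axis[1], z_axis[1], 0],
            [x_axis[2], y_axis[2], z_axis[2], 0],
            [-dot(x_axis, eye), -dot(y_axis, eye), -dot(z_axis, eye), 1]]

def transform(point, matrix):
    """Multiply a row vector by a matrix."""
    return [sum(p * row[j] for p, row in zip(point, matrix))
            for j in range(len(matrix[0]))]

view = look_at(eye=[2, 3, 3], at=[0, 0, 0], up=[0, 1, 0])
eye_in_view = transform([2, 3, 3, 1], view)
at_in_view = transform([0, 0, 0, 1], view)
print([round(c, 4) for c in eye_in_view])
print([round(c, 4) for c in at_in_view])
```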

Gaining Perspective

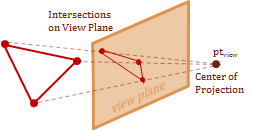

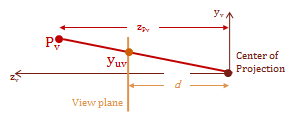

The final step is to project what we can see from the \(eye\) in our view space onto the view-plane. As I mentioned previously, the view-plane is commonly referred to as the \(uv\)-plane. The \(uv\) coordinate system refers to the 2D mapping on the \(uv\)-plane. The projection mapping that I demonstrate here places the \(uv\)-plane some distance \(d\) from the \(eye\), so that the view-space \(z\)-axis is parallel with the plane's normal.

In the context of the projection transform, the \(eye\) is commonly referred to by another name: the center of projection. That is because this final step finds, for each point in the view space, where the line from the center of projection to that point intersects the \(uv\)-plane.

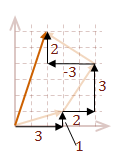

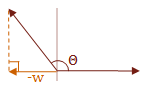

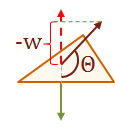

The cue that lets us perceive perspective with stereoscopic vision is called foreshortening. This effect is caused by objects appearing smaller the farther they are from our vantage point. Therefore, we can recreate the foreshortening in our image by scaling the \(x\) and \(y\) values based on their \(z\)-coordinate. Basically, the farther away an object is placed from the center of projection, the smaller it will appear.

The diagram above shows a side view of the \(y\) and \(z\) axes, looking along the \(x\)-axis. Notice the similar triangles that are created by the view plane. The smaller triangle on the bottom spans the distance \(d\), which is the distance from the center of projection to the view plane. The larger triangle on the top extends from the point \(Pv\) to the \(y\)-axis; this distance is the \(z\)-component value for the point \(Pv\). Using the property of similar triangles, we now have a geometric relationship that allows us to scale the location of the point for the view plane intersection.

\( \eqalign{ \frac{x_s}{d} &= \frac{x_v}{z_v} \\ \frac{y_s}{d} &= \frac{y_v}{z_v} \\ \\ x_s &= \frac{x_v}{z_v/d} \\ y_s &= \frac{y_v}{z_v/d} }\)

With this, we can define a perspective-based projection transform:

\( T_{project}=\left\lbrack \matrix{ 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 1/d \\ 0 & 0 & 0 & 0} \right\rbrack \)

This is where the homogenizing component \(w\) returns. This transform will have the effect of scaling the \(w\) component to create:

\( \eqalign{ X&= x_v \\ Y&= y_v \\ Z&= z_v \\ w&= z_v/d }\)

This is why, after the transform is applied, we must divide by \(w\) to recover the screen-space values:

\( \eqalign{ [\matrix{X & Y & Z & w}] &= [\matrix{x_v & y_v & z_v & 1}] T_{project}\\ \\ x_s&= X/w = \frac{x_v}{z_v/d} \\ y_s&= Y/w = \frac{y_v}{z_v/d} \\ z_s&= Z/w = d }\)

Scale the Image Plane

Here is one last piece of information that I think you will appreciate. The projection transform above defines a \(uv\) coordinate system that is \(1 \times 1\). Therefore, if you make the following adjustment, you will scale the unit-plane to match the size of your final image in pixels:

\( T_{project}=\left\lbrack \matrix{ width & 0 & 0 & 0 \\ 0 & height & 0 & 0 \\ 0 & 0 & 1 & 1/d \\ 0 & 0 & 0 & 0} \right\rbrack \)
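Putting the projection matrix and the divide by \(w\) together gives the sketch below. It assumes points are already in view space and that \(d\) is positive; the names `projection` and `project` are hypothetical, and `width` and `height` default to \(1\) so the same function covers both forms of \(T_{project}\) shown above.

```python
def transform(point, matrix):
    """Multiply a row vector by a matrix."""
    return [sum(p * row[j] for p, row in zip(point, matrix))
            for j in range(len(matrix[0]))]

def projection(d, width=1, height=1):
    """Perspective projection matrix for a view plane at distance d."""
    return [[width, 0, 0, 0],
            [0, height, 0, 0],
            [0, 0, 1, 1 / d],
            [0, 0, 0, 0]]

def project(point_view, d, width=1, height=1):
    """Project a view-space point onto the view plane."""
    x, y, z = point_view
    X, Y, Z, w = transform([x, y, z, 1], projection(d, width, height))
    if w == 0:
        raise ValueError("point has z = 0 and cannot be projected")
    return [X / w, Y / w, Z / w]

near = project((2, 4, 10), d=5)
far = project((2, 4, 20), d=5)
print(near)   # the same x and y land farther from the center when close
print(far)    # and nearer to the center when far away
```

The two calls use the same \(x\) and \(y\) values at different depths, which makes the foreshortening visible: doubling \(z\) halves the projected coordinates.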

Summary

We made it! With a little bit of knowledge from both Linear Algebra and Trigonometry, we have been able to construct and manipulate geometric models in 3D space, concatenate transform matrices into compact and efficient transformation definitions, and finally create a projection transform to create a two-dimensional image from a three-dimensional definition.

As you can probably guess, this is only the beginning. There is so much more to explore in the creation of computer graphics. Some of the more interesting topics that generally follow after the 3D projection is created are:

- Shading Models: Flat, Gouraud and Phong.

- Shadows

- Reflections

- Transparency and Refraction

- Textures

- Bump-mapping ...

The list goes on and on. I imagine that I will continue to write on this topic and address polygon fill algorithms and the basic shading models. However, I think things will be more interesting if I have code to demonstrate and possibly an interactive viewer for the browser. I have a few demonstration programs written in C++ for Windows, but I would prefer to create something that is a bit more portable.

Once I decide how I want to proceed I will return to this topic. However, if enough people express interest, I wouldn't mind continuing forward demonstrating with C++ Windows programs. Let me know what you think.

References

Watt, Alan, "Three-dimensional geometry in computer graphics," in 3D Computer Graphics, Harlow, England: Addison-Wesley, 1993

Foley, James, D., et al., "Geometrical Transformations," in Computer Graphics: Principles and Practice, 2nd ed. in C, Reading: Addison-Wesley, 1996

I previously introduced how to perform the basic matrix operations commonly used in 3D computer graphics. However, we haven’t yet reached anything that resembles graphics. I now introduce the concepts of geometry as it’s related to computer graphics. I intend to provide you with the remaining foundation necessary to be able to mathematically visualize the concepts at work. Unfortunately, we will still not be able to reach the "computer graphics" stage with this post; that must wait until the next entry.

This post covers a basic form of a polygonal mesh to represent models, points and Euclidean vectors. With the previous entry and this one, we will have all of the background necessary for me to cover matrix transforms in the next post, specifically the 3D projection transform, which provides the illusion of perspective. This will be everything that we need to create a wireframe viewer. For now, let's focus on the topics of this post.

Modeling the Geometry

We will use models composed of a mesh of polygons. All of the polygons will be defined as triangles. It is important to consistently define the points of each triangle either clockwise or counter-clockwise. This is because the definition order affects the direction a surface faces. If a single model mixes the order of point definitions, your rendering will have missing polygons.

The order of point definition for the triangle can be ignored if a surface normal is provided for each polygon. However, to keep things simple, I will only be demonstrating with basic models that are built from an index array structure. This data structure contains two arrays. The first array is a list of all of the vertices (points) in the model. The second array contains one three-element structure per triangle; each element is the index of one of the triangle's points in the first array. I define all of my triangles counter-clockwise while looking at the front of the surface.

Generally, your models should be defined at or near the origin. The rotation transformation that we will explore in the next post requires a pivot point, which is defined at the origin. Model geometries defined near the origin are also easier to work with when building models from smaller components and using instancing to create multiple copies of a single model.

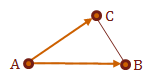

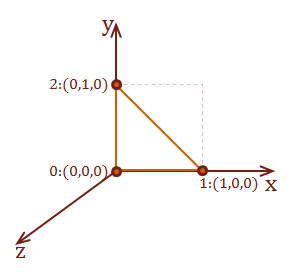

Here is a definition for a single triangle starting at the origin and adjacent to the Y plane. The final corner decreases for Y as it moves diagonally along the XZ plane:


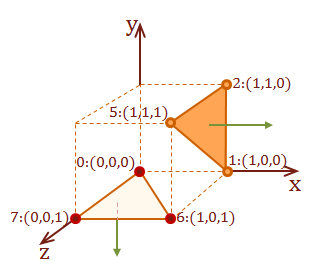

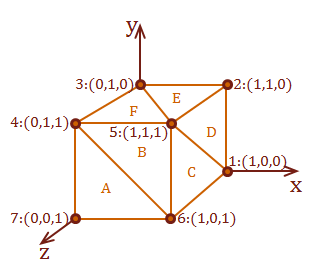

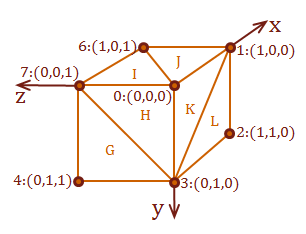

This definition represents a unit cube starting at the origin. A cube has 8 corners to hold six square-faces. It requires two triangles to represent each square. Therefore, there are 8 points used to define 12 triangles:
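As an illustrative sketch of the index array structure, here is one possible unit cube with 8 points and 12 triangles. The vertex numbering and the particular split of each square into two triangles are my own assumptions for this example, as is the use of right-handed axes; the winding is counter-clockwise when each face is viewed from outside the cube. The small check at the end confirms that every triangle's normal points away from the cube's center, which is exactly what a consistent point order buys you.

```python
# First array: every vertex (point) in the model.
vertices = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),   # z = 0 corners
    (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1),   # z = 1 corners
]

# Second array: three indices into `vertices` per triangle.
triangles = [
    (0, 2, 1), (0, 3, 2),   # z = 0 face
    (4, 5, 6), (4, 6, 7),   # z = 1 face
    (0, 1, 5), (0, 5, 4),   # y = 0 face
    (3, 6, 2), (3, 7, 6),   # y = 1 face
    (0, 4, 7), (0, 7, 3),   # x = 0 face
    (1, 2, 6), (1, 6, 5),   # x = 1 face
]

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def faces_outward(triangle, center=(0.5, 0.5, 0.5)):
    """True when the triangle's normal points away from the cube center."""
    a, b, c = (vertices[i] for i in triangle)
    normal = cross(sub(b, a), sub(c, a))
    centroid = [sum(axis) / 3 for axis in zip(a, b, c)]
    return dot(normal, sub(centroid, center)) > 0

print(all(faces_outward(t) for t in triangles))
```

If any one triangle listed its points in the opposite order, the check would fail for that triangle, and a renderer that culls back-facing surfaces would drop it from the image.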


Vectors

Recall from my previous post that a vector is a special type of matrix, in which there is only a single row or column. You may be aware of a different definition for the term "vector"; something like

NOUN

A quantity having direction as well as magnitude, especially determining the position of one point in space relative to another.

Oxford Dictionary

From this point forward, when I use the term "vector", I mean a Euclidean vector. We will even use single-row and single-column matrices to represent these vectors; we’re just going to refer to those structures as matrices to minimize the confusion.

Notational Convention

It is most common to see vectors represented as single-row matrices, as opposed to the standard mathematical notation which uses column matrices to represent vectors. It is important to remember this difference in notation based on what type of reference you are following.

The reason for this difference is that the row-form makes composing the transformation matrix more natural, as the operations are performed left-to-right. I discuss the transformation matrix in the next entry. As you will see, properly constructing transformation matrices is crucial to achieve the expected results, and anything that we can do to simplify this sometimes complex topic will improve our chances of success.

Representation

As the definition indicates, a vector has a direction and magnitude. There is a lot of freedom in that definition for representation, because it is like saying "I don’t know where I am, but I know which direction I am heading and how far I can go." Therefore, if we think of our vector as starting at the origin of a Cartesian grid, we could then represent a vector as a single point.

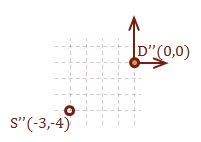

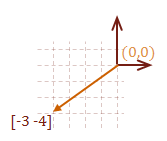

This is because, starting from the origin, the direction of the vector is towards the point. The magnitude can be calculated by using Pythagoras' Theorem to calculate the distance between two points. All of this information can be encoded in a compact, single-row matrix. As the definition indicates, we derive a vector from two points by subtracting one point from the other, using the inverse of matrix addition.

This will give us the direction to the destination point, relative to the starting point. The subtraction effectively acts as a translation of the vector's starting point to the origin.

For these first few vector examples, I am going to use 2D vectors because they are simpler to demonstrate and diagram; the only difference when moving to 3D vectors is that an extra field is added.

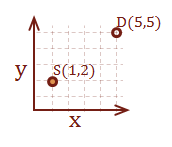

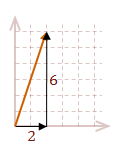

Here is a simple example with two points (2, 1) and (5, 5). The first step is to encode them in a matrix:

\( {\bf S} = \left\lbrack \matrix{2 & 1} \right\rbrack, {\bf D} = \left\lbrack \matrix{5 & 5} \right\rbrack \)

Let’s create two vectors, one that determines how to get to point D starting from S, and one to get to point S starting from D. The first step is to subtract the starting point from the destination point. Notice that if we were to subtract the starting point from itself, the result is the origin, (0, 0).

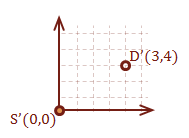

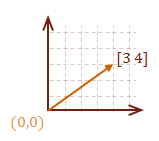

\( \eqalign{ \overrightarrow{SD} &= [ \matrix{5 & 5} ] - [ \matrix{2 & 1} ] \cr &= [ \matrix{3 & 4} ] } \)

\( \eqalign{ \overrightarrow{DS} &= [ \matrix{2 & 1} ] - [ \matrix{5 & 5} ] \cr &= [ \matrix{-3 & -4} ] } \)

Notice that the only difference between the two vectors is the direction. The magnitude of each vector is the same, as expected. Here is how to calculate the magnitude of a vector:

Given:

\( \eqalign{ v &= \matrix{ [a_1 & a_2 & \ldots & a_n]} \cr |v| &= \sqrt{\rm (a_1^2 + a_2^2 + \ldots + a_n^2)} } \)
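The example above translates directly into code. This is a minimal sketch with hypothetical helper names (`vector_between`, `magnitude`); it works for any number of dimensions because it simply walks the components.

```python
import math

def vector_between(start, destination):
    """Subtract the starting point from the destination point."""
    return [d - s for s, d in zip(start, destination)]

def magnitude(v):
    """Length of a vector: square root of the sum of squared components."""
    return math.sqrt(sum(c * c for c in v))

S = (2, 1)
D = (5, 5)

SD = vector_between(S, D)   # [3, 4]
DS = vector_between(D, S)   # [-3, -4]

print(SD, magnitude(SD))    # [3, 4] 5.0
print(DS, magnitude(DS))    # [-3, -4] 5.0
```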

Operations

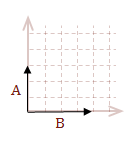

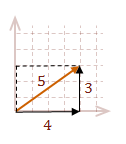

I covered scalar multiplication and matrix addition in my previous post. However, I wanted to briefly revisit the topic to demonstrate one way to think about vectors, which may help you develop a better intuition for the math. For the coordinate systems that we are working with, vectors are straight lines.

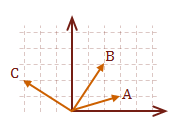

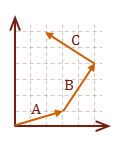

When you add two or more vectors together, you can think of them as moving the tail of each successive vector to the head of the previous vector. Then add the corresponding components.

\( \eqalign{ v &= A+B \cr |v| &= \sqrt{\rm 3^2 + 4^2} \cr &= 5 }\)

Regardless of the orientation of the vector, it can be decomposed into its individual contributions per axis. In our model for the vector, the contribution for each axis is the raw value defined in our matrix.

\( v = A+B+C \)

\( \eqalign{ |v| &= \sqrt{\rm (3+2-3)^2 + (1+3+2)^2} \cr &= \sqrt{\rm 2^2 + 6^2} \cr &= 2 \sqrt{\rm 10} }\)

Unit Vector

We can normalize a vector to create a unit vector, which is a vector that has a length of 1. The concept of the unit vector is introduced to reduce a vector to simply indicate its direction. This type of vector can now be used as a unit of measure to which other vectors can be compared. This also simplifies the task of deriving other important information when necessary.

To normalize a vector, divide the vector by its magnitude. In terms of the matrix operations that we have discussed, this is simply scalar multiplication of the vector with the inverse of its magnitude.

\( u = \cfrac{v}{ |v| } \)

\(u\) now contains the information for the direction of \(v\). With algebraic manipulation we can also have:

\( v = u |v| \)

This basically says the vector \(v\) is equal to the unit vector \(u\), which points in the direction of \(v\), multiplied by the magnitude of \(v\).
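Both directions of that relationship can be sketched in a few lines. The function names are my own for this example, and the guard against a zero-length vector is an added assumption, since such a vector has no direction to preserve.

```python
import math

def magnitude(v):
    return math.sqrt(sum(c * c for c in v))

def normalize(v):
    """Scale a vector by the reciprocal of its magnitude."""
    length = magnitude(v)
    if length == 0:
        raise ValueError("a zero-length vector has no direction")
    return [c / length for c in v]

v = [3, 4]
u = normalize(v)

print(u)                                   # direction only
print(magnitude(u))                        # length of the unit vector
print([c * magnitude(v) for c in u])       # u |v| recovers v
```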

Dot Product

The dot product is used for detecting visibility and shading of a surface. The dot product operation is shown below:

\( \eqalign{ w &= u \cdot v \cr &= u_1 v_1 + u_2 v_2 + \cdots + u_n v_n }\)

This formula may look familiar. That is because this is a special case in matrix multiplication called the inner product, which looks like this (remember, we are representing vectors as a row matrix):

\( \eqalign{ u \cdot v &= u v^T \cr &= \matrix {[u_1 & u_2 & \cdots & u_n]} \left\lbrack \matrix{v_1 \\ v_2 \\ \vdots \\ v_n } \right\rbrack }\)

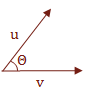

We will use the dot product on two unit vectors to help us determine the angle between these vectors; that’s right, angle. We can do this because of the cosine rule from Trigonometry. The equation that we can use is as follows:

\( \cos \Theta = \cfrac{u \cdot v} {|u||v|} \)

To convert this to the raw angle, \(\Theta\), apply the inverse function of cosine, arccos, to the value of \(\cos \Theta\). In C and C++ the name of the function is acos().

\( \Theta = \arccos(\cos \Theta) \)

So what is actually occurring when we calculate the dot product that allows us to get an angle?

We are essentially calculating the projection of u onto v. This gives us enough information to manipulate the Trigonometric properties to derive \( \Theta \).

I would like to make one final note regarding the dot product. Many times it is only necessary to know whether the angle between two vectors is less than, equal to, or greater than \( 90° \), and the sign of the dot product tells us this. The following table shows the relationship between the dot product of two vectors and the value of the angle, \( \Theta \), which is in the range \( 0° \text{ to } 180° \). If you like radians, that is \( 0 \text{ to } \pi \).

\( w = u \cdot v = f(u,v) \)

\( f(u,v) = \cases{ w \gt 0 & \text { if } \Theta \lt 90° \cr w = 0 & \text { if } \Theta = 90° \cr w \lt 0 & \text { if } \Theta \gt 90° }\)

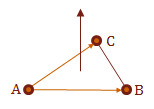

Cross Product

The cross product is used to calculate a vector that is perpendicular to a surface. The name for this vector is the surface normal. The surface normal indicates which direction the surface is facing. Therefore, it is used quite extensively for shading and visibility as well.

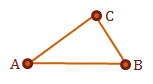

We have reached the point where we need to start using examples and diagrams with three dimensions. We will use the three points that define our triangular surface to create two vectors. Remember, it is important to use consistent definitions when creating models.

The first step is to calculate our planar vectors. What does that mean? We want to create two vectors that are coplanar (on the same plane), so that we may use them to calculate the surface normal. All that we need to be able to do this are three points that are non-collinear, and we have this with each triangle definition in our polygonal mesh model.

If we have defined a triangle \(\triangle ABC\), we will define two vectors, \(u = \overrightarrow{AB}\) and \(v = \overrightarrow{AC}\).

If we consistently defined the triangle surfaces in our model, we should now be able to take the cross product of \(u \times v\) to receive the surface normal, \(N_p\), of \(\triangle ABC\). Again, the normal vector is perpendicular to its surface.

In practice, this tells us which direction the surface is facing. We would combine this with the dot product to help us determine if we are interested in this surface for the current view.

So how do you calculate the cross product?

Here is what you need to know in order to calculate the surface normal vector:

\(\eqalign { N_p &= u \times v \cr &= [\matrix{(u_2v_3 - u_3v_2) & (u_3v_1 - u_1v_3) & (u_1v_2 - u_2v_1)}] }\)

To help you remember how to perform the calculation, I have re-written it in parts, and used \(x\), \(y\), and \(z\) rather than the index values.

\(\eqalign { x &= u_yv_z - u_zv_y \cr y &= u_zv_x - u_xv_z \cr z &= u_xv_y - u_yv_x }\)

Let's work through an example to demonstrate the cross product. We will use surface A from our axis-aligned cube model that we constructed at the beginning of this post. Surface A lies in a plane that is parallel to the XY plane. How do we know this? Because all of the points have the same \(z\)-coordinate value of 1. Therefore, we should expect that our calculation for \(N_p\) of surface A will be a \(z\) axis-aligned vector pointing in the positive direction.

Step 1: Calculate our surface description vectors \(u\) and \(v\):

\( Surface_A = pt_7: (0,0,1), pt_6: (1,0,1), \text{ and } pt_4: (0,1,1) \)

|

\( \eqalign { u &= pt_6 - pt_7 \cr &= [\matrix{1 & 0 & 1}] - [\matrix{0 & 0 & 1}] \cr &=[\matrix{\color{red}{1} & \color{green}{0} & \color{blue}{0}}] }\) |

\( \eqalign { v &= pt_4 - pt_7 \cr &= [\matrix{0 & 1 & 1}] - [\matrix{0 & 0 & 1}] \cr &=[\matrix{\color{red}{0} & \color{green}{1} & \color{blue}{0}}] }\) |

Step 2: Calculate \(u \times v\):

\(N_p = [ \matrix{x & y & z} ]\text{, where:}\)

|

\( \eqalign { x &= \color{green}{u_y}\color{blue}{v_z} - \color{blue}{u_z}\color{green}{v_y} \cr &= \color{green}{0} \cdot \color{blue}{0} - \color{blue}{0} \cdot \color{green}{1} \cr &= 0 }\) |

\( \eqalign { y &= \color{blue}{u_z}\color{red}{v_x} - \color{red}{u_x}\color{blue}{v_z} \cr &= \color{blue}{0} \cdot \color{red}{0} - \color{red}{1} \cdot \color{blue}{0} \cr &= 0 }\) |

\( \eqalign { z &= \color{red}{u_x}\color{green}{v_y} - \color{green}{u_y}\color{red}{v_x} \cr &= \color{red}{1} \cdot \color{green}{1} - \color{green}{0} \cdot \color{red}{0} \cr &= 1 }\) |

\(N_p = [ \matrix{0 & 0 & 1} ]\)

This is a \(z\) axis-aligned vector pointing in the positive direction, which is what we expected to receive.
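The same calculation can be sketched in C++. The `Vec3` alias and the function names are illustrative choices of mine, and `surface_normal` assumes the \(u = \overrightarrow{AB}\), \(v = \overrightarrow{AC}\) convention described above.

```cpp
#include <array>

// A 3D vector or point stored as x, y, z (an illustrative type for this sketch).
using Vec3 = std::array<double, 3>;

// Returns the vector that points from b to a.
Vec3 subtract(const Vec3& a, const Vec3& b)
{
  return Vec3{ a[0] - b[0], a[1] - b[1], a[2] - b[2] };
}

// Returns u x v, using the x, y, z form of the formula.
Vec3 cross(const Vec3& u, const Vec3& v)
{
  return Vec3{ u[1] * v[2] - u[2] * v[1],    // x = u_y v_z - u_z v_y
               u[2] * v[0] - u[0] * v[2],    // y = u_z v_x - u_x v_z
               u[0] * v[1] - u[1] * v[0] };  // z = u_x v_y - u_y v_x
}

// Returns the surface normal of triangle ABC, with u = AB and v = AC.
Vec3 surface_normal(const Vec3& a, const Vec3& b, const Vec3& c)
{
  return cross(subtract(b, a), subtract(c, a));
}
```

Calling `surface_normal` with \(pt_7\), \(pt_6\), and \(pt_4\) in that order reproduces the \(N_p\) that we calculated by hand. Swapping the last two points reverses the direction of the normal, which is why consistent surface definitions matter.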

Notation Formality

If you look at other resources to learn more about the cross product, you will find that it is usually defined as a sum of three terms, each of which is multiplied by one of the standard axis-aligned unit vectors \(i=[\matrix{1&0&0}]\), \(j=[\matrix{0&1&0}]\), and \(k=[\matrix{0&0&1}]\) to get the final vector. The difference between what I presented and the form with the standard vectors, \(i\), \(j\), and \(k\), is that I omitted a step by placing the result calculations directly in a new vector. I wanted you to be aware of this discrepancy between what I have demonstrated and what is typically taught in the classroom.

Apply What We Have Learned

We have discovered quite a few concepts between the two posts that I have written regarding the basic math and geometry involved with computer graphics. Let's apply this knowledge and solve a useful problem. Detecting if a surface is visible from a specific point-of-view is a fundamental problem that is used extensively in this domain. Since we have all of the tools necessary to solve this, let's run through two examples.

For the following examples we will specify the view-point as \(pt_{eye} = [\matrix{3 & 2 & 3}]:\)

Also, refer to this diagram for the surfaces that we will test for visibility from the view-point. The side surface is surface \(D\) from the cube model, and the hidden surface is the bottom-facing surface \(J\).

Detect a visible surface

Step 1: Calculate our surface description vectors \(u\) and \(v\):

\( \eqalign{ Surface_D: &pt_5: (1,1,1), \cr &pt_1: (1,0,0), \cr &pt_2: (1,1,0) } \)

|

\( \eqalign { u &= pt_1 - pt_5 \cr &= [\matrix{1 & 0 & 0}] - [\matrix{1 & 1 & 1}] \cr &=[\matrix{0 & -1 & -1}] }\) |

\( \eqalign { v &= pt_2 - pt_5 \cr &= [\matrix{1 & 1 & 0}] - [\matrix{1 & 1 & 1}] \cr &=[\matrix{0 & 0 & -1}] }\) |

Step 2: Calculate \(u \times v\):

\(N_{pD} = [ \matrix{x & y & z} ]\text{, where:}\)

|

\( \eqalign { x &= -1 \cdot (-1) - (-1) \cdot 0 \cr &= 1 }\) |

\( \eqalign { y &= -1 \cdot 0 - 0 \cdot (-1) \cr &= 0 }\) |

\( \eqalign { z &= 0 \cdot 0 - (-1) \cdot 0 \cr &= 0 }\) |

\(N_{pD} = [ \matrix{1 & 0 & 0} ]\)

Step 3: Calculate vector to the eye:

To calculate a vector to the eye, we need to select a point on the target surface and create a vector from that point to the eye. For this example, we will select \(pt_5\).

\( \eqalign{ \overrightarrow{view_D} &= pt_{eye} - pt_5\cr &= [ \matrix{3 & 2 & 3} ] - [ \matrix{1 & 1 & 1} ]\cr &= [ \matrix{2 & 1 & 2} ] }\)

Step 4: Normalize the view-vector:

Before we can use this vector in a dot product, we must normalize the vector:

\( \eqalign{ |eye_D| &= \sqrt{\rm 2^2 + 1^2 + 2^2} \cr &= \sqrt{\rm 4 + 1 + 4} \cr &= \sqrt{\rm 9} \cr &= 3 \cr \cr eye_u &= \cfrac{eye_D}{|eye_D|} \cr &= \cfrac{1}3 [\matrix{2 & 1 & 2}] \cr &= \left\lbrack\matrix{\cfrac{2}3 & \cfrac{1}3 & \cfrac{2}3} \right\rbrack }\)

Step 5: Calculate the dot product of the view-vector and surface normal:

\( \eqalign{ w &= eye_{u} \cdot N_{pD} \cr &= \cfrac{2}3 \cdot 1 + \cfrac{1}3 \cdot 0 + \cfrac{2}3 \cdot 0 \cr &= \cfrac{2}3 }\)

Step 6: Test for visibility:

|

\(w \gt 0\), therefore \(Surface_D\) is visible. |

|

Detect a surface that is not visible

Step 1: Calculate our surface description vectors \(u\) and \(v\):

\( \eqalign{ Surface_J: &pt_6: (1,0,1), \cr &pt_0: (0,0,0), \cr &pt_1: (1,0,0) } \)

|

\( \eqalign { u &= pt_0 - pt_6 \cr &= [\matrix{0 & 0 & 0}] - [\matrix{1 & 0 & 1}] \cr &=[\matrix{-1 & 0 & -1}] }\) |

\( \eqalign { v &= pt_1 - pt_6 \cr &= [\matrix{1 & 0 & 0}] - [\matrix{1 & 0 & 1}] \cr &=[\matrix{0 & 0 & -1}] }\) |

Step 2: Calculate \(u \times v\):

\(N_{pJ} = [ \matrix{x & y & z} ]\text{, where:}\)

|

\( \eqalign { x &= 0 \cdot (-1) - (-1) \cdot 0 \cr &= 0 }\) |

\( \eqalign { y &= -1 \cdot 0 - (-1) \cdot (-1) \cr &= -1 }\) |

\( \eqalign { z &= (-1) \cdot 0 - 0 \cdot 0 \cr &= 0 }\) |

\(N_{pJ} = [ \matrix{0 & -1 & 0} ]\)

Step 3: Calculate vector to the eye:

For this example, we will select \(pt_6\).

\( \eqalign{ \overrightarrow{view_J} &= pt_{eye} - pt_6\cr &= [ \matrix{3 & 2 & 3} ] - [ \matrix{1 & 0 & 1} ]\cr &= [ \matrix{2 & 2 & 2} ] }\)

Step 4: Normalize the view-vector:

Before we can use this vector in a dot product, we must normalize the vector:

\( \eqalign{ |eye_J| &= \sqrt{\rm 2^2 + 2^2 + 2^2} \cr &= \sqrt{\rm 4 + 4 + 4} \cr &= \sqrt{\rm 12} \cr &= 2 \sqrt{\rm 3} \cr \cr eye_u &= \cfrac{eye_J}{|eye_J|} \cr &= \cfrac{1}{2 \sqrt 3} [\matrix{2 & 2 & 2}] \cr &= \left\lbrack\matrix{\cfrac{1}{\sqrt 3} & \cfrac{1}{\sqrt 3} & \cfrac{1}{\sqrt 3}} \right\rbrack }\)

Step 5: Calculate the dot product of the view-vector and surface normal:

\( \eqalign{ w &= eye_{u} \cdot N_{pJ} \cr &= \cfrac{1}{\sqrt 3} \cdot 0 + \cfrac{1}{\sqrt 3} \cdot (-1) + \cfrac{1}{\sqrt 3} \cdot 0 \cr &= -\cfrac{1}{\sqrt 3} }\)

Step 6: Test for visibility:

|

\(w \lt 0\), therefore \(Surface_J\) is not visible. |

|
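The six steps from both examples can be condensed into one small function. This is only a sketch with names that I have made up for illustration. It also skips Step 4, because dividing the view-vector by its (positive) magnitude cannot change the sign of the dot product, and the sign is all that this test uses.

```cpp
#include <array>

// A 3D vector or point stored as x, y, z (an illustrative type for this sketch).
using Vec3 = std::array<double, 3>;

Vec3 subtract(const Vec3& a, const Vec3& b)
{
  return Vec3{ a[0] - b[0], a[1] - b[1], a[2] - b[2] };
}

double dot(const Vec3& u, const Vec3& v)
{
  return u[0] * v[0] + u[1] * v[1] + u[2] * v[2];
}

Vec3 cross(const Vec3& u, const Vec3& v)
{
  return Vec3{ u[1] * v[2] - u[2] * v[1],
               u[2] * v[0] - u[0] * v[2],
               u[0] * v[1] - u[1] * v[0] };
}

// Returns true when the triangle (a, b, c) faces the eye point.
// The planar vectors are u = b - a and v = c - a, and the view-vector
// is measured from point a, matching the worked examples.
bool is_facing(const Vec3& a, const Vec3& b, const Vec3& c, const Vec3& eye)
{
  const Vec3 normal = cross(subtract(b, a), subtract(c, a));
  const Vec3 view = subtract(eye, a);

  // w > 0 means the angle between the normal and the view-vector
  // is less than 90 degrees.
  return dot(view, normal) > 0.0;
}
```

Passing \(pt_5\), \(pt_1\), \(pt_2\) and the eye point for surface D yields `true`, and passing \(pt_6\), \(pt_0\), \(pt_1\) for surface J yields `false`.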

What has this example demonstrated?

Surprisingly, this basic task demonstrates every concept that we have learned from the two posts up to this point:

- Matrix Addition: Euclidean vector creation

- Scalar Multiplication: Unit vector calculation, multiplying by \(1 / |v| \).

- Matrix Multiplication: Dot Product calculation. Square-matrix multiplication will be used in the next post.

- Geometric Model Representation: The triangle surface representation provided the points for our calculations.

- Euclidean Vector: Calculation of each surface's planar vectors, as well as the view vector.

- Cross Product: Calculation of the surface normal, \(N_p\).

- Unit Vector: Preparation of vectors for dot product calculation.

- Dot Product: Used the sign from this calculation to test for visibility of a surface.

Summary

Math can be difficult sometimes, especially with all of the foreign notation. However, with a bit of practice the notation will soon become second nature and many calculations can be performed in your head, similar to basic arithmetic with integers. While many graphics APIs hide these calculations from you, don't be fooled because they still exist and must be performed to display the three-dimensional images on your screen. By understanding the basic concepts that I have already demonstrated, you will be able to better troubleshoot your graphics programs when something goes wrong, even if you rely solely on a graphics library to abstract the complex mathematics.

There is only one more entry to go, and we will have enough basic knowledge of linear algebra, Euclidean geometry, and computer displays to be able to create, manipulate and display 3D models programmatically. The next entry discusses how to translate, scale and rotate geometric models. It also describes what must occur to create the illusion of a three-dimensional image on a two-dimensional display with the projection transform.

References

Watt, Alan, "Three-dimensional geometry in computer graphics," in 3D Computer Graphics, Harlow, England: Addison-Wesley, 1993

Three-dimensional computer graphics is an exciting aspect of computing because of the amazing visual effects that can be created for display. All of this is created from an enormous number of calculations that manipulate virtual models, which are constructed from some form of geometric definition. While the math involved for some aspects of computer graphics and animation can become quite complex, the fundamental mathematics that is required is very accessible. Beyond learning some new mathematical notation, a basic three-dimensional view can be constructed with algorithms that only require basic arithmetic and a little bit of trigonometry.

I demonstrate how to perform some basic operations with two key constructs for 3D graphics, without the rigorous mathematical introduction. Hopefully the level of detail that I use is enough for anyone who doesn't have a strong background in math, but would very much like to play with 3D APIs. I introduce the math that you must be familiar with in this entry, and in two future posts I demonstrate how to manipulate geometric models and apply the math towards the display of a 3D image.

Linear Algebra

The type of math that forms the basis of 3D graphics is called linear algebra. In general, linear algebra is quite useful for solving systems of linear equations. Rather than go into the details of linear algebra, and all of its capabilities, we are going to simply focus on two related constructs that are used heavily within the topic: the matrix and the vector.

Matrix

The Matrix provides an efficient mechanism to translate, scale, rotate, and convert between different coordinate systems. This is used extensively to manipulate the geometric models for environment calculations and display.

These are all examples of valid matrices:

\( \left\lbrack \matrix{a & b & c \cr d & e & f} \right\rbrack, \left\lbrack \matrix{10 & -27 \cr 0 & 13 \cr 7 & 17} \right\rbrack, \left\lbrack \matrix{x^2 & 2x \cr 0 & e^x} \right\rbrack\)

Square matrices, that is, matrices with the same number of rows and columns, are particularly important. There are some operations that can only be performed on a square matrix, which I will introduce shortly. The notation for matrices, in general, uses capital letters as the variable names. Matrix dimensions can be specified as a shorthand notation, which is also used to identify an indexed position within the matrix. As far as I am aware, row-major indexing is always used for consistency; that is, the first index represents the row, and the second represents the column.

\( A= [a_{ij}]= \left\lbrack \matrix{a_{11} & a_{12} & \ldots & a_{1n} \cr a_{21} & a_{22} & \ldots & a_{2n} \cr \vdots & \vdots & \ddots & \vdots \cr a_{m1} & a_{m2} & \ldots & a_{mn} } \right\rbrack \)

Vector

The vector is a special case of a matrix, where there is only a single row or a single column; also a common practice is to use lowercase letters to represent vectors:

\( u= \left\lbrack \matrix{u_1 & u_2 & u_3} \right\rbrack \), \( v= \left\lbrack \matrix{v_1\cr v_2\cr v_3} \right\rbrack\)

Operations

The mathematical shorthand notation for matrices is very elegant. It simplifies equations that would otherwise be quite cumbersome. There are only a few operations that we are concerned with to allow us to get started. Each operation has a basic algorithm to follow to perform a calculation, and the algorithm easily scales with the dimensions of a matrix.

Furthermore, the relationship between math and programming is not always clear. I have a number of colleagues that are excellent programmers and yet they do not consider their math skills very strong. I think that it could be helpful to many to see the conversion of the matrix operations that I just described from mathematical notation into code form. Once I demonstrate how to perform an operation with the mathematical notation and algorithmic steps, I will also show a basic C++ implementation of the concept to help you understand how these concepts map to actual code.

The operations that I implement below assume a Matrix class with the following interface:

C++

    #include <cstddef>
    #include <vector>

    class Matrix
    {
    public:
      // Ctor and Dtor omitted

      // Calculate and return a reference to the specified element.
      double& element(size_t row, size_t column);

      // Read-only access, needed when the Matrix is const.
      double element(size_t row, size_t column) const;

      // Resizes this Matrix to have the specified size.
      void resize(size_t row, size_t column);

      // Returns the number of rows.
      size_t rows() const;

      // Returns the number of columns.
      size_t columns() const;

      // Compound operators that are implemented later in this entry.
      void operator+=(const Matrix& rhs);
      void operator*=(const double scalar);

    private:
      std::vector<double> data;
    };
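The interface does not show how `element()` locates a value inside of the single `data` array. One plausible approach, and this is purely an assumption on my part, is to store the rows one after another and convert the 1-based row and column from the math notation into a 0-based offset. The helper name `flat_index` is hypothetical:

```cpp
#include <cstddef>

// Maps a 1-based (row, column) position to a 0-based offset into an
// array that stores the matrix one row after another.
// `columns` is the number of columns in the matrix.
// Assumption: row >= 1 and 1 <= column <= columns.
std::size_t flat_index(std::size_t row, std::size_t column, std::size_t columns)
{
  // Skip all of the complete rows that come before this one,
  // then step across to the requested column.
  return (row - 1) * columns + (column - 1);
}
```

For a matrix with four columns, the element \(a_{23}\) would be found at offset `flat_index(2, 3, 4)`, which is 6.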

Transpose

Transpose is a unary operation that is performed on a single matrix, and it is represented by adding a superscript T to the target matrix.

For example, the transpose of a matrix A is represented with \(A^T\).

The transpose "flips" the orientation of the matrix so that each row becomes a column, and each original column is transformed into a row. I think that a few examples will make this concept more clear.

\( A= \left\lbrack \matrix{a & b & c \cr d & e & f \cr g & h & i} \right\rbrack \)

\(A^T= \left\lbrack \matrix{a & d & g \cr b & e & h \cr c & f & i} \right\rbrack \)

The resulting matrix will contain the same set of values as the original matrix, only their position in the matrix changes.

\( B= \left\lbrack \matrix{1 & 25 & 75 & 100\cr 0 & 5 & -50 & -25\cr 0 & 0 & 10 & 22} \right\rbrack \)

\(B^T= \left\lbrack \matrix{1 & 0 & 0 \cr 25 & 5 & 0 \cr 75 & -50 & 10 \cr 100 & -25 & 22} \right\rbrack \)

It is very common to transpose a matrix, including vectors, before performing other operations. The reason will become clear for matrix multiplication.

\( u= \left\lbrack \matrix{u_1 & u_2 & u_3} \right\rbrack \)

\(u^T= \left\lbrack \matrix{u_1 \cr u_2 \cr u_3} \right\rbrack \)
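Here is a sketch of the transpose in code. To keep the example self-contained I have used a nested `std::vector` with 0-based indices rather than the Matrix class, and the `Grid` alias is a name that I made up for this purpose. It assumes that every row has the same number of columns.

```cpp
#include <cstddef>
#include <vector>

// Row-major storage: grid[row][column], with 0-based indices.
// An illustrative type for this sketch, not the Matrix class.
using Grid = std::vector<std::vector<double>>;

// Returns the transpose of m: each row of m becomes a column of the result.
// Assumption: every row of m has the same length.
Grid transpose(const Grid& m)
{
  const std::size_t rows = m.size();
  const std::size_t columns = (rows == 0) ? 0 : m[0].size();

  // The result has the dimensions swapped.
  Grid result(columns, std::vector<double>(rows));

  for (std::size_t r = 0; r < rows; ++r)
  {
    for (std::size_t c = 0; c < columns; ++c)
    {
      result[c][r] = m[r][c];
    }
  }

  return result;
}
```

Applying `transpose` twice returns the original matrix.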

Matrix Addition

Addition can only be performed between two matrices that are the same size. By size, we mean that the number of rows and columns are the same for each matrix. Addition is performed by adding the values of the corresponding positions of both matrices. The sum of the values creates a new matrix that is the same size as the original two matrices provided to the add operation.

If

\( A= \left\lbrack \matrix{0 & -2 & 4 \cr -6 & 8 & 0 \cr 2 & -4 & 6} \right\rbrack, B= \left\lbrack \matrix{1 & 3 & 5 \cr 7 & 9 & 11 \cr 13 & 15 & 17} \right\rbrack \)

Then

\(A+B=\left\lbrack \matrix{1 & 1 & 9 \cr 1 & 17 & 11 \cr 15 & 11 & 23} \right\rbrack \)

The sizes of these matrices do not match in their current form.

\(U= \left\lbrack \matrix{4 & -8 & 5}\right\rbrack, V= \left\lbrack \matrix{-1 \\ 5 \\ 4}\right\rbrack \)

However, if we take the transpose of one of them, their sizes will match and they can then be added together. The size of the result matrix depends upon which matrix from the original expression we transpose:

\(U^T+V= \left\lbrack \matrix{3 \\ -3 \\ 9} \right\rbrack \)

Or

\(U+V^T= \left\lbrack \matrix{3 & -3 & 9} \right\rbrack \)

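Matrix addition has the same algebraic properties as the addition of two scalar values, where \(0\) is the zero matrix of the same size as \(A\):

Commutative Property:

\( A+B=B+A \)

Associative Property:

\( (A+B)+C=A+(B+C) \)

Identity Property:

\( A+0=A \)

Inverse Property:

\( A+(-A)=0 \)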

The code required to implement matrix addition is relatively simple. Here is an example for the Matrix class definition that I presented earlier:

C++

    void Matrix::operator+=(const Matrix& rhs)
    {
      // Only the total element count is compared here; a stricter check
      // would also compare the number of rows and columns.
      if (rhs.data.size() == data.size())
      {
        // We can simply add each corresponding element
        // in the matrix element data array.
        for (size_t index = 0; index < data.size(); ++index)
        {
          data[index] += rhs.data[index];
        }
      }
    }

    Matrix operator+( const Matrix& lhs,
                      const Matrix& rhs)
    {
      Matrix result(lhs);
      result += rhs;

      return result;
    }

Scalar Multiplication

Scalar multiplication allows a single scalar value to be multiplied with every entry within a matrix. The result matrix is the same size as the matrix provided to the scalar multiplication expression:

If

\( A= \left\lbrack \matrix{3 & 6 & -9 \cr 12 & -15 & -18} \right\rbrack \)

Then

\( \frac{1}3 A= \left\lbrack \matrix{1 & 2 & -3 \cr 4 & -5 & -6} \right\rbrack, 0A= \left\lbrack \matrix{0 & 0 & 0 \cr 0 & 0 & 0} \right\rbrack, -A= \left\lbrack \matrix{-3 & -6 & 9 \cr -12 & 15 & 18} \right\rbrack, \)

Scalar multiplication with a matrix exhibits these properties, where c and d are scalar values:

Distributive Property:

\( c(A+B)=cA+cB \), \( (c+d)A=cA+dA \)

Identity Property:

\( 1A=A \)

The implementation for scalar multiplication is even simpler than addition.

Note: this implementation only allows the scalar value to appear before the Matrix object in multiplication expressions, which is how the operation is represented in math notation:

C++

    void Matrix::operator*=(const double scalar)
    {
      // Multiply every element by the scalar value.
      for (size_t index = 0; index < data.size(); ++index)
      {
        data[index] *= scalar;
      }
    }

    Matrix operator*( const double scalar,
                      const Matrix& rhs)
    {
      Matrix result(rhs);
      result *= scalar;

      return result;
    }

Matrix Multiplication

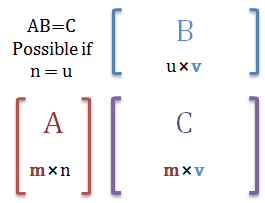

Everything seems very simple with matrices, at least once you get used to the new structure. Then you are introduced to matrix multiplication. The algorithm for multiplication is not difficult; however, it is much more labor intensive compared to the other operations that I have introduced to you. There are also a few more restrictions on the parameters for multiplication to be valid. Finally, unlike the addition operator, the matrix multiplication operator does not have all of the same properties as the multiplication operator for scalar values; specifically, the order of parameters matters.

Input / Output

Let's first address what you need to be able to multiply matrices, and what type of matrix you can expect as output. Once we have addressed the structure, we will move on to the process.

Given the following two matrices:

\( A= \left\lbrack \matrix{a_{11} & \ldots & a_{1n} \cr \vdots & \ddots & \vdots \cr a_{m1} & \ldots & a_{mn} } \right\rbrack, B= \left\lbrack \matrix{b_{11} & \ldots & b_{1v} \cr \vdots & \ddots & \vdots \cr b_{u1} & \ldots & b_{uv} } \right\rbrack \)

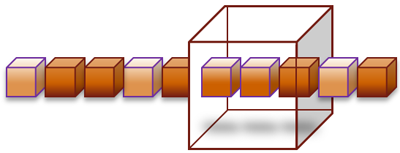

A valid product for \( AB=C \) is only possible if the number of columns \( n \) in \( A \) is equal to the number of rows \( u \) in \( B \). The resulting matrix \( C \) will have the dimensions \( m \times v \).

\( AB=C= \left\lbrack \matrix{c_{11} & \ldots & c_{1v} \cr \vdots & \ddots & \vdots \cr c_{m1} & \ldots & c_{mv} } \right\rbrack, \)

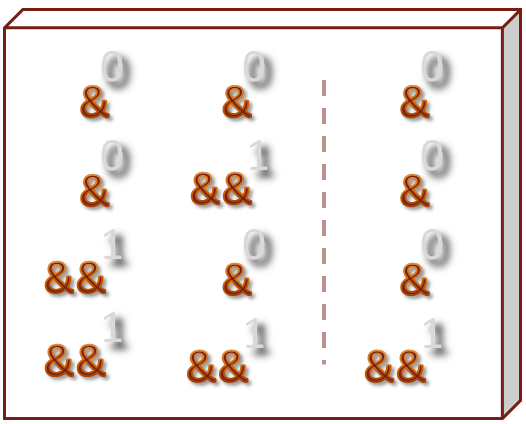

Let's summarize this in a different way, hopefully this arrangement will make the concept more intuitive:

One last form of the rules for matrix multiplication:

- The number of columns, \(n\), in matrix \( A \) must be equal to the number of rows, \(u\), in matrix \( B \):

\( n = u \)

- The output matrix \( C \) will have the number of rows, \(m\), in \(A\), and the number of columns, \(v\), in \(B\):

\( m \times v \)

- \(m\) and \(v\) do not have to be equal. The only requirement is that they are both greater than zero:

\( m \gt 0,\)

\(v \gt 0 \)

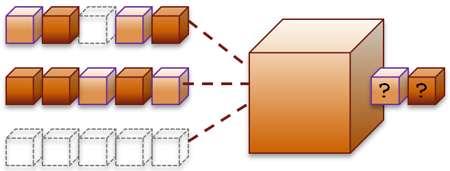

How to Multiply

To calculate a single entry in the output matrix, we pair each element in a row of the first matrix with the element in the corresponding position of a column of the second matrix, multiply each pair, and add all of these products together. The row that we use from the first matrix, \(A\), is the row of the element that we are calculating in \(C\). Similarly, the column that we use from the second matrix, \(B\), is the column of the element that we are calculating in \(C\).

More succinctly, we can say we are multiplying rows into columns.

For example:

\( A= \left\lbrack \matrix{a_{11} & a_{12} & a_{13} \cr a_{21} & a_{22} & a_{23}} \right\rbrack, B= \left\lbrack \matrix{b_{11} & b_{12} & b_{13} & b_{14} \cr b_{21} & b_{22} & b_{23} & b_{24} \cr b_{31} & b_{32} & b_{33} & b_{34} } \right\rbrack \)

The number of columns in \(A\) is \(3\) and the number of rows in \(B\) is \(3\), therefore, we can perform this operation. The size of the output matrix will be \(2 \times 4\).

Here the row from \(A\) and the column from \(B\) that are used to calculate the element \(c_{11}\) in \(C\) are marked, followed by the formula for \(c_{11}\):

\( \left\lbrack \matrix{\color{#B11D0A}{a_{11}} & \color{#B11D0A}{a_{12}} & \color{#B11D0A}{a_{13}} \cr a_{21} & a_{22} & a_{23}} \right\rbrack \times \left\lbrack \matrix{\color{#B11D0A}{b_{11}} & b_{12} & b_{13} & b_{14} \cr \color{#B11D0A}{b_{21}} & b_{22} & b_{23} & b_{24} \cr \color{#B11D0A}{b_{31}} & b_{32} & b_{33} & b_{34} } \right\rbrack = \left\lbrack \matrix{\color{#B11D0A}{c_{11}} & c_{12} & c_{13} & c_{14}\cr c_{21} & c_{22} & c_{23} & c_{24} } \right\rbrack \)

\( c_{11}= (a_{11}\times b_{11}) + (a_{12}\times b_{21}) + (a_{13}\times b_{31}) \)

To complete the multiplication, we need to calculate these other seven values \( c_{12}, c_{13}, c_{14}, c_{21}, c_{22}, c_{23}, c_{24}\). Here is another example for the element \(c_{23}\):

\( \left\lbrack \matrix{ a_{11} & a_{12} & a_{13} \cr \color{#B11D0A}{a_{21}} & \color{#B11D0A}{a_{22}} & \color{#B11D0A}{a_{23}} } \right\rbrack \times \left\lbrack \matrix{b_{11} & b_{12} & \color{#B11D0A}{b_{13}} & b_{14} \cr b_{21} & b_{22} & \color{#B11D0A}{b_{23}} & b_{24} \cr b_{31} & b_{32} & \color{#B11D0A}{b_{33}} & b_{34} } \right\rbrack = \left\lbrack \matrix{c_{11} & c_{12} & c_{13} & c_{14}\cr c_{21} & c_{22} & \color{#B11D0A}{c_{23}} & c_{24} } \right\rbrack \)

\( c_{23}= (a_{21}\times b_{13}) + (a_{22}\times b_{23}) + (a_{23}\times b_{33}) \)

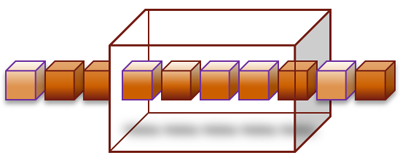

Notice how the size of the output matrix changes. Based on this and the size of the input matrices you can end up with some interesting results:

\( \left\lbrack \matrix{a_{11} \cr a_{21} \cr a_{31} } \right\rbrack \times \left\lbrack \matrix{ b_{11} & b_{12} & b_{13} } \right\rbrack = \left\lbrack \matrix{c_{11} & c_{12} & c_{13} \cr c_{21} & c_{22} & c_{23} \cr c_{31} & c_{32} & c_{33} } \right\rbrack \)

\( \left\lbrack \matrix{ a_{11} & a_{12} & a_{13} } \right\rbrack \times \left\lbrack \matrix{b_{11} \cr b_{21} \cr b_{31} } \right\rbrack = \left\lbrack \matrix{c_{11} } \right\rbrack \)

Tip:

To help you keep track of which row to use from the first matrix and which column from the second matrix, create your result matrix of the proper size, then methodically calculate the value for each individual element.

The algebraic properties for the matrix multiplication operator do not match those of the scalar multiplication operator. These are the most notable:

Not Commutative:

\( AB \ne BA \)

The order of the factor matrices definitely matters.

I think it is very important to illustrate this fact. Here is a simple \(2 \times 2 \) multiplication performed two times with the order of the input matrices switched. I have highlighted the only two terms that the two resulting answers have in common:

\( \left\lbrack \matrix{a & b \cr c & d } \right\rbrack \times \left\lbrack \matrix{w & x\cr y & z } \right\rbrack = \left\lbrack \matrix{(\color{red}{aw}+by) & (ax+bz)\cr (cw+dy) & (cx+\color{red}{dz}) } \right\rbrack \)

\( \left\lbrack \matrix{w & x\cr y & z } \right\rbrack \times \left\lbrack \matrix{a & b \cr c & d } \right\rbrack = \left\lbrack \matrix{(\color{red}{aw}+cx) & (bw+dx)\cr (ay+cz) & (by+\color{red}{dz}) } \right\rbrack \)

Product of Zero:

\( AB=0 \) does not necessarily imply that \( A=0 \) or \( B=0 \).

Scalar Multiplication is Commutative:

\( (kA)B=k(AB)=A(kB) \)

Associative Property:

\( (AB)C=A(BC) \)

Multiplication is associative; however, take note that the relative order for all of the matrices remains the same.

Transpose of a Product:

\( (AB)^T=B^TA^T \)

The transpose of a product is equivalent to the product of the transposed factors multiplied in reverse order.
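The size rules and the lack of commutativity are easy to verify with a small, self-contained sketch. It uses a nested `std::vector` with 0-based indices instead of the Matrix class, and the `Grid` alias and `multiply` name are my own. I have assumed that returning an empty grid is an acceptable way to signal that the sizes are not compatible.

```cpp
#include <cstddef>
#include <vector>

// Row-major storage: grid[row][column], with 0-based indices.
// An illustrative type for this sketch, not the Matrix class.
using Grid = std::vector<std::vector<double>>;

// Returns the product AB. If the number of columns in a does not equal
// the number of rows in b, an empty Grid is returned (an assumption made
// for this sketch).
Grid multiply(const Grid& a, const Grid& b)
{
  const std::size_t m = a.size();                  // rows in A
  const std::size_t n = (m == 0) ? 0 : a[0].size(); // columns in A
  const std::size_t u = b.size();                  // rows in B
  const std::size_t v = (u == 0) ? 0 : b[0].size(); // columns in B

  if (n != u)
  {
    return Grid{};
  }

  // The result has the rows of A and the columns of B: m x v.
  Grid c(m, std::vector<double>(v, 0.0));

  for (std::size_t i = 0; i < m; ++i)
  {
    for (std::size_t j = 0; j < v; ++j)
    {
      // Multiply row i of A into column j of B.
      for (std::size_t k = 0; k < n; ++k)
      {
        c[i][j] += a[i][k] * b[k][j];
      }
    }
  }

  return c;
}
```

Multiplying two \(2 \times 2\) matrices in both orders and comparing the results is a quick way to see that \(AB\) and \(BA\) generally differ.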

Code

We are going to use a two-step solution to create a general purpose matrix multiplication solution. The first step is to create a function that properly calculates a single element in the output matrix:

C++

    // Written as a free helper function, because it only needs
    // the public element() and columns() members of Matrix.
    double multiply_element(
      const Matrix& lhs,
      const Matrix& rhs,
      const size_t i,
      const size_t j
    )
    {
      double product = 0;

      // Multiply across the specified row, i, for the left matrix
      // and down the specified column, j, for the right matrix.
      // Accumulate the total of the products
      // to return as the calculated result.
      // The indices are 1-based to match the math notation; this
      // assumes that element() follows the same convention.
      for (size_t index = 1; index <= lhs.columns(); ++index)
      {
        product += lhs.element(i, index)
                 * rhs.element(index, j);
      }

      return product;
    }

Now create the outer function that performs the multiplication to populate each field of the output matrix:

C++

    // Because we may end up creating a matrix with
    // an entirely new size, it does not make sense
    // to have a *= operator for this general-purpose solution.
    // The product is returned as a new Matrix instead.
    Matrix operator*( const Matrix& lhs,
                      const Matrix& rhs)
    {
      // This assumes that Matrix is default-constructible. The result
      // is left in its default state if the sizes are not compatible.
      Matrix result;

      if (lhs.columns() == rhs.rows())
      {
        // Resize the result matrix to the proper size.
        result.resize(lhs.rows(), rhs.columns());

        // Calculate the value for each element
        // in the result matrix.
        for (size_t i = 1; i <= result.rows(); ++i)
        {
          for (size_t j = 1; j <= result.columns(); ++j)
          {
            result.element(i,j) = multiply_element(lhs, rhs, i, j);
          }
        }
      }

      return result;
    }

Summary

3D computer graphics relies heavily on the concepts found in the branch of math called linear algebra. I have introduced two basic constructs from linear algebra that we will need to move forward and perform the fundamental calculations for rendering a three-dimensional display. At this point I have only scratched the surface as to what is possible, and I have only demonstrated how. I will provide context and demonstrate the what and why, to a degree, on the path to helping you begin to work with three-dimensional graphics libraries, even if math is not one of your strongest skills.

References

Kreyszig, Erwin; "Chapter 7" from Advanced Engineering Mathematics, 7th ed., New York: John Wiley & Sons, 1993

The binary, octal and hexadecimal number systems pervade all of computing. Every command is reduced to a sequence of strings of 1s and 0s for the computer to interpret. These commands seem like noise, garbage, especially with the sheer length of the information. Becoming familiar with binary and other number systems can make it much simpler to interpret the data.

Once you become familiar with the relationships between the number systems, you can manipulate the data in more convenient forms. Your ability to reason, solve and implement solid programs will grow. Patterns will begin to emerge when you view the raw data in hexadecimal. Some of the most efficient algorithms are based on the powers of two. So do yourself a favor and become more familiar with hexadecimal and binary.

Number Base Conversion and Place Value

I am going to skip this since you can refer to my previous entry for a detailed review of number system conversions[^].

Continuous Data

Binary and hexadecimal are more natural number systems for use with computers because they both have a base of 2 raised to some exponent; binary is \(2^1\) and hexadecimal is \(2^4\). We can easily convert from binary to decimal. However, decimal is not always the most useful form, especially when we consider that we don't always have a nice organized view of our data. Learning to effectively navigate between the formats, especially in your head, increases your ability to understand programs loaded into memory, as well as streams of raw data that may be found in a debugger or analyzers like Wireshark.

The basic unit of data is a byte. While it is true that a byte can be broken down into bits, we tend to work with these bundled collections and process bytes. Modern computer architectures process multiple bytes, specifically 8 for 64-bit computers. And then there's graphics cards, which are using 128, 192 and even 256-bit width memory accesses. While these large data widths could represent extremely large numbers in decimal, the values tend to have encodings that only use a fraction of the space.

Recognize Your Surroundings

What is the largest binary value that can fit in an 8-bit field?

It will be a value that has eight ones: 1111 1111. Placing a space after every four bits helps with readability.

What is the largest hexadecimal value that can fit in an 8-bit field?

We can take advantage of the power of 2 relationship between binary and hexadecimal. Each hexadecimal digit requires four binary bits. It would be very beneficial to you to commit the following table to memory:

| Dec | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bin | 0000 | 0001 | 0010 | 0011 | 0100 | 0101 | 0110 | 0111 | 1000 | 1001 | 1010 | 1011 | 1100 | 1101 | 1110 | 1111 |
| Hex | 0x0 | 0x1 | 0x2 | 0x3 | 0x4 | 0x5 | 0x6 | 0x7 | 0x8 | 0x9 | 0xA | 0xB | 0xC | 0xD | 0xE | 0xF |

Now we can simply take each grouping of four bits, 1111 1111, and convert them into hex-digits, FF.
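The group-by-four conversion can be sketched in a few lines of Python. This is only an illustration of the idea; the function name `binary_to_hex` is made up for this example, and it assumes the input contains nothing but 0s, 1s and spaces.

```python
def binary_to_hex(bits: str) -> str:
    """Convert a binary string to hex by translating each 4-bit group."""
    digits = bits.replace(" ", "")
    # Pad on the left so the length is a multiple of four bits.
    digits = digits.zfill((len(digits) + 3) // 4 * 4)
    nibbles = [digits[i:i + 4] for i in range(0, len(digits), 4)]
    # Each 4-bit group maps to exactly one hex digit.
    return "".join("0123456789ABCDEF"[int(nibble, 2)] for nibble in nibbles)


print(binary_to_hex("1111 1111"))  # FF
```

Because each hex digit depends only on its own four bits, the conversion never needs to look at the rest of the number.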

What is the largest decimal value that can fit in an 8-bit field? This isn't as simple; we must convert the binary value into decimal. Using the number base conversion algorithm, we know that the place value just beyond the eighth bit is \(2^8\), or 256, so an 8-bit field can hold 256 distinct values. Since zero must be one of them, the largest value is \(2^8 - 1\), or 255.

Navigating the Sea of Data

Binary and hexadecimal (ok, and octal) are all number systems whose base is a power of two. Considering that computers work with binary, binary and the other number systems that fit nicely into the logical data blocks, bytes, become much more meaningful. Through practice, and thanks to the relatively small scale of the values that can be represented with 8 bits, converting a byte to a decimal value feels natural to me. However, when the data is represented with 2, 4 and 8 bytes, the values grow quickly, decimal form loses its meaning, and my intuition becomes useless as to what value I am dealing with.

For example:

What is the largest binary value that can fit in a 16-bit field?

It will be a value that has sixteen ones: 1111 1111 1111 1111.

What is the largest hexadecimal value that can fit in a 16-bit field? Again, let's convert each of the blocks of four bits into a hex-digit: FFFF.

What is the largest decimal value that can fit in a 16-bit field?

Let's see, it is \(2^{16} - 1\), so that makes it 65355, 65535, or 65555; it's somewhere around there.
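When memory fails, the three forms are easy to compute. A minimal Python sketch, with `largest_value` being a hypothetical helper name and the field assumed to be unsigned:

```python
def largest_value(bits: int) -> int:
    """Largest unsigned value that fits in a field of the given bit width."""
    return (1 << bits) - 1


for width in (8, 16):
    value = largest_value(width)
    print(f"{width:2}-bit  bin={value:b}  hex={value:X}  dec={value}")
```

The binary and hexadecimal columns are all ones and all Fs for every width; only the decimal column has to be looked up or calculated.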

A real-world example that I believe many people are familiar with is the RGB color encoding for pixels. You could add a fourth channel to represent alpha and encode RGBA. If we use one byte per channel, we can encode all four channels in a single 32-bit word, which can be processed very efficiently.

Imagine we are looking at pixel values in memory and the bytes appear in this format: RR GG BB. It takes two hexadecimal digits to represent a single byte. Therefore, the value of pure green could be represented as 00 FF 00. Viewing this same value as a 24-bit decimal number, 65,280, is much less helpful.

If we were to change the value to this, 8,388,608, what has changed? We can tell the value has changed by roughly 8.3M. Since a 16-bit value can hold ~65K, we know that the third byte has been modified, and we can guess that it has been increased to 120 or more (8.3M / 65K). But what is held in the lower two bytes now? Our ability to deduce information is not much greater than an estimate. The value in hexadecimal is 80 00 00.

The difference between 8,388,608 and 8,388,607 is enormous with respect to data encoded at the byte level:

| Decimal | Hexadecimal bytes (32-bit) |
|---|---|
| 8,388,608 | 00 80 00 00 |
| 8,388,607 | 00 7F FF FF |
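To see the same values channel by channel, here is a short Python sketch that packs and unpacks a 24-bit RR GG BB value. The helper names `pack_rgb` and `unpack_rgb` are invented for this illustration.

```python
def pack_rgb(red: int, green: int, blue: int) -> int:
    """Pack three 8-bit channels into one 24-bit value laid out as RR GG BB."""
    return (red << 16) | (green << 8) | blue


def unpack_rgb(value: int) -> tuple:
    """Split a 24-bit value back into its (red, green, blue) bytes."""
    return (value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF


for value in (65_280, 8_388_608, 8_388_607):
    r, g, b = unpack_rgb(value)
    print(f"{value:>9,} -> {r:02X} {g:02X} {b:02X}")
```

A change of one in decimal flips every byte of the pixel, which is obvious in the hexadecimal column and invisible in the decimal one.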

Now consider that we are dealing with 24-bit values in a stream of pixels. For every 12 bytes, we will have encoded 4 pixels. Here is a representation of what we would see in the data, since most computer views of memory are arranged in 32-bit groupings:

| 32-bit word (decimal) | Bytes (hexadecimal) |
|---|---|
| 4,260,948,991 | FD F8 EB FF |
| 2,568,312,378 | 99 15 56 3A |
| 2,360,417,546 | 8C B1 1D 0A |
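The mismatch between the 32-bit view and the 24-bit pixels can be reproduced with a small Python sketch. It assumes the twelve bytes above are read in the order shown and that each 32-bit word is interpreted as big-endian; the variable names are only illustrative.

```python
import struct

stream = bytes.fromhex("FD F8 EB FF 99 15 56 3A 8C B1 1D 0A")

# Debugger-style view: three 32-bit words (big-endian assumed).
words = struct.unpack(">3I", stream)

# Pixel view: four 3-byte RR GG BB groups taken from the same bytes.
pixels = [stream[i:i + 3].hex(" ").upper() for i in range(0, len(stream), 3)]

for word in words:
    print(f"{word:>13,}  0x{word:08X}")
print(pixels)
```

Only the first pixel starts on a word boundary; the other three straddle two words, which is why the decimal view of each word says nothing useful about the colors.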

Binary

I typically try to use binary only up to 8 bits. For anything larger than that, I simply skip the first 8 bits (1 byte) and focus on the next 8 bits. For example: 1111 1111 1111 1111. As I demonstrated with the RGB color encoding, values do not always represent a single number; often they are a stream of data. So whether there is one byte, four bytes, or a gigabyte's worth of data, we usually process it either one byte or one word at a time. We do the same thing with decimal numbers when we use a comma (or some other regional punctuation) to separate groups of thousands, e.g. 4,294,967,295. 294 of what? Millions.

Binary manipulation can be found in many contexts. One of the most common is storing a collection of flags in an unsigned buffer. These flags can be flipped on and off with the bitwise operations of your programming language. Using a mask with multiple bits allows an enumerated value with more than two options to be encoded within the binary buffer. I'm not going to describe the mechanics in detail here; I simply want to demonstrate that data is encoded in many ways, and there are many reasons for you to become proficient at picking apart byte-streams down to the bit.
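For readers who want a concrete picture of flags and multi-bit masks, here is a minimal Python sketch. The flag layout (`FLAG_READY`, `FLAG_ERROR`, `MODE_MASK`) is entirely hypothetical and chosen only for illustration.

```python
# Hypothetical layout of one status byte, for illustration only.
FLAG_READY = 0b0000_0001
FLAG_ERROR = 0b0000_0010
MODE_MASK = 0b0000_1100   # two bits, so four possible modes
MODE_SHIFT = 2


def set_mode(status: int, mode: int) -> int:
    """Store a 2-bit mode value inside the status byte."""
    return (status & ~MODE_MASK) | ((mode << MODE_SHIFT) & MODE_MASK)


def get_mode(status: int) -> int:
    """Read the 2-bit mode value back out of the status byte."""
    return (status & MODE_MASK) >> MODE_SHIFT


status = 0
status |= FLAG_READY        # turn a flag on
status |= FLAG_ERROR
status &= ~FLAG_ERROR       # turn a flag off
status = set_mode(status, 3)
print(f"{status:08b}")      # 00001101
```

Reading the result in binary shows each field directly: bit 0 is the ready flag, bit 1 is the cleared error flag, and bits 2 and 3 hold the mode.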

Hexadecimal

It is just as beneficial to be able to convert between hexadecimal and decimal in your head from 1 to 255, especially if you ever deal with selecting colors for web-pages or you edit images in programs like Photoshop. It only takes two hex-digits to represent an 8-bit byte. If you memorize the values that are defined by the high-order hex-digit, reading hexadecimal byte values becomes almost as easy as reading decimal values. There are two tables listed below. The table on the left indicates the value mapping for the high-order and low-order hex-digits of a byte. The table on the right contains a set of landmark values that you will most certainly encounter and find useful:


Some of the numbers listed in the table on the right are more obvious than others as to why I chose to include them in the map. For example, 100 is a nice round number that we deal with daily. When you run into 0x64, you can automatically map that in your mind to 100 and have a reference point for its value. Alternatively, if you have a value such as 0x6C, you could start by calculating the difference: 0x6C - 0x64 = 8; add that to 100 to know the decimal value is 108.
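The high-order and low-order digit mapping amounts to one multiplication and one addition. A small Python sketch of that mental arithmetic follows; the function name `hex_byte_to_decimal` is made up for this example, and the input is assumed to end in exactly two hex digits.

```python
def hex_byte_to_decimal(text: str) -> int:
    """Convert a two-digit hex byte by adding its high and low digit values."""
    high, low = text[-2], text[-1]
    # The high-order digit counts sixteens, the low-order digit counts ones.
    return int(high, 16) * 16 + int(low, 16)


print(hex_byte_to_decimal("0x64"))  # 100
print(hex_byte_to_decimal("0x6C"))  # 108
```

Memorizing the sixteen high-order values (0x10 is 16, 0x20 is 32, and so on) leaves only a single-digit addition to do in your head.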

Some of you will recognize the values 127, 168, 192, 224 and 239. For those that do not see the relevance of these values, they are common octets found in landmark network IP addresses. The table below shows the name and common dotted-decimal form, as well as the hexadecimal form of the address in both big-endian and little-endian format:

| Name | IPv4 Address | Hex (big-endian) | Hex (little-endian) |
|---|---|---|---|
| local-host | 127.0.0.1 | 0x7F000001 | 0x0100007F |
| private range | 192.168.x.x | 0xC0A80000 | 0x0000A8C0 |
| multicast base address | 224.0.0.0 | 0xE0000000 | 0x000000E0 |
| multicast last address | 239.255.255.255 | 0xEFFFFFFF | 0xFFFFFFEF |

One additional fact related to the IPv4 multicast address range: the official definition declares any IPv4 address with the leading four bits set to 1110 to be a multicast address. 0xE is the hex-digit that maps to binary 1110. This explains why the full multicast range of addresses is from 0xE0000000 to 0xEFFFFFFF, or written in dotted-decimal notation as 224.0.0.0 to 239.255.255.255.
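Both the endian forms and the multicast test can be checked with Python's standard `ipaddress` and `struct` modules. This is a sketch only; `hex_forms` and `is_multicast` are hypothetical helper names, not part of any library.

```python
import ipaddress
import struct


def hex_forms(dotted: str) -> tuple:
    """Return the (big-endian, little-endian) hex strings for an IPv4 address."""
    packed = ipaddress.IPv4Address(dotted).packed   # 4 bytes in network order
    big, = struct.unpack(">I", packed)
    little, = struct.unpack("<I", packed)
    return f"0x{big:08X}", f"0x{little:08X}"


def is_multicast(dotted: str) -> bool:
    """True when the leading four bits are 1110, i.e. the top hex digit is E."""
    return int(ipaddress.IPv4Address(dotted)) >> 28 == 0xE


print(hex_forms("127.0.0.1"))      # ('0x7F000001', '0x0100007F')
print(is_multicast("224.0.0.0"))   # True
```

Shifting right by 28 bits leaves only the top hex digit, so the whole range check collapses into a comparison with 0xE.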

Octal

I'm sure octal has uses, but I have never run into a situation where I have used it.

Actually, there is one place, which is to demonstrate this equality:

Oct 31 = Dec 25

Landmark Values

Binary has landmark values similar to the way decimal does. In decimal, for example, we have the 1's up to the 10's, the 10's through 100, then 1000, 1M, 1B ... These values are focused on powers of 10, and arranged in groups that provide a significant enough range to be meaningful when you round off or estimate. It is worth becoming proficient with the scale of each binary bit up to \(2^{10}\), which is equal to 1024, or 1K. At this point, we can use the powers of two in multiple contexts: 1) simple binary counting, 2) measuring data byte lengths.

I have constructed a table below that shows landmark values with the power of 2. On the left I have indicated the name of the unit if we are discussing bits and the size of a single value; for example, 8 bits is a byte. I haven't had the need to explore data values larger than 64 bits yet, but it's possible that some of you have. To the right I have indicated the units of measure used in computers when we discuss sizes. 1024 bytes is a kilobyte, and 1024 kilobytes is officially a megabyte (not if you're a hard drive manufacturer...). I continued the table up through \(2^{80}\), which is known as a "yottabyte." Becoming familiar with the landmarks up to 32 bits is probably enough for most people. To the far right I converted the major landmark values into decimal to give you a sense of size and scale for these numbers.

| Unit (bits) | Binary Exponent | Unit (bytes) | Place Value | Largest Value |
|---|---|---|---|---|
| Bit (b) | \(2^{0}\) | Byte (B) | 1 | |
| | \(2^{1}\) | Word | 2 | |
| | \(2^{2}\) | Double-word | 4 | |
| Nibble | \(2^{3}\) | Quad-word | 8 | |
| | \(2^{4}\) | | 16 | |
| | \(2^{5}\) | | 32 | |
| | \(2^{6}\) | | 64 | |
| Byte (B) | \(2^{7}\) | | 128 | 255 |
| | \(2^{8}\) | | 256 | |
| | \(2^{9}\) | | 512 | |
| | \(2^{10}\) | Kilobyte (KB) | 1024 | |
| | \(2^{20}\) | Megabyte (MB) | \(1024^{2}\) | 1,048,576 |
| | \(2^{30}\) | Gigabyte (GB) | \(1024^{3}\) | 1,073,741,824 |
| Double-word | \(2^{31}\) | | \(1024^{3} \cdot 2\) | 4,294,967,295 |
| | \(2^{32}\) | | \(1024^{3} \cdot 2^{2}\) | |
| | \(2^{40}\) | Terabyte (TB) | \(1024^{4}\) | |
| | \(2^{50}\) | Petabyte (PB) | \(1024^{5}\) | |
| | \(2^{60}\) | Exabyte (EB) | \(1024^{6}\) | |
| Quad-word | \(2^{63}\) | | \(1024^{6} \cdot 2^{3}\) | 9,223,372,036,854,775,807 |
| | \(2^{70}\) | Zettabyte (ZB) | \(1024^{7}\) | |
| | \(2^{80}\) | Yottabyte (YB) | \(1024^{8}\) | |
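The byte-size landmarks follow a single pattern: every unit is another factor of 1024, or ten more bits. A brief Python sketch that regenerates them, where `UNITS` and `unit_size` are names invented for this illustration:

```python
# Binary (1024-based) size units, smallest first.
UNITS = ["KB", "MB", "GB", "TB", "PB", "EB", "ZB", "YB"]


def unit_size(unit: str) -> int:
    """Number of bytes in one unit, e.g. "MB" -> 1024**2 == 2**20."""
    return 1024 ** (UNITS.index(unit) + 1)


for unit in UNITS:
    exponent = 10 * (UNITS.index(unit) + 1)
    print(f"1 {unit} = 2^{exponent} = {unit_size(unit):,} bytes")
```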

Summary

A large mass of symbols, such as a memory dump from a computer, can appear overwhelming. The tools that we use to develop and debug software help us organize and make sense of this data. However, these tools cannot always display this data in formats that are helpful for particular situations. In these cases, understanding the relationships between the numbers and their different representations can be extremely helpful. This is especially true when the data is encoded at irregular offsets. I presented a few common forms that you are likely to encounter when looking at the values stored in computer memory. Hopefully you can use these identity and navigation tips to improve your development and debugging abilities.

I have started writing an entry that discusses the value of becoming familiar with the binary (base-2) and hexadecimal (base-16) number systems because they are generally more useful to a programmer than decimal (base-10). My daughter is currently in high-school and she is taking a programming course. One of the things that she is currently learning how to do is count in binary. So I decided to expand my explanation of conversion between number systems as a reference for her and all of those who would like a refresher. The entry following this one will describe how binary and hexadecimal will make you a more effective programmer.

Place Value: \( \text{coefficient} \cdot \text{radix}^{\text{place}} \)

To be able to convert numbers between different numerical bases, it is important to review the concept of place value. Each place, or column, in a number represents a value equal to the number system's base raised to the power of its place index, starting at 0. The official name of the base value is the radix. For example, consider the first three place values for a number system that has a radix of b.

\( b^2 + b^1 + b^0 \)

If we are dealing with decimal (base-10), the radix = 10 and we would have place values of:

\( 10^2 + 10^1 + 10^0 \)

Now we have the 1's column, 10's column, and 100's column:

100 + 10 + 1

The number stored at each column in the number is called the coefficient. To construct the final number, we multiply the coefficient by its place-value and add the results at each place together. Here is the decimal number 237 broken down by place value:

\( 2 \cdot b^2 + 3 \cdot b^1 + 7 \cdot b^0 \)

\( 2 \cdot 10^2 + 3 \cdot 10^1 + 7 \cdot 10^0 \)

200 + 30 + 7

237

Hopefully decimal form is so natural to you that 237 seems like a single number, rather than the sum of place values that are multiplied by their coefficient.
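The place-value breakdown works for any radix, which a short Python sketch can make explicit. The function name `place_values` is hypothetical, and the input is assumed to be a non-negative integer.

```python
def place_values(number: int, radix: int = 10) -> list:
    """Return (coefficient, place) pairs, most significant place first."""
    if number == 0:
        return [(0, 0)]
    terms = []
    place = 0
    while number > 0:
        # The remainder is the coefficient for the current place.
        number, coefficient = divmod(number, radix)
        terms.append((coefficient, place))
        place += 1
    return terms[::-1]


terms = place_values(237)
print(terms)                              # [(2, 2), (3, 1), (7, 0)]
print(sum(c * 10 ** p for c, p in terms)) # 237
```

Calling `place_values(5, 2)` applies the same breakdown in binary and returns the coefficients 1, 0, 1.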

The Binary Number System

There are 10 types of people, those that understand binary and those that don't

If you are wondering what the eight other types of people are, continue reading.

Binary is a base-2 number system. This means that each column in a binary number represents a value of 2 raised to the power of its place index. A number system requires a number of symbols equal to its base value to represent each place value, and zero is always the first symbol to include in this set. For instance, decimal (base ten) requires ten symbols: {0, 1, 2, 3, 4, 5, 6, 7, 8, 9}. Therefore, binary (base two) only requires two symbols: {0, 1}.

Adding the radix as a subscript after a number is a common notation to indicate the base-value of a number when there is a possibility for confusion. Unless the context specifically indicates a different number system, decimal form is assumed. For example, the value 2 in binary:

\( 10_2 = 2_{10} \)

If we had used that notation in the joke at the beginning... well, it just wouldn't have been as funny.

Another place that you will see the subscript notation is when you study logarithms:

\( \log_a x = \frac{\log_b x}{\log_b a} \)
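As a quick worked instance of this identity (the numbers are chosen here only for illustration, to tie back to powers of two), take \(a = 2\), \(b = 10\) and \(x = 1024\):

\( \log_{2} 1024 = \frac{\log_{10} 1024}{\log_{10} 2} \approx \frac{3.0103}{0.30103} = 10 \)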

I'll leave the details for logarithms for another time.

Counting in Binary

When learning anything new, it can be helpful to map something that you already know to the new topic. For a new number system, counting with both number systems can be a helpful exercise. Counting in all number systems uses the same process:

1. Start with zero in the least significant column.

2. Count up until you have used all of the symbols in increasing order in the least significant (1's) column.

3. When the limit is reached, increment the value in the next column, and reset the current column to zero.

4. If the next column has used all of the symbols, increment the column after that and reset the current column.

5. Once no further columns reach their limit, return to step 2 to continue counting.
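The counting procedure listed above can be sketched as a small Python generator that works for any radix from 2 to 16. This is one possible rendering of the steps, not a canonical algorithm, and the name `count` is made up for the example.

```python
def count(radix: int, limit: int):
    """Yield the first `limit` numbers in the given radix as digit strings."""
    symbols = "0123456789ABCDEF"[:radix]
    columns = [0]                       # least significant column first
    for _ in range(limit):
        yield "".join(symbols[c] for c in reversed(columns))
        position = 0
        # Reset every column that has already used all of its symbols.
        while position < len(columns) and columns[position] == radix - 1:
            columns[position] = 0
            position += 1
        if position == len(columns):
            columns.append(1)           # an implied 0 becomes 1
        else:
            columns[position] += 1


print(list(count(2, 5)))        # ['0', '1', '10', '11', '100']
print(list(count(10, 11))[-1])  # 10
```

The same loop produces the decimal rollover from 9 to 10 and the binary rollover from 1 to 10, because the only thing that changes between number systems is how many symbols a column can use.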

Starting with decimal, if we count up from zero to 9, we get:

0 - 1 - 2 - 3 - 4 - 5 - 6 - 7 - 8 - 9 ...roll over

We are now at step 3: we have reached the limit, so we increment the next column from an implied 0 to 1, and reset the current column, for the result of:

10