Alchemy is a collection of independent library components that specifically relate to efficient low-level constructs used with embedded and network programming.

The latest version of Embedded Alchemy can be found on GitHub. Here are the most recent entries, as well as Alchemy topics to be posted soon:

- Steganography

- Coming Soon: Alchemy: Data View

- Coming Soon: Quad (Copter) of the Damned

If you ask a group of 10 software engineers to develop unit tests for the same object, you will end up with 10 unique approaches to testing that object. Now imagine each engineer was given a different object. This was my experience with unit testing before I discovered how much more useful and valuable unit tests become when they are developed with a test framework. A unit test framework provides a consistent foundation to build all of your tests upon. The method to set up and run each and every test will be the same. Most importantly, the output provided by each test will be the same, no matter who developed the test.

In a previous entry, The Purpose of a Unit Test, I clarified the benefits of writing and maintaining unit tests, who does the work, and why you should seriously consider developing them. I mentioned unit test frameworks and test harnesses, but I did not provide any details. In this entry I give a high-level overview of unit test frameworks and a quick start sample with the test framework that I use.

Unit Test Frameworks

As with almost any tool in our industry, unit test frameworks can incite heated debates of the "My tool is better than yours" variety. For the first half of my career I used simple driver programs to exercise the functionality of a new object I was developing. As development progressed, I depended on my test driver less and less, and eventually the driver no longer compiled. I had always considered writing a driver framework to make things easier; however, testing was not something I was extremely interested in up to that point in my career.

Once I discovered a few great books written about unit testing, I quickly learned of better approaches to testing software. Among other things, I learned that writing and maintaining unit tests does not have to be that much extra work. Once I knew what to look for, I found that a plethora of high-quality test frameworks already existed. xUnit is the style of test framework that I use. To my knowledge, SUnit, written for Smalltalk by Kent Beck, was the first framework of this type. JUnit, for Java, seems to be the best-known framework of this style.

A very detailed list of test frameworks can be found at Wikipedia. The number of frameworks listed is enormous, and it appears there are more frameworks to choose from for C++ than for any other language. I assume this is due to the variety of C++ compilers, platforms and environments that C/C++ is used in. Some of the frameworks are commercial products; most are released as open source tools. Here are a few of the frameworks that I am familiar with, or have at least inspected to see whether I like them:

- Boost Test Library

- CppUnit / CppUnitLite

- CxxTest

- GoogleTest

- Visual Studio

Qualities

There are many factors that could play into what will make a unit test policy successful with your development team; some of these factors are the development platform, the type of project and even the culture of the company. These factors affect which qualities will be valuable when selecting a unit test framework. I am sure there are many more than I list below. However, this is the short list of qualities that I considered when I selected a test framework, and it has served me well.

xUnit Format

There is more literature written on xUnit test frameworks than on any other style. In fact, it appears that frameworks are classified as either xUnit or not. This is important because this test paradigm is propagated across many languages. Therefore, if you program in more than one language, or would like a tool that new developers at your company can come up to speed on the quickest, you should select an xUnit-style framework.

Portability

Even though Visual Studio is my editor of choice and some really nice test tools have been integrated into its latest versions, I have chosen a framework that is not dependent on any one platform. I prefer to write portable code for the majority of my work. Moreover, C++ itself is a portable language. There is no reason I should bind all of my tests to a Windows platform that requires Visual Studio.

Simplicity

Any tool that you try to introduce into a development process should be relatively easy to use. Otherwise, developers will get fed up and create a new process of their own. Since many think of unit tests as extra work, setting up a new test harness and writing tests should not feel like work. Ease of use will lower the resistance for your team to at least try the tool, and increase the chances of successful adoption.

Flexibility

Any type of framework is supposed to give you a basis to build upon. Many frameworks can meet your needs as long as you develop the way the authors of the framework want you to. Microsoft Foundation Classes (MFC) is a good example of this. If you stick with their document/view model you should have no problems. The further your application moves away from that model, the more painful it becomes to use MFC. When selecting a unit test framework you will want flexibility to be able to write tests however you want. Figuring out how to test some pieces of software is very challenging. A framework that requires tests to be written a certain way could make certain types of tests damn near impossible.

I suggest that you do a little research of your own, and weigh the pros and cons of each framework before deciding upon one. They all have strengths and weaknesses; therefore, I don't believe that any one of them is better than the others for all situations.

CxxTest

I have built my test environment and process around the CxxTest framework. When I chose it, I worked with a wide variety of compilers, many of which had poor support for some of C++'s features. CxxTest had the fewest compiler requirements, and the framework is only a handful of header files, so there are no external libraries that need to be linked or distributed with the tests. Many of the test harnesses developed for C++ early on required RTTI; this feature definitely wasn't supported by the compiler with which I started using CxxTest. The syntax for creating unit tests is natural-looking C++, which I think makes the tests much easier to read and maintain. Finally, it is one of the frameworks that does not require any special registration lists to be created to run the tests that are written; this eliminates some of the work that would make testing more cumbersome.

The one thing that I consider a drawback is that Python is required to use CxxTest. Adding tools to a build environment makes it more complex, and one of my goals has always been to keep things as simple as possible. Fortunately, Python is easily accessible for a variety of platforms, so this has never been a problem. Python is used to generate the test runner with the registered tests, which eliminates the extra work to register the tests manually. When you work on large projects and have thousands of tests, this feature is priceless.

CxxTest is actively maintained at SourceForge.net/CxxTest

Quick start guide

I want to give you a quick summary for how to get started using CxxTest. You can access the full documentation online at http://cxxtest.com/guide.html. The documentation is also available in PDF format. There are four basic things to know when starting with CxxTest.

1. Include the CxxTest header

All of the features from CxxTest can be accessed with the header file:

```cpp
#include <cxxtest/TestSuite.h>
```

2. Create a test fixture class

A test fixture, or harness, is a class that contains all of the logic to programmatically run your unit tests. All of the test framework functionality is in the class CxxTest::TestSuite. You must derive your test fixture publicly from the TestSuite class. You are free to name your class however you prefer.

```cpp
class TestFixture : public CxxTest::TestSuite
{
  // ...
};
```

3. Test functions

All functions in your fixture that are meant to be unit tests must start with the word test. The name is case-insensitive; therefore, the rest of the name can use CamelCase, snake_case, or even _1337_Ca53 style, as long as the function name starts with "Test" and is a valid C++ symbol name. Also, test functions must take no parameters and return void. Finally, all test functions must be publicly accessible:

```cpp
public:
  void Test(void);

  // Examples
  void TestMethod(void);
  void test_method(void);
  void TeSt_1337_mEtH0D(void);
```

4. (Optional) Setup / Teardown

As in the xUnit test philosophy, there is a pair of virtual functions that you can override in your test suite: setUp and tearDown. setUp is called before each and every test to perform environment initialization that must occur for every test in your test suite. Its counterpart, tearDown, is called after every test to clean up before the next test. I highly encourage you to factor code out of your tests into utility functions when you see duplication. When all of your tests require the same initialization/shutdown sequences, move the code into these two member functions. It may be only two or three lines per test; however, when you reach 20, 50, 100 or more tests, changing the initialization in each test becomes a significant effort.

```cpp
void setUp(void);
void tearDown(void);
```
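To make the call order concrete, here is a minimal hand-rolled sketch of what an xUnit-style runner does with these two functions. It is not CxxTest's generated code; Suite, k_tests and RunAll are hypothetical names used only for illustration. The k_tests array stands in for the registration list that CxxTest's Python script generates for you.

```cpp
#include <string>
#include <vector>

// Hypothetical fixture with the shape of an xUnit test suite.
// It is NOT CxxTest; it only records when each function runs.
class Suite
{
public:
  std::vector<std::string> log;

  void setUp()    { log.push_back("setUp"); }
  void tearDown() { log.push_back("tearDown"); }

  void TestFirst()  { log.push_back("TestFirst"); }
  void TestSecond() { log.push_back("TestSecond"); }
};

// Registration list: bookkeeping that would otherwise have to be
// kept in sync with the test functions by hand.
using TestMethod = void (Suite::*)();
const TestMethod k_tests[] = { &Suite::TestFirst, &Suite::TestSecond };

// Wrap every test in setUp/tearDown, as an xUnit runner does.
void RunAll(Suite& suite)
{
  for (TestMethod test : k_tests)
  {
    suite.setUp();
    (suite.*test)();
    suite.tearDown();
  }
}
```

Calling RunAll on a Suite leaves log holding setUp, TestFirst, tearDown, setUp, TestSecond, tearDown: every test gets a fresh initialization and cleanup, regardless of the order in which the tests run.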

Short Example

I have written a short example to demonstrate what a complete test would look like. Consider this implementation of a function that indicates whether a positive integer is a leap year:

```cpp
#include <cstddef>

bool IsLeapYear(std::size_t year)
{
  // 1. Leap years are divisible by 4.
  if (year % 4)
    return false;

  // 3. Except century mark years.
  if (!(year % 100))
  {
    // 4. However, every 4th century
    //    is also a leap year.
    return !(year % 400);
  }

  // 2. Divisible by 4 and not a century mark.
  return true;
}
```

Each individual unit test should aim to verify a single code path or piece of logic. I have marked each of the code paths with a numbered comment in the IsLeapYear function. I have identified four unique paths through this function, and implemented four functions to test each of the paths.

```cpp
// IsLeapYearTestSuite.h

#include <cxxtest/TestSuite.h>
class IsLeapYearTestSuite : public CxxTest::TestSuite
{
public:
  // Test 1
  void TestIsLeapYear_False_NotMod()
  {
    // Verify 3 instances of this case.
    TS_ASSERT_EQUALS(false, IsLeapYear(2013));
    TS_ASSERT_EQUALS(false, IsLeapYear(2014));
    TS_ASSERT_EQUALS(false, IsLeapYear(2015));
  }

  // Test 2
  void TestIsLeapYear_True()
  {
    TS_ASSERT_EQUALS(true, IsLeapYear(2016));
  }

  // Test 3
  void TestIsLeapYear_False_Mod100()
  {
    // These century marks do not qualify.
    TS_ASSERT_EQUALS(false, IsLeapYear(700));
    TS_ASSERT_EQUALS(false, IsLeapYear(1800));
    TS_ASSERT_EQUALS(false, IsLeapYear(2900));
  }

  // Test 4
  void TestIsLeapYear_True_Mod400()
  {
    // Verify 2 instances of this case.
    TS_ASSERT_EQUALS(true, IsLeapYear(2000));
    TS_ASSERT_EQUALS(true, IsLeapYear(2400));
  }
};
```

Before this test can be compiled, the python script must be run against the test suite header file to generate the test runner:

```
python.exe --runner=ParenPrinter -o IsLeapYearTestRunner.cpp IsLeapYearTestSuite.h
```

Now you can compile the output cpp file that you specify, along with any other dependency files that your test may require. For this example simply put the IsLeapYear function in the test suite header file. This is the output you should expect to see when all of the tests pass:

```
Running 4 tests....OK!
```

If the second test were to fail, the output would appear like this:

```
Running 4 tests.
In IsLeapYearTestSuite::TestIsLeapYear_True:
IsLeapYearTestSuite.h(19): Error: Expected (true == IsLeapYear(2016)), found (true != false)
..
Failed 1 of 4 tests
Success rate: 75%
```

When I execute the tests within Visual Studio and an error occurs, I can double-click on the error and I am taken to the file and line where it occurred, just as with compiler errors. The output printers can be customized to display the output however you prefer. One of the output printers that comes with CxxTest is an XmlPrinter that is compatible with common continuous integration tools such as Jenkins.

Summary

I believe that unit tests are almost as misunderstood as how to effectively apply object-oriented design principles, which I will tackle another time. It seems that almost everyone you ask will agree that testing is important, a smaller group will agree that unit tests are important, and even fewer put this concept into practice. Select a unit test framework that meets the needs of your organization's development practices to maximize the value of any tests that are developed for your code.

Introduction to Network Alchemy

While many of the principles of developing robust software are easy to explain, it is much more difficult to know how and when to apply them. Practice and learning from mistakes are generally the most productive ways to understand these principles. However, it is much more desirable to understand the principles before a flawed system is built; an example from the physical world is the Tacoma Narrows Bridge. Therefore, I am starting a journey to demonstrate how to create robust software. This is not a simple task that can be summarized in a magazine article, a chapter in a book, or a reference application with full source code.

This journey starts now, and I expect it to continue over the next few months in a series of entries. I will demonstrate and document the design and development of a small library intended to improve the quality of network communication software. Developing code for network data transmission is very cumbersome and error prone. The bugs are generally subtle defects that may only surface once you use a new compiler or move to a new operating system or hardware platform. As with any type of software, there are also the bugs that can be introduced by changes. So let's begin by identifying what we need and the problems we are trying to avoid.

Inter-process Communication

The core topic of discussion is Inter-process Communication (IPC). IPC is required when two processes do not share the same memory pool by default. IPC mechanisms are used to communicate between the two separate processes, in the form of message transmissions and data transfers. There are many mediums used to communicate between two programs, such as:

- Shared Memory Pools

- Pipes

- Network Sockets

- Files

Regardless of the medium used to transfer data, the process of preparing the data remains the same. This process is repetitive, mundane, and very error prone. This is the portion of the IPC process that I will focus on first. Let's break down the typical tasks that are required to communicate with IPC.

Serialize Structured Data

Data has many forms. A set of numbers, complex classes, raw buffers and bitmap images are examples of information that may be transferred between processes. One important thing to remember is that two different programs will most likely structure the data differently. Even though the information is the same, differences in compilers, languages, operating systems and hardware platforms may cause the receiving program to represent the data differently than the program that originally created it. Therefore, it is very important to convert the data into a well-defined format shared between the two endpoints of the IPC session. A packet format definition is a standard method commonly used to define these serialized message formats.

Interpret Serialized Data

Once the data has been transferred and received by the destination endpoint, it must be interpreted. This process is the opposite of serialization. However, the consumer of the data is not necessarily written by the same author as the source endpoint that created the data. Therefore, the packet format definition should be used to interpret the data that has been received. A common implementation is to take the data from the binary buffer and assign the values into fields of a structure for ease of access. This approach makes the code much easier to understand.
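As a minimal sketch of both directions, assume a hypothetical packet format definition: a 16-bit id followed by a 32-bit value, both big-endian, 6 bytes in total. Packet, Serialize and Deserialize are illustrative names, not part of Alchemy. Writing and reading each byte explicitly with shifts keeps the wire format independent of the host's structure layout and byte order.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical packet format definition (wire layout):
//   offset 0: uint16_t id    (big-endian)
//   offset 2: uint32_t value (big-endian)
//   total:    6 bytes
struct Packet
{
  std::uint16_t id;
  std::uint32_t value;
};

const std::size_t k_packetSize = 6;

// Serialize: write each field byte by byte in the agreed order.
void Serialize(const Packet& packet, unsigned char* out)
{
  out[0] = static_cast<unsigned char>(packet.id >> 8);
  out[1] = static_cast<unsigned char>(packet.id);
  out[2] = static_cast<unsigned char>(packet.value >> 24);
  out[3] = static_cast<unsigned char>(packet.value >> 16);
  out[4] = static_cast<unsigned char>(packet.value >> 8);
  out[5] = static_cast<unsigned char>(packet.value);
}

// Interpret: rebuild the structure from the same definition.
// Returns false if the buffer is too short to hold a packet.
bool Deserialize(const unsigned char* in, std::size_t length, Packet& packet)
{
  if (in == nullptr || length < k_packetSize)
    return false;

  packet.id    = static_cast<std::uint16_t>((in[0] << 8) | in[1]);
  packet.value = (static_cast<std::uint32_t>(in[2]) << 24) |
                 (static_cast<std::uint32_t>(in[3]) << 16) |
                 (static_cast<std::uint32_t>(in[4]) << 8)  |
                  static_cast<std::uint32_t>(in[5]);
  return true;
}
```

Because both functions are driven by the same format definition rather than by the in-memory layout of Packet, the two endpoints can be built with different compilers or run on different hardware and still agree on the bytes.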

Translate Data Messages

Another common operation is to take an incoming message in one format and convert it to a different message format before further processing. This is usually a simple task; however, if you have a large number of messages to convert, the task can quickly become mundane. In my experience, mundane tasks that require a large amount of repetitive code are very error prone due to copy/paste errors.

This is a great example of a problem that occurs frequently and is usually solved with brute force. This should be a major concern from a maintenance perspective, because this solution generates an enormous amount of code to maintain. There is a high probability that errors will be introduced if fundamental changes are ever made to this body of code. I plan on creating a simple and maintainable solution to this problem as part of Network Alchemy.
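For illustration, here is what a single brute-force translation typically looks like; both message formats are hypothetical. Multiply this function by dozens of message pairs and the copy/paste risk becomes clear: assigning the wrong source field to a destination of the same type compiles without complaint.

```cpp
#include <cstdint>

// Hypothetical incoming format.
struct SensorMsgV1
{
  std::uint16_t sensorId;
  std::int16_t  temperature;   // tenths of a degree
  std::int16_t  humidity;      // tenths of a percent
};

// Hypothetical format used by the rest of the application.
struct SensorMsgV2
{
  std::uint32_t sensorId;
  std::int32_t  temperature;   // tenths of a degree
  std::int32_t  humidity;      // tenths of a percent
};

// One hand-written translation per message pair.
// Swapping temperature and humidity here would still compile.
SensorMsgV2 Translate(const SensorMsgV1& in)
{
  SensorMsgV2 out;
  out.sensorId    = in.sensorId;
  out.temperature = in.temperature;
  out.humidity    = in.humidity;
  return out;
}
```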

Transmit Data

Transmitting the data is the reason we started this journey. Transmission of data is simple in concept, but in practice there are many potential pitfalls. The problems that must be handled depend on the medium used to transfer the data. Fortunately, many libraries have already been written to tackle these issues. Therefore, my primary focus will be to prepare the data for use with an API designed to transfer serialized data. Because of this, it will most likely be necessary to research a few of these APIs to be sure that our library can be integrated with relative ease.

Common Mistakes

We have identified the primary goals of our library. However, I would also like to take into account experience gained from similar projects. If I don't have personal experience related to a project, I at least try to heed the wisdom offered to me by others. I can always decide later whether the advice is useful or relevant to my situation. In this particular situation I have both made these mistakes myself and found and fixed similar bugs caused by others. I would like to do what I can to keep these issues from appearing in the future.

Byte Order Conversions

Byte order conversion is necessary when data is transferred between two machines with different endianness. As long as your application or library will only run on a single platform type, this should not be a concern. However, because software tends to outlive its original purpose, I would not ignore this issue. Your application will work properly on the original platform, but if it is ever run across two different types of platforms, the issues will begin to appear.

Standard convention is to convert numbers to Network byte order (Big Endian format) when transferring over the wire. Data extracted from the wire should then be converted to Host byte order. For Big Endian systems the general operation is a no-op, and the work really only needs to be performed for Little Endian platforms. A few functions have been created to minimize the amount of duplicate code that must be written:

```cpp
// Host to Network Conversion
unsigned short htons(unsigned short hostShort);  // 16-bit
unsigned long  htonl(unsigned long hostLong);    // 32-bit

// Network to Host Conversion
unsigned short ntohs(unsigned short netShort);   // 16-bit
unsigned long  ntohl(unsigned long netLong);     // 32-bit

// No consistent and portable implementations exist for
// 64-bit integers, float and double values.
// However, it is important that these numbers
// be converted as well.
```
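Since the standard functions stop at 32 bits, one portable approach (a sketch, not a standard API) is to produce the network-order bytes with shifts, which behaves the same on big- and little-endian hosts. Doubles can reuse it by copying their bit pattern into a same-sized integer with memcpy; this assumes both endpoints use the same floating-point format (typically IEEE 754). Floats work the same way with a 32-bit integer. Write64, Read64, WriteDouble and ReadDouble are hypothetical names.

```cpp
#include <cstdint>
#include <cstring>

// Write a 64-bit value to 'out' in network (big-endian) byte order.
void Write64(std::uint64_t value, unsigned char* out)
{
  for (int i = 7; i >= 0; --i)
  {
    out[i] = static_cast<unsigned char>(value & 0xFF);  // lowest byte goes last
    value >>= 8;
  }
}

// Read a 64-bit value stored in network byte order.
std::uint64_t Read64(const unsigned char* in)
{
  std::uint64_t value = 0;
  for (int i = 0; i < 8; ++i)
    value = (value << 8) | in[i];                       // most significant byte first
  return value;
}

// Doubles: transfer the bit pattern through a same-sized integer.
// Assumes both endpoints use the same (IEEE 754) double format.
static_assert(sizeof(double) == sizeof(std::uint64_t), "unexpected double size");

void WriteDouble(double value, unsigned char* out)
{
  std::uint64_t bits;
  std::memcpy(&bits, &value, sizeof(bits));
  Write64(bits, out);
}

double ReadDouble(const unsigned char* in)
{
  std::uint64_t bits = Read64(in);
  double value;
  std::memcpy(&value, &bits, sizeof(value));
  return value;
}
```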

The issue appears when the data you transfer uses number fields with two or more bytes and the correct endianness is not used for the transfer. The symptoms range from subtle, such as incorrect numbers being received, to immediate and apparent, such as the program or thread crashing. To further obscure the problem, it is quite common for the correct conversion functions to be used in most places, while a single value is converted incorrectly in one of potentially hundreds of uses of these functions. During maintenance this often occurs when the size of a field is changed but the conversion function used to swap the byte order before transport or interpretation is not.
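One way to remove the "changed the field size but not the conversion" mistake is to let the compiler select the conversion from the field's type. The ToNetwork overloads and the Header message below are a hypothetical sketch that assumes POSIX <arpa/inet.h> (Windows declares the same functions in <winsock2.h>). If a field changes from uint16_t to uint32_t, the call site stays the same and the matching conversion is chosen automatically.

```cpp
#include <arpa/inet.h>   // htons, htonl (POSIX)
#include <cstdint>

// Overloads keyed on the exact fixed-width type of the field.
inline std::uint8_t  ToNetwork(std::uint8_t value)  { return value; }
inline std::uint16_t ToNetwork(std::uint16_t value) { return htons(value); }
inline std::uint32_t ToNetwork(std::uint32_t value) { return htonl(value); }

// Hypothetical message; changing a field's type requires no
// change to the conversion calls below.
struct Header
{
  std::uint16_t length;
  std::uint32_t sequence;
};

Header HeaderToNetwork(const Header& host)
{
  Header net;
  net.length   = ToNetwork(host.length);
  net.sequence = ToNetwork(host.sequence);
  return net;
}
```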

Abuse of the Type System

Serializing data between different processes requires a predefined agreement, a protocol, that dictates what should be sent and received. Data that is read from any source should be questioned and verified before it is accepted and further processed. This is true whether the source is a network communication stream, a file, or especially user input. The compiler cannot statically enforce the type information for the data structures we work with until we verify the data and put it back into a well-defined and reliable type. More often than not, I have observed implementations that are content to pass around pointers to buffers and simply trust the contents encoded in the buffer.

Here is a very simple example to illustrate the concept. An incoming message is received and assumed to be one of the types of messages that can be decoded by the switch statement. The first byte of the message indicates the type of message, and from there the void* is blindly cast to whichever of the 256 possible message types the first byte indicates.

```cpp
void ProcessMsg(void* pVoid)
{
  if (!pVoid)
  {
    return;
  }

  char* pType = (char*)pVoid;
  switch (*pType)
  {
  case k_typeA:
    ProcessMessageA((MessageA*)pVoid);
    break;
  case k_typeB:
    ProcessMessageB((MessageB*)pVoid);
    break;
  // ...
  }
}
```

Any address could be passed into this function, which would then be interpreted as if it were a valid message. This particular problem cannot be completely eliminated simply because eventually the information is reduced to a block of encoded bytes. However, much can be done to both improve the robustness of the code as well as continue to provide convenience to the developer serializing data for transport. It is important that we keep as much type information around through as much of the code as possible. When the type system is subverted, even more elusive bugs can creep into your system. It is possible to create code that appears to work correctly on one system but becomes extremely difficult to port to other platforms, and although the program works on the original system, it may be running at a reduced performance level.
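Here is a sketch of a more defensive shape, under a few assumptions: the caller passes the buffer length along with the pointer, each message type has a fixed size, and the bytes are copied into a properly typed (and therefore properly aligned) object before any field is read. MessageA, MessageB, the type codes, the handlers and Decode are hypothetical placeholders for illustration.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical message types; the first byte is the type code.
const std::uint8_t k_typeA = 1;
const std::uint8_t k_typeB = 2;

struct MessageA { std::uint8_t type; std::uint8_t payload[3]; };
struct MessageB { std::uint8_t type; std::uint8_t payload[7]; };

// Placeholder handlers; a real handler would act on the message.
bool ProcessMessageA(const MessageA& msg) { return msg.type == k_typeA; }
bool ProcessMessageB(const MessageB& msg) { return msg.type == k_typeB; }

// Copy the bytes into a typed object only after the length is verified.
template <typename T>
bool Decode(const unsigned char* buffer, std::size_t length, T& msg)
{
  if (length != sizeof(T))
    return false;
  std::memcpy(&msg, buffer, sizeof(T));
  return true;
}

// Returns false for null, truncated, oversized or unknown messages.
bool ProcessMsg(const unsigned char* buffer, std::size_t length)
{
  if (buffer == nullptr || length == 0)
    return false;

  switch (buffer[0])
  {
  case k_typeA:
  {
    MessageA msg;
    return Decode(buffer, length, msg) && ProcessMessageA(msg);
  }
  case k_typeB:
  {
    MessageB msg;
    return Decode(buffer, length, msg) && ProcessMessageB(msg);
  }
  default:
    return false;   // unknown type: reject rather than guess
  }
}
```

The buffer is still a block of encoded bytes, but from Decode onward the rest of the code works with a real MessageA or MessageB, so the compiler can once again enforce the type.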

Properly Aligned Memory Access

One of the key features of C++ is its ability to let us develop at a high layer of abstraction or drop down and code right on top of the hardware. This allows it to run efficiently in all types of environments. Unfortunately, when you peek below the abstractions provided by the language, you become responsible for details that the language normally handles for you. Memory alignment restrictions are one such detail that the language often hides. When these rules are broken, the best you can hope for is decreased performance; at worst, the processor will trigger an unaligned memory access exception.

Generally, a processor requires an address to be aligned to the size of the data being read. By definition, reading a single byte is always aligned. However, a 16-bit (2-byte) value must be located at an address that is divisible by 2. Similarly, a 32-bit (4-byte) value must be aligned at an address divisible by 4. This rule is not absolute, because processors perform memory access in different ways. Many processors will automatically split a misaligned 4-byte read into a series of smaller single- or double-byte operations and combine the results into the expected 4-byte value. This convenience comes at a cost in efficiency. If a processor does not handle misaligned access, an exception will be raised.
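The rule can be written as a simple check: an address is suitably aligned for a type T when it is a multiple of alignof(T). The IsAligned helper below is a hypothetical sketch; it only inspects the address and never reads through the pointer.

```cpp
#include <cstddef>
#include <cstdint>

// True when 'address' is a multiple of the required alignment of T.
template <typename T>
bool IsAligned(const void* address)
{
  return reinterpret_cast<std::uintptr_t>(address) % alignof(T) == 0;
}
```

For example, IsAligned<std::uint32_t>(buffer + 2) is false for a 4-byte-aligned buffer on a platform where alignof(std::uint32_t) is 4, which is exactly the situation the examples below run into.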

This issue often confounds developers when they first run into it: "It works on system A; why does it crash on system B?" There is nothing syntactically wrong with the code, and even a thorough inspection would not reveal any glaring problems. I demonstrate two common implementations that I have run across when working with serialized data communications that can lead to incorrect data or a misaligned memory access violation.

Both examples use this structure definition to provide simplified access to the fields of the message. The structure is purposely designed so that, if its fields were packed end to end, field2 would not be aligned on a 4-byte boundary:

```cpp
// Offsets assume the fields are packed end to end
// and that unsigned long is 4 bytes.
struct MsgFormat
{
  unsigned short field1;  // offset 0
  unsigned long  field2;  // offset 2
  unsigned char  field3;  // offset 6
};  // Total Size: 7 bytes
```

Blind Copy

The code below assumes that the MsgFormat structure is laid out in memory exactly as the fields would be if you added up the size of each field and offset it from the beginning of the structure. Note also that this example ignores byte-order conversion.

```cpp
#include <cstring>

// Receive data...
const size_t k_size = sizeof(MsgFormat);
char buffer[k_size];

// Use memcpy to transfer the data
// into the structure for convenience.
MsgFormat packet;
::memcpy(&packet, buffer, k_size);

short value_a = packet.field1;

// field2 is read from offset 4 of the padded structure,
// but the sender wrote it at offset 2 of the buffer,
// so the expected value is likely not held in field2.
long value_b = packet.field2;
```

The problem is that the compiler is allowed to take certain liberties with the layout of data structures in memory in order to produce optimal code. This is usually done by adding padding bytes throughout the structure so that the individual data fields are optimally aligned for the target architecture. This is what the structure will most likely look like in memory, assuming a 4-byte unsigned long:

```cpp
struct MsgFormat
{
  unsigned short field1;   // offset 0
  unsigned short padding;  // offset 2 (inserted by the compiler)
  unsigned long  field2;   // offset 4
  unsigned char  field3;   // offset 8
                           // offsets 9-11: trailing padding
};  // Total Size: 12 bytes
```
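You do not have to guess at the layout; offsetof and sizeof report what the compiler actually did. This sketch uses fixed-width types (because unsigned long is 8 bytes on many 64-bit platforms) and the hypothetical name MsgFormatFixed. On common x86 and ARM compilers it typically reports field2 at offset 4 and a total size of 12, but the exact numbers are implementation-defined.

```cpp
#include <cstddef>
#include <cstdint>

// Same shape as MsgFormat, but with explicit field widths.
struct MsgFormatFixed
{
  std::uint16_t field1;
  std::uint32_t field2;
  std::uint8_t  field3;
};

// Sum of the field sizes: what a "blind copy" assumes.
const std::size_t k_packedSize =
    sizeof(std::uint16_t) + sizeof(std::uint32_t) + sizeof(std::uint8_t);

// Offsets and size as actually laid out by this compiler.
const std::size_t k_field2Offset = offsetof(MsgFormatFixed, field2);
const std::size_t k_field3Offset = offsetof(MsgFormatFixed, field3);
const std::size_t k_actualSize   = sizeof(MsgFormatFixed);
```

Comparing k_actualSize with k_packedSize in a unit test is a cheap way to catch a structure whose in-memory layout does not match the wire format it is being copied from.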

Dereferencing Unaligned Memory

This example demonstrates a different way to trigger an unaligned memory access violation. Byte-order conversion was not handled in the previous example, so let's rectify that:

```cpp
// Receive data...
const size_t k_size = sizeof(MsgFormat);
char buffer[k_size];

// Appropriately convert the data
// to host order before assigning it
// into the message structure.
MsgFormat packet;

packet.field1 = ntohs(*(unsigned short*) buffer);

// The pointer assigned to p_field2
// is aligned on a 2-byte boundary rather than 4 bytes.
// Dereferencing this address will cause an
// access violation on architectures that
// require 4-byte alignment.
char* p_field2 = buffer + sizeof(unsigned short);
packet.field2 = ntohl(*(unsigned long*) p_field2);
```
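A portable way to read such a field is to copy its bytes into a properly aligned local variable with memcpy, which has no alignment requirement, and only then convert the byte order. ReadU32 is a hypothetical helper name; the sketch assumes POSIX <arpa/inet.h> for ntohl (Windows declares it in <winsock2.h>).

```cpp
#include <arpa/inet.h>   // ntohl (POSIX)
#include <cstdint>
#include <cstring>

// Read a network-order 32-bit value from any address, aligned or not.
std::uint32_t ReadU32(const unsigned char* p)
{
  std::uint32_t networkValue;                            // properly aligned local
  std::memcpy(&networkValue, p, sizeof(networkValue));   // byte copy: safe at any address
  return ntohl(networkValue);                            // then convert to host order
}
```

Modern optimizing compilers typically turn a small fixed-size memcpy like this into a single load on hardware that tolerates misalignment, so the safe version usually costs nothing where the unsafe version would have worked anyway.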

Even more insidious memory alignment issues can appear when message definitions become large sets of nested structures. In many ways this is a good design decision; it helps organize and abstract groups of data. However, care must be taken to ensure that adding fields to a deeply nested child does not cause memory alignment issues. One must always be aware that pointers passed into a function may not be properly aligned. In practice, I think this responsibility should fall upon the caller; misalignment is rare, but it is a very real possibility.

One final practice that can cause future pain is embedding pointers in the message structure. It is common to see offset fields used to indicate the location of a block of data within a message. This can be done safely, as long as validity checks are made along the way.
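As a sketch of those validity checks, assume a hypothetical message whose header carries an offset and a length describing a data block inside the same buffer. LocateBlock is an illustrative name. The bounds test is written as a subtraction so that a huge offset or length cannot overflow and wrap around into an apparently valid range.

```cpp
#include <cstddef>

// Returns a pointer to the described block, or nullptr if the
// offset/length pair does not lie entirely within the buffer.
const unsigned char* LocateBlock(const unsigned char* buffer,
                                 std::size_t bufferSize,
                                 std::size_t offset,
                                 std::size_t length)
{
  if (buffer == nullptr || offset > bufferSize)
    return nullptr;
  if (length > bufferSize - offset)   // cannot underflow: offset <= bufferSize
    return nullptr;
  return buffer + offset;
}
```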

Message Buffer Management

Much of the code that I have worked with did a fine job of managing buffers. Allocations are freed properly. Buffer lengths are verified. However, it appears that many of the misused techniques I described above are actually driven by developers' attempts to reduce memory allocations and eliminate copying. Therefore, another goal that I will try to integrate into Alchemy is efficient and flexible buffer management: specifically, minimal allocations, minimal copying of buffers, and robust memory management.

Summary

We have identified a handful of common problems that we would like to address with the Network Alchemy library. The next step is to explore some strategies to determine what could feasibly solve these problems in a realistic and economical way. I will soon add an entry that describes the unit-test framework that I prefer, as well as the tools I have developed over the years to improve my productivity when developing in an independent test harness.

using and namespace are two of the most useful C++ keywords when it comes to simplifying syntax and clarifying the intent of your code. You should understand the value and flexibility these constructs add to your software and its maintenance. The benefits are realized in the organization, readability, and adaptability of your code. Integrating 3rd party libraries, combining code from different teams, and even simplifying the names of constructs in your programs are all situations where these two keywords will help. Beware: these keywords can also cause unnecessary pain when used incorrectly. Keep a few very simple rules in mind, and you can avoid these headaches.

The Compiler and Linker

At its core, The Compiler is an automaton that translates our mostly human-readable code into a form understood by the target platform. These programs are works of art in and of themselves. They have grown very complex to address our complex needs, both in our languages and in the advances in computing over the last few decades. For C/C++, the compiled module is not yet capable of running on the computer; the linker needs to get involved first.

The compiler creates a separate compiled module for each source file (.c, .cpp, .cc) in your program. Each compiled module contains a set of symbols that are used to reference the code and data in your program. The symbols created in these modules will be one of three types:

- Internal Symbol: An element that is completely defined and used internally in the module.

- Exported Symbol: An element that is defined internally to this module and advertised as accessible to other modules.

- Imported Symbol: An element that is used within a module, but whose definition is contained within another module. This is indicated with the extern qualifier.
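The three kinds of symbols can be sketched in a single source file. In a real program the extern declaration would normally live in a header and the definition in a different .cpp file; here both appear together so the example stays self-contained. All names are hypothetical.

```cpp
// Imported symbol: declared here, defined elsewhere. The linker
// resolves it (in this sketch the definition happens to be below).
extern int g_sharedCounter;

// Internal symbols: an unnamed namespace (or 'static') gives them
// internal linkage, so no other module can see or collide with them.
namespace
{
  int s_calls = 0;

  int Square(int value) { return value * value; }
}

// Exported symbols: external linkage, visible to other modules.
int g_sharedCounter = 0;

int SquareAndCount(int value)
{
  ++s_calls;
  ++g_sharedCounter;
  return Square(value);
}

int CallCount() { return s_calls; }
```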

Now it's time for The Linker to take each individual module and link them together, similar to stitching together the individual patches in a quilt. The Linker combines all of the individual modules, resolving any dependencies that were indicated by The Compiler. If a module is expecting to import a symbol, the linker will attempt to find that symbol in the other modules.

If all works out well, every module that is expecting to import a symbol will now have a location to reference that symbol. If a symbol cannot be found, you will receive a linker error indicating "Missing Symbol". Alternatively, if a symbol is defined in multiple modules, The Linker will not be able to determine which definition is the correct one to associate with the importing module. The Linker will issue a "Duplicate Symbol" error.

Namespaces

The duplicate symbol linker error can occur for many reasons, such as:

- A function is implemented in a header file without the inline keyword.

- A global variable or function with the same name is found in two separate source code modules.

- Adding a 3rd party library that defines a symbol in one of its modules that matches a symbol in your code.

The first two items on the list are relatively easy to fix. Simply change the name of your variable or function. Generally a convention is adopted, and all of the names of functions and variables end up with a prefix that specifies the module. Something similar to this:

```cpp
// HelpDialog.cpp

int g_helpDialogId;
int g_helpTopic;
int g_helpSubTopic;

int HelpCreateDialog()
{
  // ...
  return 0;  // placeholder result
}
```

This solution works. However, it's cumbersome, it won't solve the issue of a 3rd party library that creates the same symbol, and, finally, it's simply unnecessary in C++. Place these declarations in a namespace. This will give the code a context that helps make your symbols unique:

```cpp
// HelpDialog.cpp

namespace help
{

int g_dialogId;
int g_topic;
int g_subTopic;

int CreateDialog()
{
  // ...
  return 0;  // placeholder result
}

} // namespace help
```

The symbols in the code above no longer exist in the globally scoped namespace. To access the symbols, the name must be qualified with help::, similar to referencing a static member of a class. Yes, it is still entirely possible for a 3rd party library to use the same namespace. Namespaces can be nested. Therefore, to avoid a symbol collision such as this, place the help namespace into a namespace specified for your library or application:

```cpp
namespace netlib
{
namespace help
{

// ... Symbols, Code, ...

} // namespace help
} // namespace netlib
```
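The following sketch, with hypothetical symbols filled in for the "// ... Symbols, Code" placeholder, shows how the nesting keeps the two libraries apart: a 3rd party library that also chose the name help can define its own CreateDialog, and both functions coexist because their fully qualified names differ.

```cpp
#include <string>

// Our library's symbols, nested inside our own namespace.
namespace netlib
{
namespace help
{
  int g_topic = 0;

  std::string CreateDialog()
  {
    return "netlib help dialog";
  }
} // namespace help
} // namespace netlib

// A hypothetical 3rd party library that also chose the name "help".
namespace help
{
  std::string CreateDialog()
  {
    return "3rd party help dialog";
  }
} // namespace help

// Outside the namespaces, each name is reached through its full path.
std::string OpenHelp(int topic)
{
  netlib::help::g_topic = topic;
  return netlib::help::CreateDialog();
}
```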

Namespaces Are Open

Unlike a class definition, a namespace's declaration is open. This means that multiple blocks can be defined for a namespace, and the combined set of declarations will live in a single namespace. Multiple blocks can appear in the same file, and separate blocks can be in multiple files. It is possible for a namespace block to spread across two library modules; however, the separate libraries would need to be compiled by compilers that use the same name-mangling algorithm. For those who are unaware, name-mangling is the term used to describe the adornments the C++ compiler gives to a symbol to support features such as function overloading.

```cpp
#include <string>

namespace code
{
namespace detail
{
  // Forward declare support function symbols
  int VerifySyntax(const std::string &path);
}

// Main implementation

namespace detail
{
  // New symbols can be defined and added
  bool has_error = false;

  // Implement functions
  int VerifySyntax(const std::string &path)
  {
    // ...
    return 0;  // placeholder result
  }

} // namespace detail
} // namespace code
```

The Unnamed Namespace

The static keyword is used in C to declare a global variable or a function and limit its scope to the current source file. This method is also supported in C++ for backward compatibility. However, there is a better way to hide access to globally scoped symbols: use the unnamed namespace. This is simply a namespace that is given a unique name known only to the compiler. To reference symbols in this namespace, access them as if they lived in the global namespace. Each module is given its own unnamed namespace; therefore, it is not possible to access unnamed namespace symbols defined in a different module.

```cpp
namespace // unnamed
{
  int g_count;
} // namespace (unnamed)

// Access a variable in the unnamed namespace
// as if it were defined in the globally scoped namespace
int GetCount()
{
  return g_count;
}
```

The code above is an example for how to protect access to global variables. If you desire a different source module to be able to access the variable, create a function for other modules to call to gain access to the global variable. This helps keep control of how the variable is used, and control how the value of the variable is changed.
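A minimal sketch of that accessor approach, assuming a hypothetical counter whose valid range is 0 to 100. Other modules would only see the declarations of GetCount and SetCount (typically from a header); g_count itself stays in this module's unnamed namespace, so every change has to pass through SetCount.

```cpp
namespace // unnamed: these symbols are private to this module
{
  int g_count = 0;
  const int k_maxCount = 100;  // hypothetical upper limit
} // namespace (unnamed)

// Read access for other modules.
int GetCount()
{
  return g_count;
}

// Controlled write access: this module decides which values are valid.
bool SetCount(int value)
{
  if (value < 0 || value > k_maxCount)
  {
    return false;  // reject the change; g_count is left unchanged
  }
  g_count = value;
  return true;
}
```

Because the validation lives in one place, a rule such as the upper limit can change later without touching any of the modules that call SetCount.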

Alias a Namespace

Namespace names follow the same naming rules as functions and variables. Potentially long namespace names could be created to properly and uniquely describe a set of code. For example:

```cpp
namespace CodeOfTheDamned
{
namespace network
{
  enum Interface
  {
    k_type1 = 1,
    k_type2,
    k_type3
  };

  class Buffer
  {
    // ...
  };

} // namespace network
} // namespace CodeOfTheDamned
```

The fully scoped names that these definitions create could become quite cumbersome to deal with.

```cpp
CodeOfTheDamned::network::Interface intf = CodeOfTheDamned::network::k_type1;

if (CodeOfTheDamned::network::k_type1 == intf)
{
  CodeOfTheDamned::network::Buffer buffer;
  // ...
}
```

Compare and contrast this with the code that did not use namespaces:

```cpp
Interface intf = k_type1;

if (k_type1 == intf)
{
  Buffer buffer;
  // ...
}
```

Possibly the top item on my list for creating maintainable software is to make existing declarations easy to understand and use. Typing a long, cumbersome prefix is not easy. I like to keep my namespace names between 2 and 4 characters long. Even so, the effort required to specify a fully qualified name becomes painful again once you hit the second nested namespace; 3 namespaces or more is just sadistic. Enter the namespace alias. This syntax allows you to declare an alias for an existing namespace that may be simpler to use. For example:

```cpp
// Namespace Alias Syntax
namespace cod = CodeOfTheDamned;
namespace dnet = cod::network;

// Example of new usage
if (dnet::k_type1 == intf)
{
  dnet::Buffer buffer;
  // ...
}
```

This is much nicer: simpler and more convenient. We can do better, though. There is one other keyword in C++ that helps simplify the usage of namespaces when organizing your code: using.

Using

using allows a name that is declared in a different declarative region to be introduced into the declarative region in which the using appears. More simply stated, using adds a name from some other namespace to the scope where the using is declared.

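```cpp
#include <iostream>

// Stand-in for the Buffer declared in the earlier example
namespace CodeOfTheDamned { namespace network { class Buffer { }; } }

// Syntax for using
// Bring a single item into this namespace
using std::cout;
using CodeOfTheDamned::network::Buffer;

int main()
{
  // Now these symbols are in this namespace as well as their original namespace:
  cout << "Hello World";
  Buffer buffer;
}
```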

Using with Namespaces

The ability to bring a symbol from a namespace far, far away is greatly simplified with using. using can also bring the contents of an entire namespace into the current declarative region. However, this particular usage should be used sparingly to avoid defeating the purpose of namespaces, because the contents of the two namespaces are effectively combined. One absolute rule that I would recommend for your coding guidelines is to prohibit the use of using in header files to bring namespaces into the global namespace, because every file that includes the header inherits that decision.

```cpp
#include <iostream>

// Stand-in for the Buffer declared in the earlier example
namespace CodeOfTheDamned { namespace network { class Buffer { }; } }

// Syntax for using
// Bring an entire namespace into this scope
using namespace std;
using namespace CodeOfTheDamned;

int main()
{
  // The entire std namespace has been brought into this scope
  cout << "Hello World" << endl;
  // The CodeOfTheDamned namespace was brought to us.
  // However, qualifying with the network sub-namespace
  // will still be required.
  network::Buffer buffer;
}
```

My preferred use of using is a simple declaration at the top of a function. This allows me to quickly see which symbols I am pulling in to use within the function, and it simplifies the code at the same time. Only the specific symbols I intend to use are brought into the scope of the function; I limit what is imported to the set of symbols that are actually used:

```cpp
#include <algorithm>
#include <vector>

// Assumed list type; the example does not define NumberList
typedef std::vector<int> NumberList;

// using within a function definition
// Forward declaration (defined elsewhere in the program)
void Process(int number);

void ProcessList(NumberList &numbers)
{
  using std::for_each;

  // Preparations ...

  for_each(numbers.begin(),
           numbers.end(),
           Process);
  // ...
}
```

using Within a Class

using can be used within a class declaration. Unfortunately, it cannot be used to bring namespace definitions into the class scope. Within a class scope, using brings definitions from a base class into the scope of a derived class without requiring explicit qualification. Another feature to note is that a derived class can change the accessibility of a base class declaration with using.

```cpp
// Syntax for using
// Bring a symbol from a base class into this class scope.
class Base
{
public:
  int value;

  // ...
};

class Derived
  : private Base
{
public:
  // Base::value will continue to be accessible
  // in the public interface, even though all
  // of the other Base class members are hidden
  // by the private inheritance.
  using Base::value;

};
```

This feature becomes necessary when working heavily with templates. If you have a class template that derives from a base class that depends on a template parameter, the compiler will not look in that base class when it resolves an unqualified name. This errs on the side of caution and generates errors sooner in the compile process rather than later. The using keyword is one way to tell the compiler that it can find a symbol in the base template type.
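Here is a small sketch of that situation, using hypothetical Base and Derived class templates. Because Base<T> depends on the template parameter T, the unqualified name value inside Derived<T> is not looked up in Base<T> unless something tells the compiler to do so. The using-declaration is one such hint; writing this->value or Base<T>::value at each use would also work.

```cpp
template <typename T>
class Base
{
public:
  Base() : value() { }  // value-initialize, e.g. 0 for int
  T value;
};

template <typename T>
class Derived : public Base<T>
{
public:
  // Without this line, the unqualified name "value" in Set() would not
  // be found: Base<T> depends on T, so it is not searched for
  // unqualified names when the template is first parsed.
  using Base<T>::value;

  void Set(const T &v)
  {
    value = v;  // resolves to Base<T>::value
  }
};
```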

Summary

using and namespace are two very useful declarations to be aware of in C++ to help create a balance between portability, adaptability and ease of coding. The ability to define namespaces allows code symbols from separate libraries to be segregated to prevent name collisions when using libraries developed by multiple development teams. The keyword using allows the developer to bring specific elements from a namespace into the current declarative scope for convenience.

A little care must be taken to ensure that over-zealous use of the using keyword does not undermine any organizational structure created with namespace. However, with the introduction of a few conventions to your coding standards, the effort required to properly organize your code into logical units that avoid name collisions can be kept to a minimum. The value of investing in a namespace structure increases with the likelihood that your code will be ported across multiple platforms, use 3rd party libraries, or be sold as a library. I believe the results are well worth the little effort required.

Code of The Damned

This is a journal for those who feel they have been damned to live in a code base that has no hope. However, there is hope. Hope comes in the form of understanding how entropy enters the source code you work in, and using discipline, experience, tools and many other resources to keep the chaos in check. Even software systems with the best-designed plans and solid implementations can devolve into a ball of mud as the system is maintained.

For more details, read the rest of the Introduction.

Summary:

Up to this point I have primarily written about general topics to clarify current definitions, purposes, or processes in use. There are three essays that I think are particularly important to bring to your attention. Judging by all of the positive feedback I have received regarding these entries, these topics appear to be on many other minds as well:

Many of the topics that I discuss are agnostic to which language and tools you use. However, the majority of the examples I post on this site are in C++. This is the language I am most proficient with, and the one in which I can demonstrate my intentions most clearly. Some of the essays will be written specifically for C++ developers. I would like to mention these two C++ essays as well, because they are important for what I plan to focus on for the next few months. I would like to make sure that you have some basic context and knowledge regarding these topics:

Learn by Example

I am an ardent believer that good programming examples lead to better quality code. I have written quite a few articles and given many presentations related to better software development. It is difficult to create a meaningful and relevant example in 10 lines of code. This is especially true if you want to avoid using foo and bar. Limited space is the problem with so many books and articles written about programming topics.

There are two types of programming reference material that are difficult to consume and apply to meet your own needs:

1) Small examples that lack context

These resources may teach a concept or development pattern. A small program is generally provided to demonstrate the concept. However, no context is provided for how to effectively apply the construct. Consider the classic example used to demonstrate C++ template meta-programming: a math problem that can be solved recursively, such as factorial or Fibonacci.

2) Simplified examples built with a framework

One way to concentrate more information into a smaller space, especially for print, is to encapsulate complexity. Unless the book is specifically written about a particular framework such as MFC or Qt, the complexity will be encapsulated with a framework developed by the author. This allows the samples to be simplified, and new applications can be written with the same framework. I think frameworks are a very important tool to consider to improve the quality of your code. However, it may not always be possible to build upon the framework provided by the author because of various restrictions. Therefore, a developer is left digging through the implementation of the framework to see how the knowledge gained from that resource can be applied elsewhere.

The Road Ahead

I intend to develop a small library in C++ over the next few months. Yes, this is with good intentions, and this will be another author-developed library. However, my goal is not to teach you how to use a technology built upon my own framework. I will be demonstrating how to build a reliable and maintainable library or framework. In each entry related to this project I will explain what, how and why; occasionally it may be prudent to also explain when and where.

I will continue to post entries that clarify general concepts and topics that concern a more general audience. These entries will be intermixed with the entries that further the progress of the library I will be developing. Before I can implement a portion of the library, it may be necessary for me to introduce a new concept. In this situation I will create an educational entry, followed by an entry that applies the concept in the development of the library. I believe this applied context is often what is missing when we learn something, and we are left with no clue as to how it is supposed to be applied, similar to learning algebra in school.

The Library

I created this site to document and teach how better software can be written the first time, even if all of the requirements are not known at design time. Software should be flexible; that is why it is so valuable. My ultimate goal is to help developers write code that is correct, reliable, robust and, most of all, maintainable. With all of these thoughts in mind, I think the library that I build should solve a problem that appears over and over. I would also like the resulting library to be simple to use, and therefore to demonstrate good design principles as well as techniques to help the code retain its architectural integrity over time.

I am going to build a library that is comprised of a set of smaller tools for network communication abstraction. This is not another wrapper for sockets; there are plenty of implementations to choose from. I mean the little bit of error-prone logic that occurs right before and after any sort of message passing occurs in a program. Here is a list of goals for the desired library:

- Expressive or Transparent Usage Syntax

- Host / Network Byte Order Management

- Handles byte-alignment access

- Low overhead / Memory Efficient

- Typesafe

- Portable

This is a modest list. However, that is what makes writing code in this area of an application so deceptively difficult. The devil is in the details. Unless you run your program on different platform types, you will not run into byte order issues. Tricks that developers seem to get away with, such as stuffing structures into raw buffers and then reading them directly back out, may appear to work until you use a different compiler, or even upgrade the CPU.
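To illustrate the alternative to stuffing a structure into a buffer, here is a minimal sketch that writes fields in network byte order (big-endian) one byte at a time with shifts. It is only a sketch under stated assumptions, not the library described here: the helper names and the Header layout are hypothetical, and it uses the C++11 <cstdint> header for the fixed-width types. Because each byte is computed arithmetically, the output does not depend on the host's byte order, the compiler's struct padding, or alignment rules.

```cpp
#include <cstddef>
#include <cstdint>

// Write value into buf[0..3], most significant byte first.
void WriteU32(unsigned char *buf, std::uint32_t value)
{
  buf[0] = static_cast<unsigned char>((value >> 24) & 0xFF);
  buf[1] = static_cast<unsigned char>((value >> 16) & 0xFF);
  buf[2] = static_cast<unsigned char>((value >> 8) & 0xFF);
  buf[3] = static_cast<unsigned char>(value & 0xFF);
}

// Read four bytes in network byte order back into a host value.
// Byte-by-byte access has no alignment requirement, unlike casting
// buf to a std::uint32_t pointer and dereferencing it.
std::uint32_t ReadU32(const unsigned char *buf)
{
  return (static_cast<std::uint32_t>(buf[0]) << 24)
       | (static_cast<std::uint32_t>(buf[1]) << 16)
       | (static_cast<std::uint32_t>(buf[2]) << 8)
       |  static_cast<std::uint32_t>(buf[3]);
}

struct Header  // hypothetical message header
{
  std::uint16_t type;
  std::uint32_t length;
};

// Serialize field by field: always 6 bytes on the wire, with no
// padding, regardless of how the compiler lays out Header in memory.
std::size_t Pack(const Header &h, unsigned char *buf)
{
  buf[0] = static_cast<unsigned char>((h.type >> 8) & 0xFF);
  buf[1] = static_cast<unsigned char>(h.type & 0xFF);
  WriteU32(buf + 2, h.length);
  return 6;
}
```

By contrast, a memcpy of a Header into the buffer would typically copy padding bytes between type and length and would emit the host's native byte order, which is exactly the kind of trick that breaks when the compiler or CPU changes.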

The Approach

There are plenty of issues that we will tackle through the development of this library. I will include unit tests for each addition. This will give me an opportunity to use the library as it is in development, rather than waiting until the end to find that what I built was garbage. I generally develop with a TDD approach because it helps me discover what is necessary. I rarely consider what is unnecessary because I don't usually run into it with TDD. This will help create a minimal and complete library.

The solution will largely be developed with template meta-programming. I would like to incorporate C++ 11 feature support where possible, but the primary target will be any modern compiler with robust support for the C++ 03 standard with TR1 support. If you use Visual Studio, this means VS2008 with SP1 and greater. The features in C++ 11 primarily will make some of the implementation aspects simpler. However, I do like my code to be portable as well as reusable. So when there is a benefit, I will demonstrate an implementation with both versions of C++.

One last thing I plan to do is to provide an implementation of some of the constructs provided in the Standard C++ Library. I will also provide an explanation for why each construct is valuable, and when, how and where to use it. The shared_ptr will be the first construct that I will tackle. Many of the meta-programming constructs that will be needed for this library are provided in the standard library already. Therefore, I will show how some of these constructs are built as well. Understanding how these objects are built will give you a better appreciation for how you can apply the methods to your own projects.

The Schedule

I will make continual progress; however, there will be no fixed schedule. I also have a list of topics that I would like to cover, as well as the initial components that will be required for this library. These are the topics I plan to discuss in the near future, in no particular order:

- std::shared_ptr overview

- C++ namespace / using keywords

- The C++ type system

- Functional Programming with C++

- The <concepts> header file in C++

In the next few weeks I will publish the first module to this library. This will give you a better idea of what to expect from the remainder of the library. I also want to do this to keep a healthy variety of academic, editorial, and practical content.

I would like to clarify the purpose and intention of a unit test for every role even tangentially related to the development of software. I have observed a steady upward trend, over the last 15 years, for the importance and value of automating the software validation process. I think this is fantastic! What I am troubled by is the large amount of misinformation that exists in the attempts to describe how to unit test. I specifically address and clarify the concept of unit testing in this entry.

There is no doubt the Agile Programming methodologies have contributed to the increase in awareness of, content about, and focus on unit tests. The passion and zeal developers gain for these processes is not surprising. Many of these methodologies make our lives easier and our jobs more enjoyable. Many of us like to pass on what we have learned. Unfortunately, there is a large discrepancy in what each person believes is a unit test, and the information that is written regarding unit tests frightens me.

The Definition of a Unit Test

This is the clearest and most succinct definition of a unit test that I have found so far:

A unit test is used to verify a single minimal unit of source code. The purpose of unit testing is to isolate the smallest testable parts of an API and verify that they function properly in isolation.

API Design for C++, p295; Martin Reddy

I would leave it at that, however, I don't think that simple definition of what a unit test is will resolve all of the discrepancies, misunderstandings, and misleading advice that exists. I believe that it will require a little bit of context, and answering a few fundamental questions to ensure everybody understands unit testing and software verification in general.

The Goal of Testing Software

We test software to manage risk. Risk is the potential for a problem to be realized. A lower level of risk implies fewer problems. A patch of ice on the sidewalk is not a problem. It merely creates the risk for someone to slip and fall. The problem is realized when someone travels the path that takes them over the ice, and they slip and fall. Poorly written code is like ice on the sidewalk. It may not exhibit any problems. However, when the right set of inputs sends execution down the path with the ice-like code, a problem may occur.

There are many forms of risk with software. I believe these risks can be categorized into one of the categories below.

Correct Behavior

A dry, safe sidewalk is useless for us to travel on if it will not lead us to our intended destination. Therefore, an important aspect of software verification is to prove correctness. We want to verify that what we wrote does both what we intended and what we expected to create. The computer always does what I instruct it to do, but did I instruct it to do what I intended? We want to verify the software operates as it was designed to function.

Robustness

We want to ensure that our software is robust. Robust software properly manages resources and handles errors gracefully. Software that uses proper resource management is free of memory leaks, avoids deadlock situations, and responsibly manages system resources so the rest of the system can continue to operate properly. Graceful error handling simply means the application does not crash, nor does it continue to operate on invalid data.

The Software Unit Test

Now remember, the goal of a software unit test is to manage risk at the smallest unit possible. The target sizes to consider for a unit of code are single objects, their public member functions and global functions. I think we have covered enough definitions to start to correct misunderstandings that many people have regarding software unit tests. When I say people, I am including all of the roles that have any input or direction as to how the software is developed: Architects, programmers, software testers, build configuration managers, project managers and potentially others.

Unit Tests Do Not Find Bugs

Unit tests verify expected behavior based on what they are created to test. It seems obvious now that I have stated it, right?! It can be so easy to fall into that trap, especially when the word automated is used so freely with "unit test". When the person responsible for the schedule discovers that the tests are not free, they begin to argue that the tests are unnecessary because we have Software Test verify the software before we "Ship It!" It is much more efficient and cost effective to prevent the creation of defects than to try to find them after they have been created. I would like to give some context to where a unit test fits into the overall development process, and how unit tests can improve the predictability of when your product will be ready for release.

One Size Does Not Fit All

It is important to keep in mind that there are many different forms of testing. This holds true whether we are discussing the physical world or the realm of software. Imagine a state-of-the-art television that is on the assembly line. Before the components have made their way to the manufacturing floor, most likely some sort of quality control test was performed to verify these components met specifications. Next, these basic pieces are assembled into larger components, such as the LCD display or the encoder/decoder module. These components may be validated as well. Finally, the television assembly is completed by integrating the larger components into the final system.

The unit test phase is similar to the very first quality control check in the television analogy. The objects and the functions created by the developer are verified, in isolation, for quality and that they meet the specified requirements. Compare this phase to the definition that I presented at the beginning of the essay. These unit tests should verify the smallest unit of testable code possible.
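As a minimal, framework-agnostic sketch of what "the smallest unit of testable code" can look like, here is a test for a single hypothetical global function, Clamp, written with nothing but the standard assert macro. The test depends on no other component, so a failure points directly at Clamp.

```cpp
#include <cassert>

// Hypothetical unit under test: a single global function.
int Clamp(int value, int low, int high)
{
  if (value < low)  { return low; }
  if (value > high) { return high; }
  return value;
}

// Unit test: exercises Clamp in isolation, with no other
// components, I/O, or hardware involved.
void TestClamp()
{
  assert(Clamp(5, 0, 10) == 5);    // inside the range
  assert(Clamp(-3, 0, 10) == 0);   // below the lower bound
  assert(Clamp(42, 0, 10) == 10);  // above the upper bound
  assert(Clamp(0, 0, 10) == 0);    // boundary values
  assert(Clamp(10, 0, 10) == 10);
}
```

In a real project a test framework would replace the bare asserts, but the scope stays the same: one function, verified against its specified behavior before it is integrated into anything larger.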

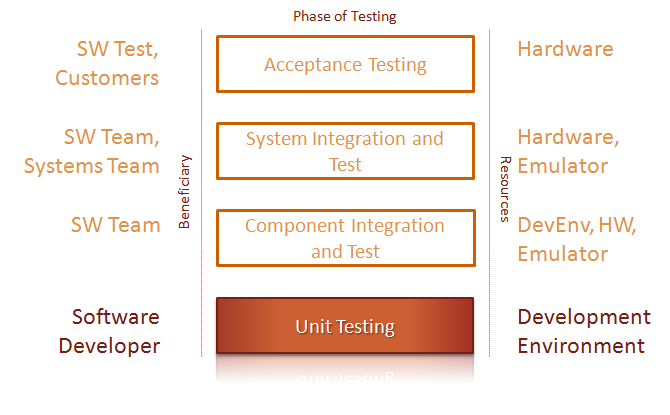

At the moment we are only concerned with unit tests. The graphic below illustrates the different types of testing that I described above. The image correlates the primary beneficiaries and the type of resources that are the most effective for each phase.

Unit Tests Are For the Software Developer

Verifying every individual component of the final television will not guarantee that the final television will meet specifications or even work properly. The same holds true for software and unit tests. Unit tests verify the software building blocks that the programmers will use to build more complex components, and then combine the components into a final system or application.

The unit tests can also be organized and run automatically as part of the build process. Each time the software is built, the unit tests will be run along with any other regression and verification tests that have been put in place. Unit tests will continue to provide value throughout the development lifetime of the software. However, unit tests are the most beneficial to the software developers, because they verify the units of logic in isolation before integration.

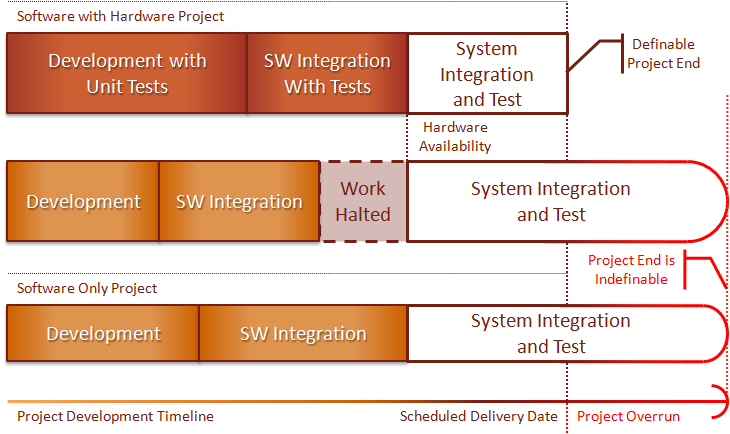

Unit Tests Will Affect the Schedule

The schedule is always a touchy subject, because it is directly tied to the budget, and indirectly tied to profits. The common perception is that adding the extra task of writing a test along with the code, will extend the amount of time needed to complete the project. This would be true if we developed perfect code, did not re-introduce bugs that we have previously fixed, and always had a complete set of requirements at the beginning of the project. Unfortunately, all three of those are rarely true. These are the circumstances where unit tests will reduce the amount of time required from your schedule.

The diagram below is inspired by a highly esteemed engineer I work with. He simply drew a timeline for two different versions of a project, one that develops unit tests, and one that doesn't. While it may be true that developing unit tests will require more development time, unit tests help ensure that the quality level stays constant or increases, but never decreases. With quality checks like this put in place during each phase of development, the schedule will be more deterministic. When the quality is allowed to waver through the development process, the end of the schedule becomes less predictable.

The situations remain similar regardless of the type of project you are creating: a project with hardware, or a software-only product. Unit tests help keep the software portable and adaptable. This makes it more feasible to develop the logic on hardware other than the intended target, or on emulators. This allows dependencies on hardware to be eliminated until the planned final system integration phase. If your hardware is delayed, or limited in supply, software engineers can continue to work. For a software-only project, the end of the schedule is simply more predictable.

The Software Developer Writes the Unit Tests

The programmer that creates the software should also write the unit test. I know what many of you are thinking at this point, "The person that created the product should not be the person to inspect its quality." This is absolutely correct. However, remember, we are simply at the smallest possible scale for code at this point. The code units that we are referring to are not products; they are building blocks for what will become the final product. The Software Test team will develop the test methodologies for Acceptance Testing. Therefore a different group is still responsible for verifying the quality of the product.

A process like Test Driven Development (TDD) requires the same person to write both the code and the tests. Software products of even moderate size are too complex to account for design detail before the software itself is developed. The unit tests become much like a development sandbox in which the engineer can experiment. This part of the process happens regardless. To have a separate person write the tests would impose another restriction on the developer.

The developers are responsible for maintaining the unit tests throughout the lifetime of the product's development. With an entire set of unit tests in place, changes that break expected behavior can be caught immediately. Running the entire set of unit tests before new code is delivered back to the repository should be made a requirement. This makes each developer take responsibility for all of their changes, even if the changes break tests from other units of code. I think the developer who made the change is the most qualified to determine why the other tests broke, because they know what they changed.

Unit Test Frameworks

If you give each developer the direction to "Write unit tests, I don't care how! Just do it!", you will end up with many tests that will generally only be usable by the developer that wrote them. That is why you should select a unit test framework. A unit test framework provides consistency for how the unit tests for your project are written. There are many test frameworks to choose from for just about any language you want to program with, including Ada. Just like programming languages, coding guidelines and caffeinated beverages, almost every programmer has a strong opinion about which test framework is the best. Research what's out there and use the one that meets the needs of your organization.

The framework will provide a consistent testing structure to create maintainable tests with reproducible results. From a product quality and business view-point, those are the most valuable reasons to use a unit test framework. When I am writing code, I think the most valuable reason is a quick and simple way to develop and verify your logic in isolation. Once I know I have it working solidly by itself, I can integrate it into the larger solutions with confidence. I have saved an enormous amount of time during component and integration phases because I was able to pare down the code to search through when debugging issues.
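To make the idea of a consistent structure concrete, here is a deliberately tiny, hypothetical harness. It is not any real framework and lacks most of what real frameworks offer (fixtures, rich assertion macros, test filtering, machine-readable reports), but it shows the core benefit: every test is registered, run, and reported the same way, regardless of who wrote it.

```cpp
#include <cstddef>
#include <iostream>
#include <string>
#include <vector>

// One registered test: a name and a function that returns true on success.
struct TestCase
{
  std::string name;
  bool (*run)();
};

class TestRunner
{
public:
  void Add(const std::string &name, bool (*run)())
  {
    TestCase tc = { name, run };
    m_tests.push_back(tc);
  }

  // Runs every test, prints one uniform line per test plus a summary,
  // and returns the number of failures.
  std::size_t RunAll(std::ostream &out) const
  {
    std::size_t failures = 0;
    for (std::size_t i = 0; i < m_tests.size(); ++i)
    {
      const bool passed = m_tests[i].run();
      if (!passed) { ++failures; }
      out << (passed ? "[PASS] " : "[FAIL] ") << m_tests[i].name << '\n';
    }
    out << (m_tests.size() - failures) << " of " << m_tests.size()
        << " tests passed\n";
    return failures;
  }

private:
  std::vector<TestCase> m_tests;
};

// Hypothetical tests, possibly written by different developers.
bool AdditionWorks()     { return 2 + 2 == 4; }
bool StringConcatWorks() { return std::string("ab") + "c" == "abc"; }
bool DeliberateFailure() { return 1 + 1 == 3; }  // shows a [FAIL] line
```

Adding a test is one call to Add, and every result appears in the same format with a final count, which is what makes results from different developers comparable and reproducible.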

Unit Tests Are an Asset

I would like to emphasize to anyone in software development, unit tests are an asset. They are an extremely valuable asset, almost as valuable as the code that they verify. These should be maintained just as if they were part of the code required to compile your product. The last thing you want is an uninitiated programmer commenting out or deleting unit tests so they can deliver their code. Because the unit tests can be carried forward, they become a part of your automated regression test set. If you lose any of the tests, a previous bug may creep back in.

How to Unit Test

I'm sorry, it's not that simple. I am not going to profess that I have The Silver Bullet process for unit testing, or for any other part of software development, because there isn't one. If there were such a process, we wouldn't still be repeating the same mistakes described by Fred Brooks in The Mythical Man-Month. For those of you who have not read or even heard of this book, it was first printed in 1975. The book is a set of essays based on Brooks' experiences managing the development of IBM's OS/360. This may be one of the reasons a new development process emerges and gains traction every 5 to 8 years.

I will revisit this topic in the near future. I have much more knowledge and experience to share with you, including the techniques that have been the most successful for me. Each new project has brought new challenges, so I will be sure to relay the context in which the techniques were successful and when they caused trouble.

Summary

Unit testing is such a broad subject that multiple books are required to properly cover the topic. I have chosen to focus only on the intended purpose of software unit tests, and to clarify many of the misconceptions associated with them. Quality control should exist at many levels in the development process. It is very important for everyone in the development process to understand that unit tests alone are not enough to verify the final product. However, having a sufficient set of unit tests in place should significantly reduce the amount of time required to verify and release the final product.

Software unit tests provide a solid foundation on which to build the rest of your product. These tests are small, verify tiny units of logic in isolation, and are written by the programmers who wrote the code. Unit tests can be automated as part of the build process and become your product's first set of regression tests. Unit tests are very valuable, and should be maintained along with the code for your product. Keep these points in mind the next time you develop a strategy to verify a product that requires software.

Improve Code Clarity with Typedef

The concept of selecting descriptive variable names is a lesson that seems to start almost the moment you pick up your first programming book. This is sound advice, and I do not contest it. However, I think that this foundation can be improved by creating and using the most appropriate type for the task at hand. Do you believe that you already use the most appropriate type for each job? Read on and see if there is more you could do to improve the readability and maintainability of your programs, as well as to express your original intent more simply.

Before I demonstrate my primary point, I would like to first discuss a few of the other popular styles that exist as an attempt to introduce clarity into our software.

Hungarian Notation

First, a quick note about the Hungarian Notation naming convention. Those of us who started our careers developing Windows applications are all aware of this convention. It encodes the type of a variable in its name, using the first few letters as a type code. Here is an example list of prefixes, the types they represent, and a sample variable for each:

```cpp
bool    bDone;
char    cKey;
int     nLen;
long    lStyle;
float   fPi;
double  dPi;

// Here are some based on the portable types defined
// and used throughout the Win32 API set (<windows.h>).
BYTE    bCount;
WORD    wParam;
DWORD   dwSize;
SIZE    szSize;
LPCSTR  psz;
LPWSTR  pwz;
```

Some of the prefixes collide, such as those for the bool and BYTE types, which both use b. It's also quite common to see n used as the prefix for an integer when the author would like to create a variable to hold a count. Then we reach the types with historical names that no longer apply: LPCSTR, LPWSTR and all of the other types that start with LP. The LP stands for Long Pointer, and was a necessary discriminator with 16-bit Windows and the segmented memory architecture of the x86 chips. This antiquated term is no longer relevant on 32-bit and 64-bit systems. If you want more details, an article on x86 memory segmentation is a good starting point.

I used to develop with Hungarian Notation. Over time, I found variables scattered throughout the code that were marked with an incorrect type prefix. I would also find that a variable was better suited to a different type. Properly changing the type then required a global search and replace, because the name of the variable had to change as well.

Why is the Type Part of the Name?

This thought finally came to mind while I was recovering from a variable type change. Why do I need to change every instance of the name simply because I changed its type? I suppose this made sense when I think back to what the development tools were like when I first started programming. IDEs were little more than syntax-highlighting editors that also had hooks to compile and debug software.

It was not until the last decade that features like IntelliSense and programs like Visual Assist appeared and improved our programming proficiency. We now have the ability to move the cursor over a variable and have both its type and value displayed in place in the editor. This is such a simple, yet valuable addition. These advancements have made Hungarian Notation an antiquated practice. If you still prefer Notepad, may God have mercy on your soul.

Naming Conventions

Wow! Naming conventions, huh?! My instinct desperately urges me to simply skip this topic. This is a very passionate subject for almost every developer. Even people who do not write code feel the need to weigh in with an opinion. Let's simply say, for this discussion, that variable naming conventions should be simple, with a limited number of rules.

Even though I no longer use Hungarian Notation, I still like to lightly prefix variables in specific contexts, such as a 'p' prefix to indicate a pointer, 'm_' for my member variables, and 'k_' for constants. The 'm_' gives a hint to ownership in an object context, and it simplifies the naming of sets of variables. Anything that helps eliminate superfluous choices helps me focus on the important problems that I am trying to solve. One last prefix I almost forgot is 'sp' for a smart or shared pointer. These are nice little hints about how the object will be used or the behavior you can expect. The possibility always remains that these types will change; however, I have found that variables in these contexts rarely do.
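Here is a short sketch of these light prefixes in context. The Connection class, k_maxRetries and CountRetries are hypothetical names used only to show where each prefix appears; notice that none of the prefixes encode the underlying type, only how the variable is used.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical names, chosen only to show where each prefix appears.
const int k_maxRetries = 3;  // 'k_' marks a constant

class Connection {
public:
  // 'm_' frees the plain name 'host' for the parameter.
  explicit Connection(const std::string& host)
    : m_host(host), m_retries(0) {}

  const std::string& Host() const { return m_host; }

  // Returns false once the retry budget is used up.
  bool Retry() {
    if (m_retries >= k_maxRetries) {
      return false;
    }
    ++m_retries;
    return true;
  }

private:
  std::string m_host;     // 'm_' marks state owned by the object
  int         m_retries;
};

// 'sp' marks a shared (owning) pointer; 'p' marks a raw, borrowed pointer.
int CountRetries(std::shared_ptr<Connection> spConnection) {
  Connection* pConnection = spConnection.get();
  int count = 0;
  while (pConnection->Retry()) {
    ++count;
  }
  return count;
}
```

If m_retries later changed from int to unsigned, none of these names would need to change, which is the practical advantage over encoding the type itself.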

Increase the Clarity of Intent

Developing code is the expression of some abstract idea translated into a form the computer can comprehend. Before it even reaches that point, we developers need to understand the intention of the idea that has been coded. Using simple descriptive names for variables is a good start. However, there are other issues to consider as well. For example, what units do these two well-named variables hold?

```cpp
double velocity;
double sampleRate;
```

Unit Calculations

There is a potential problem lurking within all code that performs physical calculations. The unit type of a variable must be carefully tracked; otherwise, a function expecting meters may receive a value in millimeters. This problem can be nefarious and generally elusive. When you're lucky, you catch the factor-of-1000 error and make the proper adjustment. When things do not work out so well, one team may use the metric system while another team uses the imperial system for their calculations, and an expensive piece of equipment crashes onto Mars. Hey! It can happen.
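The following sketch shows why the compiler cannot help with this class of bug when every quantity is a plain double. TimeToImpactSeconds and its two callers are hypothetical; the point is that both calls compile cleanly, yet one result is off by a factor of 1000.

```cpp
#include <cassert>

// Hypothetical function: expects the distance in meters and the velocity
// in meters per second, but nothing in the signature enforces that.
double TimeToImpactSeconds(double distance, double velocity) {
  return distance / velocity;
}

// A caller whose data really is in meters and meters per second.
double FromMetricTeam() {
  double distance = 1500.0;  // meters
  double velocity = 300.0;   // meters per second
  return TimeToImpactSeconds(distance, velocity);
}

// A caller whose distance happens to be stored in millimeters.
double FromMillimeterTeam() {
  double distance = 1500000.0;  // the same distance, in millimeters
  double velocity = 300.0;      // meters per second
  // Compiles without complaint; the result is 1000 times too large.
  return TimeToImpactSeconds(distance, velocity);
}
```

The metric caller gets 5 seconds and the millimeter caller gets 5000 seconds, and nothing in the types distinguishes the correct call from the broken one.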

One obvious solution to help avoid this is to include the units in the name of the variable.

```cpp
double planeVelocityMetersPerSecond;
double missileVelocity_M_s;
long   planeNavSampleRatePerSecond;
long   missileNavSampleRate_sec;
```

I believe this method falls a bit short. We are once again encoding information in the variable name. It definitely is a step in the right direction, because the name of a variable is less likely to be ignored than a comment at its declaration that indicates its units. However, the unit of the variable can still change without the name being updated to reflect the correct unit.

Unfortunately, the best solution to this problem is only available in C++11. It is called user-defined literals. The suffixes that we are allowed to add to literal numbers to specify the actual type that we desire can now be defined by the user. We are no longer limited to the built-in suffixes such as u, l, ll and f. User-defined suffixes must begin with an underscore; suffixes without one are reserved for the standard library. This sort of natural mathematical expression is possible with user-defined literals:

```cpp
// The result type is determined by the user-defined
// suffixes appended to each value.
// The result type will be meters per second;
// a user-defined conversion has been implemented
// for this to become the type Velocity.

Velocity speed = 100_m / 10_s;

// The compiler will complain about this expression.
// The result type will be meter-seconds, and
// no conversion has been created for this calculation.

Velocity invalidSpeed = 100_m * 10_s;
```

It is now possible to define a units-based system, along with conversion operations that allow a meter type to be divided by a time type to produce a velocity type. I will most likely write another entry soon to document the full capabilities of this feature. I should also mention that Boost has a units library that provides very similar functionality for managing unit data with types. However, user-defined literals are not part of the Boost implementation; they are simply not possible without a change to the language.
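Here is a minimal, self-contained sketch of such a units system, assuming C++11 or later. The Meters, Seconds, MeterSeconds and Velocity types and the _m and _s suffixes are hypothetical names for this example, not any library's API. To keep it short, the suffixes only accept integer literals, and there is no conversion between units such as millimeters and meters.

```cpp
#include <cassert>
#include <type_traits>

// Hypothetical unit types; each wraps a value in a single unit.
struct Meters       { long double value; };
struct Seconds      { long double value; };
struct MeterSeconds { long double value; };
struct Velocity     { long double metersPerSecond; };

// Dividing a distance by a time yields a velocity.
constexpr Velocity operator/(Meters d, Seconds t) {
  return Velocity{ d.value / t.value };
}

// Multiplying them yields a different type that is not a Velocity.
constexpr MeterSeconds operator*(Meters d, Seconds t) {
  return MeterSeconds{ d.value * t.value };
}

// User-defined literal suffixes for integer literals, e.g. 100_m or 10_s.
constexpr Meters operator"" _m(unsigned long long v) {
  return Meters{ static_cast<long double>(v) };
}
constexpr Seconds operator"" _s(unsigned long long v) {
  return Seconds{ static_cast<long double>(v) };
}

Velocity speed = 100_m / 10_s;  // OK: Meters / Seconds -> Velocity

// Velocity invalidSpeed = 100_m * 10_s;  // error: no MeterSeconds -> Velocity

// Documents the same rule at compile time.
static_assert(!std::is_convertible<MeterSeconds, Velocity>::value,
              "meter-seconds must not convert to a velocity");
```

Uncommenting the invalidSpeed line produces a compile error, because no conversion from MeterSeconds to Velocity exists. The mistake is caught when the code is built, rather than discovered in the field.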

Additional Context Information for Types

The other method to improve the selection of types in your programs is to use typedef to create descriptive types that help indicate your intent. I have come across this simple for loop statement thousands of times:

```cpp
int maxCount = max;
for (int index = 0;
     index < maxCount;
     index++)
{
  DoWork(data[index]);
}
```

Although there is nothing logically incorrect with the previous block, because index is declared as a signed integer, future problems could creep in over time with maintenance changes. In the next sample, I have added two modifications that are particularly risky when a signed variable is used to index into an array. One of them modifies the index counter, which is always dangerous. The other does not initialize the index explicitly; instead, the index is initialized by a function call whose return value is not verified. Both changes are demonstrated below:

```cpp
for (int index = find(data, "start");
     index < maxCount;
     index++)
{
  if (!DoWork(data[index]))
  {
    index += WorkOffset(data[index]);
  }
}
```

The results could be disastrous. If the find call were to return a negative value, an out-of-bounds access would occur. This may not crash the application; however, it could corrupt memory in a subtle way. This creates a bug that is very difficult to track down, because the origin of the cause is usually nowhere near the actual manifestation of the negative side-effects. The other possibility is that modifying the counting index could also produce a negative index, depending on how WorkOffset is defined. A corollary to take away from this example is that it is not good practice to modify the counter in the middle of an active loop.

If a developer were stubborn and wanted to keep the integer type for the index, the loop's termination test should be written to keep spurious negative values from corrupting the data:

```cpp
for (int index = 0;
     index < maxCount && index >= 0;
     index++)
{
  // ...
}
```
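The following sketch puts the defensive termination test into a complete program, assuming hypothetical definitions for data, find, DoWork and WorkOffset: find returns -1 when the key is missing, and WorkOffset deliberately returns a large negative offset. With the extra index >= 0 test, both failure modes end the loop rather than indexing before the start of the array.

```cpp
#include <cassert>
#include <cstring>

// Hypothetical stand-ins for the names used in the loops above.
const int maxCount = 4;
const char* data[maxCount] = { "idle", "start", "busy", "done" };

// Returns the index of the first matching entry, or -1 when not found.
int find(const char* const* items, const char* key) {
  for (int i = 0; i < maxCount; ++i) {
    if (std::strcmp(items[i], key) == 0) {
      return i;
    }
  }
  return -1;
}

int visited = 0;  // counts how many elements the loop touched

// Fails for any item starting with 'b' (here, "busy").
bool DoWork(const char* item) {
  ++visited;
  return item[0] != 'b';
}

// A misbehaving offset that would push the index below zero.
int WorkOffset(const char*) { return -10; }

void ProcessFrom(const char* key) {
  // The index >= 0 test stops the loop instead of reading data[-1].
  for (int index = find(data, key);
       index < maxCount && index >= 0;
       index++)
  {
    if (!DoWork(data[index]))
    {
      index += WorkOffset(data[index]);
    }
  }
}
```

Starting from "start", the loop processes two elements, and then the negative offset ends it. Starting from a missing key, it never runs. The guard only stops the loop, though; the caller still receives no indication that something went wrong, which is why the return value of find deserves to be checked before the loop begins.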

Improved Approach