Alchemy is a collection of independent library components that specifically relate to efficient low-level constructs used with embedded and network programming.

The latest version of Embedded Alchemy[^] can be found on GitHub. The most recent entries, as well as Alchemy topics to be posted soon:

- Steganography[^]

- Coming Soon: Alchemy: Data View

- Coming Soon: Quad (Copter) of the Damned

This is an entry in the continuing series of blog entries that documents the design and implementation process of a library. This library is called Network Alchemy[^]. Alchemy performs data serialization, and it is written in C++.

I discussed the Typelist in greater detail in my previous post. However, up to this point I haven't demonstrated any practical uses for it. In this entry, I will develop further operations for the Typelist. To implement the final operations in this entry, I will build on the operations developed at the beginning, which leads to a simple and elegant solution.

Useful Operations

The operations I implemented in the previous entry were fundamental operations that closely mimic those you would find on a traditional run-time linked list. More sophisticated operations could be composed from those basic commands; however, the work would be tedious, and much of the resulting syntax would be clumsy for the end user. These are two things that I am trying to avoid in order to create a maintainable library. I will approach the implementation of these operations from a different tack, focused on ease of use and maintenance.

We need operations to navigate the data format definitions in Alchemy. My goal is to be able to iterate through all of the data fields specified in a network packet format, and appropriately process the data at each location in the packet. This includes identifying the offset of a field in the packet, performing the proper action for a field entry, and performing static checks on the types used in the API. These are the operations that I believe I will need:

- Length: The number of type entries in the list.

- TypeAt: The defined type at a specified index.

- SizeAt: The size (sizeof) of the type at a specified index.

- OffsetOf: The byte offset of the type at a specified index.

- SizeOf: The total size in bytes for all of the types specified in the list.

This list of operations should give me the functionality that I need to programmatically iterate through the well-defined fields of a message packet structure. I have already demonstrated the implementation of Length[^]. That leaves four new operations to implement. As you will see, some of these new operations will serve as building blocks for other, more complex operations. This is especially important in the functional programming environment that we are working within.

Style / Guidelines

I have a few remarks before I continue. Integral values, such as a size_t, can be used as template parameters; however, floating-point values (prior to C++20) and string literals cannot, and pointer parameters are heavily restricted. struct types are typically used when creating meta-functions because their members are public by default, which saves a small amount of verbosity in the code definitions.

The keywords typename and class are interchangeable in template parameter declarations. As a matter of preference, I choose typename to emphasize that the required type need not be a class type. Finally, class and struct templates may provide default template arguments using the same rule as default function arguments: every parameter after one with a default must also have a default. In C++03, function templates cannot have default template arguments at all; C++11 removed that restriction.
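A minimal sketch of these rules follows. FieldSize and Field are hypothetical names used only for illustration; they are not part of Alchemy.

```cpp
#include <cstddef>

// A std::size_t value used as a non-type template parameter.
template < std::size_t N >
struct FieldSize
{
  // struct members are public by default, so no 'public:' is needed.
  static const std::size_t value = N;
};

// 'typename' is used even though T may be a non-class type such as short.
// Once a parameter has a default, every parameter after it needs one too.
template < typename T,
           std::size_t SizeT = sizeof(T),
           typename TagT = void >
struct Field
{
  typedef T type;
  static const std::size_t size = SizeT;
};

// The defaults for SizeT and TagT are filled in automatically.
typedef Field< short > short_field;
```

Reversing the order of SizeT and T (a defaulted parameter followed by one without a default) would be rejected for a class template.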

TypeAt

Traditionally, nodes in a linked list are accessed by iterating through the list until the desired node is found, which makes access a linear, O(n), operation. Something to keep in mind when meta-programming is that many operations reduce to a constant run-time cost, O(1). However, traversing the Typelist with the traditional linked-list iteration method is inconveniently verbose, and it still requires O(n) compile-time operations. The code below demonstrates the explicit extraction of the three types from our example Typelist:

```cpp
// This syntax is required to access the three types:
integral_t::type::head_t                 charVal  = 0;
integral_t::type::tail_t::head_t         shortVal = 0;
integral_t::type::tail_t::tail_t::head_t longVal  = 0;
```

Because the message definitions in Alchemy may have 20-30 type entries, I want to create access methods that rely on a node index rather than an explicit chain of tail_t references. The underlying implementation will be basically the same as a linked-list iteration. However, because the compiler reduces these operations to O(1) at run time, we will not pay a run-time cost for providing random access to a sequential construct.

If you are worried about an enormous increase in your compile times, stop worrying, for now at least. Compilers have become very efficient at instantiating templates and remembering what has already been generated. If a recursive access of the 25th node has already been generated and access to the 26th node is requested later, a modern compiler reuses the instantiations it has already created up to the 25th node, so only one new instantiation is needed to reach the 26th element. Unfortunately, this cost must be paid in each compilation unit in which these templates are instantiated. There is no need to prematurely optimize your template structures until you determine that the templates have become a problem.

The first step is to declare the signature of TypeAt. This code is a template declaration; the definition has not been provided yet.

```cpp
/// Return the type at the specified index
template < size_t IdxT,
           typename ContainerT,
           typename ErrorT = error::Undefined_Type >
struct TypeAt;
```

Generic Definition

We will provide a catch-all generic definition that simply declares the specified error type. This is the version that will be instantiated for any TypeAt whose ContainerT parameter does not match a defined specialization:

```cpp
/// Return the type at the specified index.
/// The default for ErrorT was already supplied in the declaration
/// above; C++ does not allow it to be repeated here.
template < size_t IdxT,
           typename ContainerT,
           typename ErrorT >
struct TypeAt
{
  typedef ErrorT type;
};
```

The Final Piece

The first step is to determine what the correct implementation would look like for a type at a specified index. To access the third element of our integral_t Typelist, the usage would look like this: TypeAt< 2, integral_t >::type. It's important to remember that integral_t is actually a typedef for the template instantiation with our defined types. Also, the Typelist definition for an Alchemy message will initially provide 32 entries.

Our Typelist definition already names every type by its index in the template parameter list (T0, T1, T2, ...). Rather than writing a recursive loop and a terminator to count down to the correct type, we can refer to the indexed type directly. The only catch is that this method requires a separate template specialization for each possible index. For example, the specialization for index 2 must be defined like this:

```cpp
template < typename T0,  typename T1,  typename T2,  typename T3,
           typename T4,  typename T5,  typename T6,  typename T7,
           typename T8,  typename T9,  typename T10, typename T11,
           typename T12, typename T13, typename T14, typename T15,
           typename T16, typename T17, typename T18, typename T19,
           typename T20, typename T21, typename T22, typename T23,
           typename T24, typename T25, typename T26, typename T27,
           typename T28, typename T29, typename T30, typename T31,
           typename ErrorT >
struct TypeAt< 2,
               TypeList< T0,  T1,  T2,  T3,  T4,  T5,  T6,  T7,
                         T8,  T9,  T10, T11, T12, T13, T14, T15,
                         T16, T17, T18, T19, T20, T21, T22, T23,
                         T24, T25, T26, T27, T28, T29, T30, T31 >,
               ErrorT >
{
  typedef T2 type;
};
```

After reaching this implementation, I decided that I had to find a simpler solution. To implement the final piece of the TypeAt operation, we will rely on MACRO code generation[^]. The work remains minimal with the MACROs that I introduced earlier. This is the MACRO that defines the template specialization for each index:

```cpp
// TypeAt Declaration MACRO
#define tmp_ALCHEMY_TYPELIST_AT(I)                        \
  template < TMP_ARRAY_32(typename T),                    \
             typename ErrorT >                            \
  struct TypeAt< (I),                                     \
                 TypeList< TMP_ARRAY_32(T) >,             \
                 ErrorT                                   \
               >                                          \
  {                                                       \
    typedef TypeList < TMP_ARRAY_32(T) > container;       \
    typedef T##I type;                                    \
  }
```

Invoking the MACRO as tmp_ALCHEMY_TYPELIST_AT(2); defines the entire structure above.

Therefore, I invoke this MACRO once for each index allowed in the Typelist.

```cpp
// MACRO Declarations for each ENTRY
// that is supported for the TypeList size
tmp_ALCHEMY_TYPELIST_AT(0);
tmp_ALCHEMY_TYPELIST_AT(1);
tmp_ALCHEMY_TYPELIST_AT(2);
tmp_ALCHEMY_TYPELIST_AT(3);
// ...
tmp_ALCHEMY_TYPELIST_AT(30);
tmp_ALCHEMY_TYPELIST_AT(31);

// Undefining the MACRO to prevent its further use.
#undef tmp_ALCHEMY_TYPELIST_AT
```
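To see the whole TypeAt technique compile end to end, here is a scaled-down sketch under stated assumptions: a hypothetical 4-slot TypeList (Alchemy uses 32) whose unused slots default to a type named empty, a hand-written SKETCH_TYPELIST_AT MACRO in place of TMP_ARRAY_32, and the catch-all primary template that reports the error type.

```cpp
#include <cstddef>
#include <type_traits>

// Hypothetical stand-ins: a 4-slot TypeList whose unused slots
// default to 'empty', plus an incomplete error tag type.
struct empty {};
namespace error { struct Undefined_Type; }

template < typename T0 = empty, typename T1 = empty,
           typename T2 = empty, typename T3 = empty >
struct TypeList {};

// Primary template: any container without a matching
// specialization reports the error type.
template < std::size_t IdxT, typename ContainerT,
           typename ErrorT = error::Undefined_Type >
struct TypeAt
{
  typedef ErrorT type;
};

// One partial specialization per index, stamped out by a MACRO.
#define SKETCH_TYPELIST_AT(I)                                     \
  template < typename T0, typename T1, typename T2, typename T3,  \
             typename ErrorT >                                    \
  struct TypeAt< (I), TypeList< T0, T1, T2, T3 >, ErrorT >        \
  {                                                               \
    typedef T##I type;                                            \
  }

SKETCH_TYPELIST_AT(0);
SKETCH_TYPELIST_AT(1);
SKETCH_TYPELIST_AT(2);
SKETCH_TYPELIST_AT(3);
#undef SKETCH_TYPELIST_AT

typedef TypeList< char, short, long > integral_t;

// Usage: index 2 of integral_t is long.
static_assert(std::is_same< TypeAt< 2, integral_t >::type, long >::value,
              "TypeAt<2> should be long");
```

An index with no specialization (4 here), or a container that is not a TypeList at all, falls through to the primary template and yields error::Undefined_Type, which then shows up by name in any resulting compiler error.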

Boost has libraries that are implemented in a similar way; however, Boost goes a step further and uses preprocessor MACROs to generate each of these code-generation invocations during preprocessing, based on a configurable constant. I have simply defined each instance by hand, because I have not created a preprocessor library as sophisticated as Boost's. Also, at the moment, my library is a small, special-purpose library. If it becomes more generic and sees wide-spread use, it would probably be worth the effort to switch to generated definitions.

SizeOf

Although a built-in operator already reports the size of a type, we will also need the size of a nested structure. We represent structure formats with a Typelist, which does not actually contain any data, so sizeof cannot report the size of the format it describes. Therefore, we will create a SizeOf operation that can report the size of both an intrinsic type and a Typelist. We could take the same approach as TypeAt, with MACROs to generate templates for each size of Typelist and a generic version for intrinsic types. However, if we slightly alter our Typelist definition, we can implement this operation with a few simple templates.

Type-traits

We will now introduce the concept of type-traits[^] into our Typelist implementation to help us distinguish between the different kinds of objects and containers that we create. This is as simple as creating a new type:

```cpp
struct container_trait{};
```

Now derive the Typelist template from container_trait. The example below is from an expansion of the 3-node declaration of our Typelist:

```cpp
template< typename T0, typename T1, typename T2 >
struct TypeList< T0, T1, T2 >
  : container_trait
{
  typedef TypeNode< T0,
          TypeNode< T1,
          TypeNode< T2, empty >
          > > type;
};
```

We now need a way to discriminate between container_trait types and all other types. We will use one of the templates found in the <type_traits> header, std::is_base_of. This template yields a Boolean value that is true if the type passed in derives from (or is the same class as) the specified base class.

```cpp
#include <type_traits>

// Objects derived from the container_trait
// are considered type containers.
template < typename T >
struct type_container
  : std::integral_constant
      < bool, std::is_base_of< container_trait, T >::value >
{ };
```
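A self-contained sketch of the discriminator in action, assuming a hypothetical 3-slot TypeList stand-in that carries the container_trait tag and defaults its unused slots to a type named empty:

```cpp
#include <type_traits>

// Tag base: anything derived from it is treated as a type container.
struct container_trait {};

// Hypothetical 3-slot TypeList stand-in that carries the tag.
struct empty {};
template < typename T0 = empty, typename T1 = empty, typename T2 = empty >
struct TypeList : container_trait {};

template < typename T >
struct type_container
  : std::integral_constant< bool, std::is_base_of< container_trait, T >::value >
{ };

// Usage: only the tagged TypeList is reported as a container.
static_assert(type_container< TypeList< char, short > >::value,
              "a TypeList is a type container");
static_assert(!type_container< long >::value,
              "an intrinsic type is not");
```

std::is_base_of is simply false for non-class types such as long, so the same trait works for intrinsic types without any extra specialization.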

This type discriminator can be used to select the correct implementation of SizeOf.

```cpp
template < typename T >
struct SizeOf
  : detail::SizeOf_Impl
      < T,
        type_container< T >::value
      >
{ };
```

That leaves two distinct meta-functions to implement: one that calculates the size of type containers, and another that reports the size of all other types. I have adopted a style that Boost uses in its library implementations, which is to enclose helper constructs in a nested namespace called detail. This is a good way to tell users of your library that its contents are implementation details, since these constructs cannot be hidden from view.

```cpp
// ContainerSize is defined later; it must be declared
// before SizeOf_Impl refers to it.
template < typename T >
struct ContainerSize;

namespace detail
{

// Parameterized implementation of SizeOf
template < typename T, bool isContainer = false >
struct SizeOf_Impl
  : std::integral_constant< size_t, sizeof(T) >
{ };

// SizeOf implementation for type_containers
template < typename T >
struct SizeOf_Impl< T, true >
  : std::integral_constant< size_t,
                            ContainerSize< T >::value
                          >
{ };

} // namespace detail
```

The generic implementation of this template simply uses the built-in sizeof operator to report the size of type T. The specialization for type containers calls another meta-function, ContainerSize, to calculate the size of the Typelist container. I will have to wait to show you that until a few more of our operations are implemented.

SizeAt

The implementation of SizeAt builds upon both TypeAt and SizeOf. It also returns to MACRO code generation to reduce the verbosity of the definitions. This implementation queries the type at the specified index, then uses SizeOf to report the size of that type. Here is the MACRO that defines the template for each index:

```cpp
// Forward declaration of the primary template
template < size_t IdxT, typename ContainerT >
struct SizeAt;

// SizeAt Declaration MACRO
#define tmp_ALCHEMY_TYPELIST_SIZEOF_ENTRY(I)                       \
  template < TMP_ARRAY_32(typename T) >                            \
  struct SizeAt< (I), TypeList< TMP_ARRAY_32(T) > >                \
  {                                                                \
    typedef TypeList< TMP_ARRAY_32(T) > Container;                 \
    typedef typename TypeAt< (I), Container >::type TypeAtIndex;   \
    enum { value = SizeOf< TypeAtIndex >::value };                 \
  }
```

Once again, a set of explicit MACRO invocations defines each instance of this meta-function.

```cpp
// MACRO Declarations for each ENTRY that is supported for the TypeList size
tmp_ALCHEMY_TYPELIST_SIZEOF_ENTRY(0);
tmp_ALCHEMY_TYPELIST_SIZEOF_ENTRY(1);
tmp_ALCHEMY_TYPELIST_SIZEOF_ENTRY(2);
// ...
tmp_ALCHEMY_TYPELIST_SIZEOF_ENTRY(30);
tmp_ALCHEMY_TYPELIST_SIZEOF_ENTRY(31);

// Undefining the declaration MACRO
// to prevent its further use.
#undef tmp_ALCHEMY_TYPELIST_SIZEOF_ENTRY
```
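A compact sketch of how SizeAt composes the earlier pieces, under these assumptions: a hypothetical 3-slot TypeList stand-in, a simplified SizeOf that only wraps sizeof (no nested-container dispatch), and a single generic SizeAt template in place of one MACRO expansion per index.

```cpp
#include <cstddef>
#include <type_traits>

// Hypothetical 3-slot stand-in for the 32-slot TypeList.
struct empty {};
template < typename T0 = empty, typename T1 = empty, typename T2 = empty >
struct TypeList {};

// TypeAt: one specialization per index, generated by a MACRO.
template < std::size_t IdxT, typename ContainerT > struct TypeAt;
#define SKETCH_TYPELIST_AT(I)                          \
  template < typename T0, typename T1, typename T2 >   \
  struct TypeAt< (I), TypeList< T0, T1, T2 > >         \
  {                                                    \
    typedef T##I type;                                 \
  }
SKETCH_TYPELIST_AT(0);
SKETCH_TYPELIST_AT(1);
SKETCH_TYPELIST_AT(2);
#undef SKETCH_TYPELIST_AT

// Simplified SizeOf: plain sizeof, without the nested-container dispatch.
template < typename T >
struct SizeOf : std::integral_constant< std::size_t, sizeof(T) > { };

// SizeAt: look up the type at index IdxT, then report its size.
// (A single generic template here, instead of one MACRO per index.)
template < std::size_t IdxT, typename ContainerT >
struct SizeAt
  : SizeOf< typename TypeAt< IdxT, ContainerT >::type >
{ };

typedef TypeList< char, short, long > integral_t;
```

Because SizeAt only forwards to TypeAt and SizeOf, upgrading SizeOf to the container-aware version automatically gives SizeAt support for nested Typelists.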

OffsetOf

Now we're picking up steam. To calculate the offset of an item in a Typelist, we must start from the beginning and sum the sizes of all of the preceding entries. This requires a recursive solution to perform the iteration, as well as the three operations that we have implemented up to this point. Here is the forward declaration of this meta-function:

```cpp
// Forward Declaration
template < size_t Index,
           typename ContainerT >
struct OffsetOf;
```

You may have noticed that I do not always provide a forward declaration. Whether I do usually depends on whether my general implementation will be the first instance the compiler encounters, on dependencies, or on whether I have a specialization that I would like to put in place. In this case, I am going to implement a specialization for index zero, because the offset of the first item is always zero. This specialization also acts as the terminator for the recursive calculation.

```cpp
/// The item at index 0 will always have an offset of 0.
template < typename ContainerT >
struct OffsetOf< 0, ContainerT >
{
  enum { value = 0 };
};
```

One of the nastier problems to tackle when writing template meta-programs is that debugging becomes very difficult: by the time you are actually able to see what was generated, the compiler has often reduced your code to a single number. Therefore, I like to write a traditional function that performs a similar calculation first, then convert it to a template, much as I would when converting an ordinary class into a class template. This is essentially the logic we need to calculate the byte offset of an entry in a Typelist definition:

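```cpp
#include <cstddef>

// An array of values to stand in
// for the sizes of the Typelist entries.
size_t elts[] = {1,2,3,4,5,6};

size_t OffsetOf(size_t index)
{
  // Index 0 always has an offset of 0; this terminates the recursion.
  if (index == 0)
    return 0;

  // The offset of the previous item plus the size of the previous item.
  return OffsetOf(index - 1)
       + elts[index - 1];
}
```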

This code adds the size of the preceding item to that item's offset, which yields the offset of the requested item. To get the offset of the preceding item, it recursively performs the same action until index 0 is reached, which terminates the recursion. This is what the OffsetOf function looks like once it is converted into a template and a code-generating MACRO:

```cpp
// OffsetOf Declaration MACRO
#define tmp_ALCHEMY_TYPELIST_OFFSETOF(I)                       \
  template < TMP_ARRAY_32(typename T) >                        \
  struct OffsetOf< (I), TypeList< TMP_ARRAY_32(T) > >          \
  {                                                            \
    typedef TypeList< TMP_ARRAY_32(T) > container;             \
                                                               \
    enum { value = OffsetOf< (I)-1, container >::value         \
                 + SizeAt < (I)-1, container >::value };       \
  }
```

This operation also requires the series of MACRO declarations to properly define the template for every index. However, this time we do not define an entry for index 0 since we explicitly implemented a specialization for it.

```cpp
// Offset for zero is handled as a special case above
tmp_ALCHEMY_TYPELIST_OFFSETOF(1);
tmp_ALCHEMY_TYPELIST_OFFSETOF(2);
// ...
tmp_ALCHEMY_TYPELIST_OFFSETOF(31);

// Undefining the declaration MACRO to prevent its further use.
#undef tmp_ALCHEMY_TYPELIST_OFFSETOF
```
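The following sketch exercises the same recursion on a hypothetical 3-slot TypeList stand-in: a forward declaration, the index-0 terminator, and one MACRO-generated specialization per index, including index 3, one past the end. The offsets are packed (they ignore alignment padding), which is what a byte-packed wire format needs; SKETCH_TYPELIST_AT and SKETCH_TYPELIST_OFFSETOF are illustrative names.

```cpp
#include <cstddef>
#include <type_traits>

// Hypothetical 3-slot stand-ins for TypeList, TypeAt and SizeAt.
struct empty {};
template < typename T0 = empty, typename T1 = empty, typename T2 = empty >
struct TypeList {};

template < std::size_t IdxT, typename ContainerT > struct TypeAt;
#define SKETCH_TYPELIST_AT(I)                          \
  template < typename T0, typename T1, typename T2 >   \
  struct TypeAt< (I), TypeList< T0, T1, T2 > >         \
  {                                                    \
    typedef T##I type;                                 \
  }
SKETCH_TYPELIST_AT(0);
SKETCH_TYPELIST_AT(1);
SKETCH_TYPELIST_AT(2);
#undef SKETCH_TYPELIST_AT

template < std::size_t IdxT, typename ContainerT >
struct SizeAt
  : std::integral_constant< std::size_t,
                            sizeof(typename TypeAt< IdxT, ContainerT >::type) >
{ };

// OffsetOf: forward declaration plus the index-0 terminator.
template < std::size_t Index, typename ContainerT > struct OffsetOf;

template < typename ContainerT >
struct OffsetOf< 0, ContainerT > : std::integral_constant< std::size_t, 0 >
{ };

// Offset of I = offset of (I - 1) + size of (I - 1).
// Index 3 is one past the end of this 3-slot list.
#define SKETCH_TYPELIST_OFFSETOF(I)                                  \
  template < typename T0, typename T1, typename T2 >                 \
  struct OffsetOf< (I), TypeList< T0, T1, T2 > >                     \
    : std::integral_constant< std::size_t,                           \
        OffsetOf< (I) - 1, TypeList< T0, T1, T2 > >::value           \
        + SizeAt< (I) - 1, TypeList< T0, T1, T2 > >::value >         \
  { }
SKETCH_TYPELIST_OFFSETOF(1);
SKETCH_TYPELIST_OFFSETOF(2);
SKETCH_TYPELIST_OFFSETOF(3);
#undef SKETCH_TYPELIST_OFFSETOF

typedef TypeList< char, short, long > integral_t;
```

The values match the run-time function above with elts replaced by the entry sizes: each offset is the running total of the sizes before it.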

ContainerSize

Only one operation remains, the one that we had to put aside until more of the Typelist operations were complete. The purpose of ContainerSize is to calculate the size of an entire Typelist. This is essential for supporting nested data structures. Here is the implementation:

```cpp
template < typename T >
struct ContainerSize
  : type_check< type_container< T >::value >
  , std::integral_constant
      < size_t,
        OffsetOf< Hg::length< T >::value, T >::value
      >
{ };
```

I will give you a moment to wrap your head around this.

The first thing that I do is verify that the type T passed into this template is in fact a type container, the Typelist. type_check is a simple template that verifies that its predicate evaluates to true. It is only defined for true; when the predicate is false there is no definition, which triggers a compiler error. In the actual source, I have comments that indicate what would cause an error related to type_check and how to resolve it.

Next, the implementation itself is extremely simple. The value is defined as the offset of the item one past the last item defined in the Typelist. This behaves very much like end iterators in the STL: it is OK to refer to the element one past the end of the list, as long as it is not dereferenced for a value. That element is never dereferenced by OffsetOf, because OffsetOf for index N only queries the size of the item at index N - 1.
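To check that the pieces fit together, here is an end-to-end sketch with a nested Typelist. It rests on several assumptions: a hypothetical 3-slot TypeList, an unqualified length that counts non-empty slots (standing in for Hg::length), and a single recursive OffsetOf template rather than one MACRO per index, to keep the sketch short.

```cpp
#include <cstddef>
#include <type_traits>

// Hypothetical 3-slot stand-ins; unused slots default to 'empty'.
struct empty {};
struct container_trait {};
template < typename T0 = empty, typename T1 = empty, typename T2 = empty >
struct TypeList : container_trait {};

template < typename T >
struct type_container
  : std::integral_constant< bool, std::is_base_of< container_trait, T >::value >
{ };

// Only type_check<true> is defined; type_check<false> is incomplete,
// so deriving from it is a compile error.
template < bool PredicateT > struct type_check;
template < > struct type_check< true > { };

// length: the number of non-empty slots (entries assumed packed at the front).
template < typename ContainerT > struct length;
template < typename T0, typename T1, typename T2 >
struct length< TypeList< T0, T1, T2 > >
  : std::integral_constant< std::size_t,
        (std::is_same< T0, empty >::value ? 0 : 1)
      + (std::is_same< T1, empty >::value ? 0 : 1)
      + (std::is_same< T2, empty >::value ? 0 : 1) >
{ };

template < std::size_t IdxT, typename ContainerT > struct TypeAt;
#define SKETCH_TYPELIST_AT(I)                          \
  template < typename T0, typename T1, typename T2 >   \
  struct TypeAt< (I), TypeList< T0, T1, T2 > >         \
  {                                                    \
    typedef T##I type;                                 \
  }
SKETCH_TYPELIST_AT(0);
SKETCH_TYPELIST_AT(1);
SKETCH_TYPELIST_AT(2);
#undef SKETCH_TYPELIST_AT

template < typename T > struct ContainerSize;   // defined below

namespace detail
{
  template < typename T, bool isContainer = false >
  struct SizeOf_Impl : std::integral_constant< std::size_t, sizeof(T) > { };

  template < typename T >
  struct SizeOf_Impl< T, true >
    : std::integral_constant< std::size_t, ContainerSize< T >::value > { };
} // namespace detail

template < typename T >
struct SizeOf : detail::SizeOf_Impl< T, type_container< T >::value > { };

template < std::size_t IdxT, typename ContainerT >
struct SizeAt : SizeOf< typename TypeAt< IdxT, ContainerT >::type > { };

// A single recursive OffsetOf, instead of one MACRO per index.
template < std::size_t Index, typename ContainerT >
struct OffsetOf
  : std::integral_constant< std::size_t,
        OffsetOf< Index - 1, ContainerT >::value
      + SizeAt< Index - 1, ContainerT >::value >
{ };

template < typename ContainerT >
struct OffsetOf< 0, ContainerT > : std::integral_constant< std::size_t, 0 > { };

// The offset one past the last entry is the size of the whole container.
template < typename T >
struct ContainerSize
  : type_check< type_container< T >::value >
  , std::integral_constant< std::size_t, OffsetOf< length< T >::value, T >::value >
{ };

// A nested format: the middle field is itself a Typelist.
typedef TypeList< char, short >         inner_t;
typedef TypeList< long, inner_t, char > outer_t;
```

Passing a non-container such as int directly to ContainerSize would derive from the incomplete type_check<false> and fail to compile, which is exactly the guard described above.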

Summary

This covers just about all of the work that is required of the Typelist for Alchemy. At this point, I have a type container whose types I can navigate in order, whose entries' sizes and byte offsets I can determine, and which can even hold nested Typelists.

What is the next step? I will need to investigate how to represent data internally, and how to provide the user efficient access with value semantics. I will also be posting on more abstract concepts that will be important to understand as we get deeper into the implementation of Alchemy, such as SFINAE and argument-dependent lookup (ADL) for templates.

I would like to devote this entry to further discussion of the Typelist data type. Previously, I explored the Typelist[^] for use in my network library, Alchemy[^]. I decided that it would be a better construct for managing type information than std::tuple. The primary reason is that there is no data associated with the types placed within a Typelist. std::tuple, on the other hand, manages data values internally, similar to std::pair. However, this extra storage would cause some challenging conflicts for problems that we will be solving in the near future. I would not have foreseen this had I not already created an initial version of this library as a proof of concept. I will be sure to elaborate more on this topic when it becomes more relevant.

Linked-Lists

Linked lists are one of the fundamental data structures used throughout computer science. After the fixed-size array, the linked list is probably the simplest data structure to implement. The Typelist is a good structure to study if you are trying to learn C++ template meta-programming. While the linked list and the Typelist are built in completely different ways, their structure, operations and concepts are quite similar.

Any class or structure can be converted to a node in a linked list by adding a member pointer, typically called next or tail, that refers to the next item in the list. Generally this is not the most effective implementation; however, it is common, simple, and fits very nicely with the concepts I am trying to convey. Here is an example C structure that we will use as a basis for comparison while we develop a complete set of operations for the Typelist meta-construct:

```cpp
// An integer holder
struct integer
{
  long value;
};
```

This structure can become a node in a linked-list by adding a single pointer to a structure of the same type:

```cpp
// A Node in a list of integers
struct integer
{
  long     value;
  integer *pNext;
};
```

Given a pointer to the first node in the list, called head, each of the remaining nodes in the list can be accessed by traversing the pNext pointer. The last node in the list should set its pNext member to 0 to indicate the end of the list. Here is an example of a loop that prints out the value of every node in a list:

```cpp
#include <cstdio>

void PrintValues (integer *pHead)
{
  integer *pCur = pHead;
  while (pCur)
  {
    // value is a long, so the %ld conversion is required.
    printf("Value: %ld\n", pCur->value);
    pCur = pCur->pNext;
  }
}
```

This function is considered to be written in an imperative style because of the pCur state variable that is updated with each pass through the loop. Recall that template meta-programming does not allow mutable state; therefore, meta-programs must rely on functional programming techniques to solve problems. Let's modify the C function above to eliminate the use of mutable state. This can be accomplished with recursion.

```cpp
#include <cstdio>

void PrintValues (integer *pHead)
{
  if (pHead)
  {
    printf("Value: %ld\n", pHead->value);
    PrintValues(pHead->pNext);
  }
}
```

This last function makes a single test for the validity of the input parameter, then performs the print operation. Afterwards it will call itself recursively for the next node in the list. When the last node is reached, the input test will fail, and the function will exit with no further actions. Since that is the last operation in the function, each instance of the call will pop off of the call stack until the stack frame the call originated from is reached. Incidentally, this type of recursion is called tail recursion. As we saw earlier, this form of recursion can easily be written as a loop in imperative style programs.
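To show how close this recursive form already is to a meta-program, here is a sketch that is not part of Alchemy: it sums the list instead of printing it, and it is written as a C++11 constexpr function so the compiler can evaluate it. SumValues and node1 through node3 are hypothetical names, and the node's pointer is const-qualified so the list can be built at compile time.

```cpp
// Same layout as the integer node above, but const-qualified so that a
// list can be built and traversed entirely at compile time (C++11).
struct integer
{
  long           value;
  const integer *pNext;
};

// Tail-recursive and free of mutable state: the running total is passed
// along as an argument instead of being updated in a loop variable.
constexpr long SumValues(const integer *pHead, long total = 0)
{
  return pHead ? SumValues(pHead->pNext, total + pHead->value)
               : total;
}

// A three-node list with static storage duration.
constexpr integer node3 = { 3, nullptr };
constexpr integer node2 = { 2, &node3 };
constexpr integer node1 = { 1, &node2 };
```

The accumulator parameter keeps the recursive call in tail position, mirroring PrintValues, where the recursive call is also the last action taken.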

Typelists

Let's turn our focus to the main topic now, Typelists. Keep in mind that the goal of using a construct like a Typelist is to manage and process type information at compile-time, rather than process data at run-time. Here is the node definition I presented in a previous post to build up a Typelist with templates:

```cpp
template < typename T, typename U >
struct Typenode
{
  typedef T head_t;
  typedef U tail_t;
};

// Here is a sample declaration of a Typelist.
// Refer to my previous blog entry on
// Typelists for the details.
typedef Typelist < char, short, long > integral_t;

// This syntax is required to access the type, long:
integral_t::type::tail_t::tail_t::head_t longVal = 0;
```
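Because the Typelist wrapper that produces the node chain is defined in an earlier entry, this self-contained sketch builds the chain by hand. The integral_t struct here is a hypothetical stand-in for Typelist< char, short, long > that exposes the same nested type, and empty is assumed as the terminator.

```cpp
#include <type_traits>

// Terminator for the end of the chain.
struct empty {};

template < typename T, typename U >
struct Typenode
{
  typedef T head_t;
  typedef U tail_t;
};

// Hypothetical hand-built stand-in for Typelist< char, short, long >:
// it exposes the node chain through a nested 'type'.
struct integral_t
{
  typedef Typenode< char, Typenode< short, Typenode< long, empty > > > type;
};

// Each ::tail_t step moves one node further down the chain.
static_assert(std::is_same< integral_t::type::tail_t::tail_t::head_t, long >::value,
              "the third node holds long");
```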

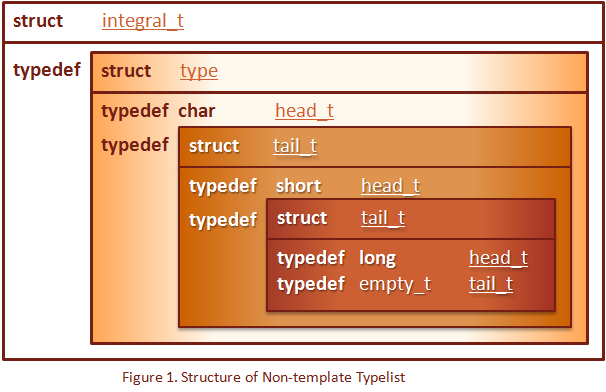

Object Structure

The concepts and ideas in computer science are very abstract. Even when code is presented, it is merely a representation of an abstract concept in some cryptic combination of symbols. It may be helpful to create a visualization of the structure of the objects that we are dealing with. Figure 1 illustrates the nested structure that is used to construct the Typelist we have just defined:

Another way to relate to this purely conceptual type defined with templates is to define the same structure without templates. Here is the Typelist above, defined in C++ without templates:

```cpp
// Conceptual only: C++ forbids a member from having the same name as
// its enclosing class, so the nested tail_t declarations will not
// compile as written.
struct integral_t
{
  struct type
  {
    typedef char head_t;
    struct tail_t
    {
      typedef short head_t;
      struct tail_t
      {
        typedef long    head_t;
        typedef empty_t tail_t;
      };
    };
  };
};
```

This image depicts the nested structure of this Typelist definition.

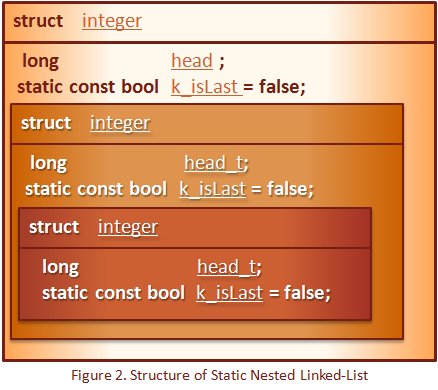

There is one final definition that I think will help demonstrate the similarities between the structures of the linked list and the Typelist. When composing a solution for the templated Typelist, it may be useful to think about how you would solve the problem with a nested linked-list definition. Imagine what the structure of the linked list would look like if the next node were defined in place, inside the current integer holder, rather than referenced through a pointer. We will replace the zero-pointer terminator with a static constant that indicates whether the node is the last node. Finally, I have also renamed the fields from value to head, and from pNext to tail. Here is the definition required for a 3-entry list:

```cpp
// A 3-node integer list.
// Conceptual only: C++ does not allow a nested struct to share the
// name of its enclosing struct, so this will not compile as written.
struct integer
{
  long head;
  struct integer
  {
    long head;
    struct integer
    {
      long head;
      static const bool k_isLast = true;
    } tail;
    static const bool k_isLast = false;
  } tail;
  static const bool k_isLast = false;
};
```

Here is an illustration of the structure of this statically defined linked list.

Take note of the consequences of the last change that we made to the structure of the linked-list implementation. It is no longer a dynamic structure. It is now a static definition whose structure is fixed once it is compiled. Unlike the Typelist, each nested node does contain a value that can be modified. However, in all other respects, the two constructs are similar. Hopefully these alternative definitions help you gain a better grasp of the abstract structures we are working with as we create useful operations for them.

Basic Operations

Let's run through building a few operations for the Typelist that are similar to operations commonly used with a linked list. The structure of the Typelist that we have defined really leaves only one useful goal for us to accomplish: accessing the data type defined inside a specific node. This is more complicated than it sounds, because we have to adhere to the strict rules of functional programming, i.e., no mutable state and no side effects.

Error Type

Before we continue, it might be useful to define a type that indicates an error has occurred in our meta-program. This is useful because the error type will appear in the compiler error message, which can help us deduce the cause of the problem more easily. We will simply define a few new types, and we can add to this list as necessary:

```cpp
namespace error
{
  struct Undefined_Type;
  struct Invalid_Type_Size;
}
```

The type definitions do not need to be complete definitions because they are never intended to be instantiated. Remember, type declarations are the mechanism we use to define constants and values in meta-programming.

Syntactic Sugar

My previous entry was on using the preprocessor for code generation[^]. I demonstrated how to use the preprocessor to simplify some of the verbose Typelist definitions that we have had to use up to this point. I use these MACROs to provide syntactic sugar for some of the implementations below. For example, the regular form of a Typelist template declaration looks like this:

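```cpp
template < typename T0,  typename T1,  typename T2,  typename T3,
           typename T4,  typename T5,  typename T6,  typename T7,
           typename T8,  typename T9,  typename T10, typename T11,
           typename T12, typename T13, typename T14, typename T15,
           typename T16, typename T17, typename T18, typename T19,
           typename T20, typename T21, typename T22, typename T23,
           typename T24, typename T25, typename T26, typename T27,
           typename T28, typename T29, typename T30, typename T31 >
```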

The previous declaration can be shortened to the following form with the code-generation MACRO:

template < TMP_ARRAY_32(typename T) >

Many of the implementations below define an additional specialization that matches this format. That is because this form of the definition is the outer wrapper that contains the internally defined TypeNode chain, and all of the implementations below are developed to work upon the TypeNode. If we did not provide this syntactic sugar, a different implementation of each operation would be required for each Typelist of a different size. For 32 nodes, that would require 32 separate implementations.

Length

I showed the implementation of the Length operation in the blog entry in which I introduced the Typelist. Here is a link to that implementation for you to review: Length[^]. With the Length operation, we have our first meta-function to extract information from the Typelist. Here is what a call to Length looks like:

```cpp
// Calls the meta-function Length
// to get the number of items in integral_t.
size_t count = Length < integral_t >::value;

// count now equals 3
```
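For readers who do not want to follow the link, here is one way a Length meta-function can be sketched: a recursion over the node chain with a specialization for the terminator. This is an illustration that operates directly on TypeNode chains and assumes empty as the terminator; the linked implementation, which is called on the Typelist wrapper, may differ.

```cpp
#include <cstddef>
#include <type_traits>

// Terminator and node types, following the Typenode definition above.
struct empty {};

template < typename T, typename U >
struct TypeNode
{
  typedef T head_t;
  typedef U tail_t;
};

// Length, sketched as a recursion over the node chain.
template < typename NodeT >
struct Length;

// Terminator: an empty chain has no entries.
template < >
struct Length< empty > : std::integral_constant< std::size_t, 0 >
{ };

// Each node contributes one entry plus the length of its tail.
template < typename T, typename U >
struct Length< TypeNode< T, U > >
  : std::integral_constant< std::size_t, 1 + Length< U >::value >
{ };

typedef TypeNode< char, TypeNode< short, TypeNode< long, empty > > > integral_nodes;
```

The specialization for empty plays the same role as the null-pointer test in the recursive PrintValues: it is the point where the recursion stops.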

front

Because of the nested structure used to build up the Typelist, accessing the type of the first node is essential before we can move on to more complex tasks. There are two fields in each Typenode: head_t, the current type, and tail_t, the remainder of the list. The C++ standard library uses the name front for accessing the first element in a container. Therefore, that is what we will name our meta-function.

The implementation of front is probably the simplest function that we will encounter. There are only two possibilities when we access the head_t type in a node: 1) it contains a type, or 2) it contains empty. Furthermore, the first node is always guaranteed to exist. To implement front, a general template definition is required, as well as a specialization to account for the empty type.

```cpp
/// Forward declaration of the primary template
template < typename ContainerT >
struct front;

/// General Implementation
template < TMP_ARRAY_32(typename T) >
struct front < Typelist < TMP_ARRAY_32(T) > >
{
  // The type of the first node in the Typelist.
  typedef T0 type;
};
```

Here is the specialization definition to handle the empty case:

```cpp
template < >
struct front < empty >
{ };
```

Why is there no type definition within the empty specialization? Because the code that we are developing is resolved entirely at compile time. A compiler error will be generated if front< empty >::type is accessed, because that member does not exist. If we had defined a type for the empty specialization, we would then need to write code to detect this case at run time. Detecting potential errors at compile time eliminates unnecessary run-time checks that would add extra processing. The final result is that we detect logic errors in our code, and in the use of our code, by making those logic errors invalid syntax.
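The sketch below shows the missing member from the outside without breaking the build. It uses a hypothetical 3-slot Typelist stand-in and a hypothetical has_type helper based on SFINAE, which reports whether T::type exists; naming front< empty >::type directly would still be a hard compile error.

```cpp
#include <type_traits>

// Hypothetical 3-slot stand-in for the 32-slot Typelist.
struct empty {};
template < typename T0 = empty, typename T1 = empty, typename T2 = empty >
struct Typelist {};

template < typename ContainerT >
struct front;

template < typename T0, typename T1, typename T2 >
struct front< Typelist< T0, T1, T2 > >
{
  typedef T0 type;
};

// Deliberately empty: naming front< empty >::type is a compile error.
template < >
struct front< empty >
{ };

// has_type (hypothetical helper): uses SFINAE to report whether T::type
// exists, so the missing member can be checked without a hard error.
template < typename T >
struct has_type
{
  typedef char yes;
  struct no { char c[2]; };

  template < typename U > static yes test(typename U::type *);
  template < typename U > static no  test(...);

  static const bool value = sizeof(test< T >(0)) == sizeof(yes);
};
```

For front< empty >, substituting U::type fails, so overload resolution silently falls back to the ellipsis overload and has_type reports false.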

back

Just as we were able to access the first element in the list, we can extract the type from the last node. This is also relatively simple since we have already implemented a method to count the length of the Typelist.

C++

/// This allows the last type of the list to be returned.
template < TMP_ARRAY_32(typename T) >
struct back < TypeList < TMP_ARRAY_32(T) > >
{
  typedef
    TypeList < TMP_ARRAY_32(T) >           container;

  // TypeAt is itself a meta-function; its result is the nested ::type.
  typedef typename
    TypeAt < length < container >::value - 1,
             container
           >::type                         type;
};

// This is the specialization for the empty node.
template < >
struct back < empty >
{ };
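The following sketch shows back composed from length and TypeAt. Because the meta-functions from the previous entry are not reproduced here, node_length, node_at, length and TypeAt below are hypothetical stand-ins written for this example, again over an assumed four-slot TypeList. Note the typename ...::type, which names the result of TypeAt rather than the TypeAt struct itself.

```cpp
#include <cstddef>
#include <type_traits>

// Simplified stand-ins assumed for this sketch (four slots instead of 32).
struct empty {};
template <typename H, typename T>
struct TypeNode { typedef H head_t; typedef T tail_t; };

template <typename T0, typename T1 = empty,
          typename T2 = empty, typename T3 = empty>
struct TypeList {
  typedef TypeNode<T0, TypeNode<T1, TypeNode<T2, TypeNode<T3, empty> > > > type;
};

// Hypothetical helpers: count nodes until the first empty head.
template <typename NodeT> struct node_length;
template <> struct node_length<empty>
    : std::integral_constant<std::size_t, 0> {};
template <typename T> struct node_length<TypeNode<empty, T> >
    : std::integral_constant<std::size_t, 0> {};
template <typename H, typename T> struct node_length<TypeNode<H, T> >
    : std::integral_constant<std::size_t, 1 + node_length<T>::value> {};

// Hypothetical helper: walk I nodes down the chain and take the head.
template <std::size_t I, typename NodeT> struct node_at;
template <typename H, typename T> struct node_at<0, TypeNode<H, T> > {
  typedef H type;
};
template <std::size_t I, typename H, typename T>
struct node_at<I, TypeNode<H, T> > : node_at<I - 1, T> {};

template <typename ContainerT>
struct length : node_length<typename ContainerT::type> {};

template <std::size_t I, typename ContainerT>
struct TypeAt : node_at<I, typename ContainerT::type> {};

// back: the type at index length - 1.
template <typename ContainerT>
struct back {
  typedef typename TypeAt<length<ContainerT>::value - 1, ContainerT>::type type;
};

template <> struct back<empty> {};
```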

pop_front

To navigate through the rest of the list, we will need to dismantle it node-by-node. The simplest way to accomplish this is to repeatedly remove the front node until we reach the desired node. The pop_front operation creates a new Typelist from the tail of the current Typelist node. Because the meta-functions are built from templates, this new Typelist will be completely compatible with all of the operations that we develop for this type. Here is the forward declaration of the meta-function:

C++

template < typename ContainerT >
struct pop_front;

Up to this point, the meta-functions that we have developed only extracted a single type, and pop_front differs slightly in structure from them. Remember that pop_front must produce a new Typelist as its result, so the instantiation of that new Typelist type must be defined within our meta-function definition. The primary implementation is actually a specialization of the template that we forward declared.

C++

// TypeList form: TPop is deduced and discarded; the
// remaining types form the new, shorter TypeList.
template < typename TPop, TMP_ARRAY_31(typename T) >
struct pop_front < TypeList < TPop, TMP_ARRAY_31(T) > >
{
  typedef TypeList < TMP_ARRAY_31(T) > type;
};

// TypeNode form: head (T1) and tail (T2) are deduced; keep the tail.
template < typename T1, typename T2 >
struct pop_front < TypeNode < T1, T2 > >
{
  typedef T2 type;
};

You may notice that there are two template parameters in this implementation, as opposed to the single type parameter in the forward declaration. I believe this is perplexing for two reasons:

1. Why is there a second parameter?

Our ultimate goal is to decompose a single node into its two parts, and give the caller access to the interesting part of the node: in this case, the tail, which is the remainder of the list.

2. Why create a function if we know both types?

The short answer is: Type Deduction.

We will not call this version of the meta-function directly. In fact, we most likely will not know the parameterized types to use in the declaration. This is an important concept to remember when programming generics: we want to focus on the constraints for the class of types to be used with our construct, rather than implementing our construct around a particular type. Designing for a particular type often leads to assumptions about the data that will be used, which in turn leads to a rigid implementation. I will be sure to revisit the topic of genericity in a future post. For now, suffice it to say that most of generic programming would not be possible if the compiler were not capable of type deduction.

All uses of pop_front will supply the single parameter of the forward declaration. While the compiler searches its set of template specializations for the best fit, it will deduce the two types from the Typelist that we provide. This specialization becomes a helper, and is selected indirectly by the compiler to create the final type. A direct instantiation would equate to the verbose syntax of the nested linked-list example from above. We use templates to put the compiler to work and generate all of this tedious code.

A specialization to handle the empty node is all that remains to complete the pop_front meta-function.

C++

template < TMP_ARRAY_31(typename T) >
struct pop_front < TypeList< empty, TMP_ARRAY_31(T) > >
{
  typedef empty type;
};
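Here is a compact sketch of all three pop_front pieces working together on a four-slot TypeList (a simplification assumed for this example). Each use passes a single argument, and the compiler deduces TPop, T1 and T2 from it, which is the type deduction described above.

```cpp
#include <type_traits>

// Simplified stand-ins assumed for this sketch (four slots instead of 32).
struct empty {};
template <typename H, typename T>
struct TypeNode { typedef H head_t; typedef T tail_t; };

template <typename T0, typename T1 = empty,
          typename T2 = empty, typename T3 = empty>
struct TypeList {
  typedef TypeNode<T0, TypeNode<T1, TypeNode<T2, TypeNode<T3, empty> > > > type;
};

template <typename ContainerT>
struct pop_front;

// TypeList form: drop TPop; the last slot of the result defaults to empty.
template <typename TPop, typename T1, typename T2, typename T3>
struct pop_front<TypeList<TPop, T1, T2, T3> > {
  typedef TypeList<T1, T2, T3> type;
};

// TypeNode form: deduce head and tail, keep the tail.
template <typename T1, typename T2>
struct pop_front<TypeNode<T1, T2> > {
  typedef T2 type;
};

// A list whose first slot is already empty pops to empty.
// (More specialized than the TypeList form, so it wins when both match.)
template <typename T1, typename T2, typename T3>
struct pop_front<TypeList<empty, T1, T2, T3> > {
  typedef empty type;
};

static_assert(std::is_same<pop_front<TypeList<char, short, int> >::type,
                           TypeList<short, int> >::value,
              "pop_front removes the first type");
```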

push_front

One final operation that I would like to demonstrate is push_front. This will allow us to programmatically build a Typelist in our code. This operation prepends a new type to the front of an existing list. Here is the forward declaration of the meta-function, in the form the caller will use:

C++

// forward declaration
template < typename ContainerT,
           typename T >
struct push_front;

The primary implementation of this template also contains one more type parameter than we might expect. The extra parameter gives the compiler a mechanism to deduce the head and tail of the existing node, so the existing Typelist sequence is kept intact, and the new type T that we specify is placed in a new node at the front of the final list.

C++

template < typename T1, typename T2, typename T >
struct push_front < TypeNode < T1, T2 >, T >
{
  typedef TypeNode < T, TypeNode < T1, T2 > > type;
};

// The syntactic sugar definition of this operation.
// Every type shifts one slot to the right, so the last
// type, T31, is dropped from a full 32-entry list.
template < TMP_ARRAY_32(typename T), typename T >
struct push_front < TypeList < TMP_ARRAY_32(T) >, T >
{
  typedef TypeList < T, TMP_ARRAY_31(T) > type;
};

Finally, we must provide a specialization that contains the empty terminator.

C++

template < typename T >
struct push_front < empty, T >
{
  typedef TypeNode < T, empty > type;
};
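A sketch of push_front on the same simplified four-slot TypeList (an assumption for this example) makes the slot shifting visible: the TypeList form moves every type one position to the right, so a full list loses its last type, while the TypeNode form simply wraps the existing chain in a new node.

```cpp
#include <type_traits>

// Simplified stand-ins assumed for this sketch (four slots instead of 32).
struct empty {};
template <typename H, typename T>
struct TypeNode { typedef H head_t; typedef T tail_t; };

template <typename T0, typename T1 = empty,
          typename T2 = empty, typename T3 = empty>
struct TypeList {
  typedef TypeNode<T0, TypeNode<T1, TypeNode<T2, TypeNode<T3, empty> > > > type;
};

template <typename ContainerT, typename T>
struct push_front;

// TypeNode form: wrap the existing node in a new node whose head is T.
template <typename T1, typename T2, typename T>
struct push_front<TypeNode<T1, T2>, T> {
  typedef TypeNode<T, TypeNode<T1, T2> > type;
};

// TypeList form: shift every type right; T3 falls off the end.
template <typename T0, typename T1, typename T2, typename T3, typename T>
struct push_front<TypeList<T0, T1, T2, T3>, T> {
  typedef TypeList<T, T0, T1, T2> type;
};

// Pushing onto the empty terminator starts a one-node list.
template <typename T>
struct push_front<empty, T> {
  typedef TypeNode<T, empty> type;
};
```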

Summary

The Typelist will be the basis of my implementation for Alchemy. In this entry I demonstrated in more detail how a Typelist is constructed, and the design rationale for implementing generic programming constructs. I showed that the Typelist itself is not that different from a traditional linked list that you have most likely worked with at some point in your career. In truth, these operations barely scratch the surface of what is possible when operating on the Typelist. You will find even more Typelist operations in Andrei Alexandrescu's book, Modern C++ Design, such as rotating the elements of the list and pruning the list to remove all of the duplicate types.

We are not done with our study of Typelists. In my next Typelist entry, we will move beyond the abstract and academic into the practical. I will explain new operations that I will need to implement Alchemy, and I will demonstrate how they can be applied to accomplish something useful.

Preprocessor Code Generation

I really do not like MACROs in C and C++, at least the way they have been traditionally used starting with C. Many of these uses are antiquated because C++ provides better features. The primary uses are inline function calls and constant declarations. Because of the possible side-effects of MACRO expansion, and all of the different ways a programmer can call a function, it is very difficult to write a function-like MACRO correctly for all scenarios. And when a problem does occur, you cannot be certain what is being generated and compiled unless you inspect the output from the preprocessor to determine if it is what you had intended.

However, there is still one use of MACROs that I think makes the preprocessor too valuable to remove altogether: code generation in definitions. By that, I mean hiding cumbersome boilerplate definitions that cannot easily be replicated without resorting to manual cut-and-paste editing, which is notorious for introducing bugs. I want to discuss some of the features that cannot be matched without the preprocessor, and set some guidelines that will help keep problems caused by preprocessor misuse to a minimum.

Beyond Function MACROs and Constants

There are two operators that are unique to the preprocessor, and they allow MACROs to accomplish much more than implementing inline functions. The first operator converts the input parameter to a string, and the second operator allows tokens to be pasted together to create a new symbol or declaration based on the input.

String Conversion Operator: #

Decades before the #-tag became a cultural phenomenon, this operator was available in the C preprocessor. It is used with MACROs that take arguments. When # precedes a parameter in the MACRO definition, the argument passed to the MACRO is converted to a string literal; that is, it is enclosed in quotation marks. Any whitespace before and after the argument is ignored, and each run of whitespace inside it becomes a single space. Because the compiler automatically concatenates adjacent string literals, the result can be combined directly with other strings.

Example

#define PRINT_VALUE( p ) \
  std::cout << # p << " = " << p << std::endl

// usage:
int index = 20;
PRINT_VALUE(index);

// output:
// index = 20

As the example above illustrates, the string conversion operator lets us insert the names of the symbols we are programming with into output messages. A single argument provides both the name of the parameter and its value in the output message.
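The next sketch checks the behavior described above. STRINGIZE, NAME_AND_VALUE and offset_label are hypothetical names; the MACRO builds a std::string instead of printing so the result can be compared, and std::to_string assumes C++11.

```cpp
#include <cassert>
#include <string>

// #p turns the spelling of the argument into a string literal.
#define STRINGIZE(p) #p

// Hypothetical helper: returns the text instead of printing it.
#define NAME_AND_VALUE(p) (std::string(#p) + " = " + std::to_string(p))

// Adjacent string literals are joined by the compiler: "offset" "2".
inline std::string offset_label() { return "offset" STRINGIZE(2); }
```

Leading and trailing whitespace in the argument is dropped and interior runs collapse to one space, so `STRINGIZE(  a   +  b  )` yields `"a + b"`.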

The C++ Way

If all we want is a name, C++ offers a partial alternative, provided run-time type information is enabled. Include the typeinfo header file, then apply the typeid operator, which yields a reference to a std::type_info object. Its member function type_info::name() returns a human-readable string; however, that string names the type of the expression, not the symbol, and its exact text is implementation-defined.

C++

#include <iostream>
#include <typeinfo>
// ...

int index = 20;
std::cout << typeid(index).name() << " = " << index;

// output: the name of the type, not of the variable
// MSVC:        int = 20
// GCC, Clang:  i = 20
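This sketch contrasts the two approaches. SYMBOL_NAME and type_name_of are hypothetical helpers: the first uses the # operator and yields the variable's name, while the second uses typeid and yields whatever implementation-defined name the compiler chose for the variable's type.

```cpp
#include <cassert>
#include <string>
#include <typeinfo>

// Stringizing captures the spelling of the argument: the variable's name.
#define SYMBOL_NAME(p) std::string(#p)

// typeid(expr).name() describes the type of the expression; the text is
// implementation-defined (for example "int" on MSVC, "i" on GCC and Clang).
template <typename T>
std::string type_name_of(const T& value) {
  return typeid(value).name();
}
```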

Wait, There's More

Printing the names of symbols is only the beginning. Anything that you place in the argument will be converted to a string, which makes this well-known MACRO sequence possible:

#define STR2_(x) #x
#define STR1_(x) STR2_(x)
#define TODO(str) \
  __pragma(message (__FILE__ "(" STR1_(__LINE__) "): TODO: " str))

// usage: TODO("Complete task.")

What does this do? This implementation is specific to Microsoft compilers; it invokes the message pragma (#pragma message) through the __pragma keyword to print a message to the compiler output. Notice that it is not our message that we convert to a string, but the line number where the message is declared.

That is accomplished with this statement: STR1_(__LINE__). You may be wondering why there are two STR_ MACRO definitions, with one nested inside of the other. That is because __LINE__ is itself a preprocessor MACRO, and the # operator stringizes its argument before any MACROs in that argument are expanded. If we simply made the call STR2_(__LINE__), the literal output "__LINE__" would be created rather than the intended line number. The extra level of indirection forces the preprocessor to expand __LINE__ before converting the result to a string. See, MACROs can be tricky to get correct.
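A quick way to observe the difference between the two STR_ MACROs is to stringize __LINE__ through each of them. The helper functions direct_line and expanded_line are hypothetical, written only for this comparison.

```cpp
#include <cassert>
#include <string>

#define STR2_(x) #x        // stringizes its argument exactly as written
#define STR1_(x) STR2_(x)  // expands x first, then forwards the result

// STR2_ sees the token __LINE__ itself.
inline std::string direct_line() { return STR2_(__LINE__); }

// STR1_ expands __LINE__ to a number before STR2_ stringizes it.
inline std::string expanded_line() { return STR1_(__LINE__); }
```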

Finally, the MACRO prepends the text "TODO:" to the supplied message before it is printed. This allows the Task List in Visual Studio to recognize that line as a point of code that still requires attention. The final result is a clickable message in the compiler output window that takes the user directly to the file and line where the TODO message was left.

file.cpp(101): TODO: Complete task.

Since I first came across this MACRO for inserting messages on Microsoft compilers, I have adapted it to work with GCC as well. For all other compilers, the MACRO currently does nothing.

// Add Compiler Specific Adjustments
#ifdef _MSC_VER
# define DO_PRAGMA(x) __pragma(x)
#elif defined(__GNUC__)
  // _Pragma requires a string literal, so stringize the argument.
# define DO_PRAGMA(x) _Pragma(#x)
#else
# define DO_PRAGMA(x)
#endif

// Adjusted Message MACRO
#define MESSAGE(str) \
  DO_PRAGMA(message(__FILE__ "(" STR1_(__LINE__) "): NOTICE: " str))

I find the # operator very useful for creating terse statements that improve readability and maintainability. As with any solution, use it in moderation.

Token Pasting Operator: ##

When you want to programmatically create repetitive symbols in code, the token-pasting operator, ##, is what you need. The ## operator joins the token before it and the token after it into a single token; when either one is a MACRO parameter, the argument is substituted first, and any whitespace between the two parts is removed. As you might expect, ## cannot be the first or last token in a MACRO definition. Here is a simple example to demonstrate:

Example

#define PRINT_OFFSET( n ) \
  std::cout << "offset" #n << " = " << offset ## n << std::endl

// usage:
int offset2 = 20;
int offsetNext = 30;
PRINT_OFFSET(2);
PRINT_OFFSET(Next);

// output:
// offset2 = 20
// offsetNext = 30

This is only a demonstration of how the token-paste operator works. I will demonstrate more valuable uses in the next section.
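As a slightly more practical sketch of the same idea, the hypothetical DECLARE_PROPERTY MACRO below uses ## to paste a field name onto fixed prefixes and a suffix, generating a data member and a pair of accessors from a single line. PacketHeader is likewise a made-up example type.

```cpp
#include <cassert>

// Hypothetical MACRO: one line generates name_, get_name() and set_name().
#define DECLARE_PROPERTY(type, name)               \
  type get_##name() const { return name##_; }      \
  void set_##name(type value) { name##_ = value; } \
  type name##_;

struct PacketHeader {
  DECLARE_PROPERTY(int, port)    // port_, get_port(), set_port()
  DECLARE_PROPERTY(int, length)  // length_, get_length(), set_length()
};
```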

Generating Code

My philosophy when writing code is, "The best code is the code that is not written." In other words, strive for simple, elegant solutions. At some point, some code must exist in order to have a program, and generated code is a close second in my preferences. We can use the preprocessor to great effect towards achieving this goal. However, once again, code generation with the preprocessor is not a panacea, because it is generally restricted to boilerplate definitions.

In practice, your software will be easier to build when there are fewer tools involved in the build process. I have written and used code generation programs, and when one is the right tool, it works fantastically. However, a task must exceed a certain threshold of effort before it becomes worth the trouble of developing and maintaining a new tool and adding complexity to your build system. For tasks below that threshold, code generation with the preprocessor is a great solution.

Before I continue, I would like to mention that Boost contains a preprocessor library[^], which is capable of some amazing things, such as identifying the number of arguments in a MACRO expression, iterating over the list of arguments, counting, and many others. Think of it as meta-programming with the preprocessor. Many of the examples below are simplified, less general solutions to the same sort of capabilities provided by Boost Preprocessor.

Indexing with Recursion

Working with the preprocessor is yet another example of functional programming hidden inside of C++. We cannot use state or mutable data (variables). Therefore, many preprocessor solutions involve recursion, or chains of MACROs that emulate it, especially when a task must be repeated a number of times.

When I was exploring the Typelist construct a few days ago, I came across this cumbersome declaration:

C++

// Typelist primary template definition.
template < typename T0, typename T1 = empty,
           typename T2 = empty, typename T3 = empty,
           typename T4 = empty, typename T5 = empty,
           typename T6 = empty, typename T7 = empty,
           typename T8 = empty, typename T9 = empty >
struct Typelist
{
  typedef Typenode< T0,
          Typenode< T1,
          Typenode< T2,
          Typenode< T3,
          Typenode< T4,
          Typenode< T5,
          Typenode< T6,
          Typenode< T7,
          Typenode< T8,
          Typenode< T9, empty > > > > > > > > > > type;
};

This will be tedious to maintain and error prone, because we will need to update the type definitions anywhere the Typelist is used. For brevity, I only included 10 entries in the demonstration implementation; for Alchemy, I planned on supporting a minimum of 32 entries. It would be nice if we could take advantage of the preprocessor to generate some of this repetitive code for us, especially since these are hidden definitions in a library. The user will have no need to explore these header files to determine how to interact with these constructs, which makes me even more confident in a solution that depends on the preprocessor.

The first challenge that we will need to overcome is how to build something that repeats a piece of processing until a given end-point is reached. This sounds like a recursive solution. However, a MACRO cannot invoke itself, so to create this effect we will need a utility file with a predeclared chain of MACROs. Boost actually uses MACROs to generate these MACROs. For what I am after, I can get by with a small set of MACROs built specifically to suit my purpose.

C++

#define ALCHEMY_ARRAY_1(T) T##0
#define ALCHEMY_ARRAY_2(T) ALCHEMY_ARRAY_1(T), T##1
#define ALCHEMY_ARRAY_3(T) ALCHEMY_ARRAY_2(T), T##2
// ...
#define ALCHEMY_ARRAY_32(T) ALCHEMY_ARRAY_31(T), T##31

// Repeats a specified number of times, 32 is the current maximum.
// Note: The value N passed in must be a literal number.
#define ALCHEMY_ARRAY_N(N,T) ALCHEMY_ARRAY_##N(T)

This will allow us to create something like this:

C++

ALCHEMY_ARRAY_N(4, typename T)

// Creates this, newlines added for clarity
typename T0,
typename T1,
typename T2,
typename T3

This will eliminate most of the repetitive template definitions that I would otherwise have had to write by hand. How does the chain of MACROs work? Each MACRO expands to a call to the MACRO for the previous index, followed by a comma and the input T token-pasted with the current index. In other words, the expansion of the current MACRO contains another MACRO invocation, which the preprocessor finds and expands when it rescans the result. The process continues until the terminating case, ALCHEMY_ARRAY_1, is reached.
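The chain can be exercised with a shortened, four-level copy of the MACROs. The function sum_of_four is hypothetical; it uses ALCHEMY_ARRAY_N twice, once to declare the parameters a0 through a3 and once to list them in an array initializer. The range-based for loop assumes C++11.

```cpp
#include <cassert>

// A four-level copy of the chain (Alchemy's goes to 32).
#define ALCHEMY_ARRAY_1(T) T##0
#define ALCHEMY_ARRAY_2(T) ALCHEMY_ARRAY_1(T), T##1
#define ALCHEMY_ARRAY_3(T) ALCHEMY_ARRAY_2(T), T##2
#define ALCHEMY_ARRAY_4(T) ALCHEMY_ARRAY_3(T), T##3
#define ALCHEMY_ARRAY_N(N, T) ALCHEMY_ARRAY_##N(T)

// Parameter list expands to: int a0, int a1, int a2, int a3
inline int sum_of_four(ALCHEMY_ARRAY_N(4, int a)) {
  // Initializer expands to: { a0, a1, a2, a3 }
  const int values[] = { ALCHEMY_ARRAY_N(4, a) };
  int total = 0;
  for (int v : values) total += v;
  return total;
}
```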

This first version helps with two of the declarations; however, the declaration with the nested TypeNodes cannot be solved with the MACRO in this form, because the right-angle brackets are nested at the end of the definition. Pasting the index at the end of this expression would no longer be correct. Rather than trying to be clever and creating a MACRO that would allow me to replace text inside of a definition, I will just create a second, simpler MACRO that repeats a specified token N times.

C++

#define ALCHEMY_REPEAT_1(T) T
#define ALCHEMY_REPEAT_2(T) ALCHEMY_REPEAT_1(T) T
#define ALCHEMY_REPEAT_3(T) ALCHEMY_REPEAT_2(T) T
// ...
#define ALCHEMY_REPEAT_32(T) ALCHEMY_REPEAT_31(T) T

// Repeats a specified number of times, 32 is the current max
// Note: The value N passed in must be a literal number.
#define ALCHEMY_REPEAT_N(N,T) ALCHEMY_REPEAT_##N(T)
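A four-level copy of ALCHEMY_REPEAT_N is enough to see the token repetition. count_three is a hypothetical function whose return expression is assembled entirely by the MACRO.

```cpp
#include <cassert>

#define ALCHEMY_REPEAT_1(T) T
#define ALCHEMY_REPEAT_2(T) ALCHEMY_REPEAT_1(T) T
#define ALCHEMY_REPEAT_3(T) ALCHEMY_REPEAT_2(T) T
#define ALCHEMY_REPEAT_4(T) ALCHEMY_REPEAT_3(T) T
#define ALCHEMY_REPEAT_N(N, T) ALCHEMY_REPEAT_##N(T)

// Expands to: return 0 + 1 + 1 + 1;
inline int count_three() { return 0 ALCHEMY_REPEAT_N(3, + 1); }
```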

With these two MACROs, we can now simplify the definition of a Typelist to the following:

// I have chosen to leave the template definition
// fully expanded for clarity.
// Using a MACRO here would also require solving the
// challenge of T0 not having a default value.
template < typename T0, typename T1 = empty,
           // ...
           typename T30 = empty, typename T31 = empty >
struct TypeList
{
#define DECLARE_TYPE_LIST \
  ALCHEMY_ARRAY_32(TypeNode< T ), empty ALCHEMY_REPEAT_32( > )

  typedef DECLARE_TYPE_LIST type;
};
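To convince yourself that the combined expansion produces correctly nested brackets, here is a three-slot sketch (the reduced size and the empty TypeNode body are assumptions for brevity), with the result compared against a hand-written chain. Note that with only the primary template, a shorter list still carries trailing nodes whose head is empty.

```cpp
#include <type_traits>

#define ALCHEMY_ARRAY_1(T) T##0
#define ALCHEMY_ARRAY_2(T) ALCHEMY_ARRAY_1(T), T##1
#define ALCHEMY_ARRAY_3(T) ALCHEMY_ARRAY_2(T), T##2
#define ALCHEMY_REPEAT_1(T) T
#define ALCHEMY_REPEAT_2(T) ALCHEMY_REPEAT_1(T) T
#define ALCHEMY_REPEAT_3(T) ALCHEMY_REPEAT_2(T) T

struct empty {};
template <typename H, typename T> struct TypeNode {};

template <typename T0, typename T1 = empty, typename T2 = empty>
struct TypeList {
  // Expands to: TypeNode< T0, TypeNode< T1, TypeNode< T2, empty > > >
  typedef ALCHEMY_ARRAY_3(TypeNode< T), empty ALCHEMY_REPEAT_3(>) type;
};
```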

If you remember, I mentioned that a specialization of the base template above must be defined for each possible number of types. We have already developed all of the helper MACROs we need to define those specializations.

#define tmp_ALCHEMY_TYPELIST_DEF(S) \
  template < ALCHEMY_ARRAY_##S(typename T) > \
  struct TypeList < ALCHEMY_ARRAY_##S(T) > \
  { \
    typedef ALCHEMY_ARRAY_##S(TypeNode < T ), empty \
            ALCHEMY_REPEAT_##S( > ) type; \
  }

// Define specializations of this array from 1 to 31 elements *****************
tmp_ALCHEMY_TYPELIST_DEF(1);
tmp_ALCHEMY_TYPELIST_DEF(2);
// ...
tmp_ALCHEMY_TYPELIST_DEF(31);

// Undefine the MACRO to prevent its further use.
#undef tmp_ALCHEMY_TYPELIST_DEF

All-in-all, this little bit of work replaces about 500 lines of definitions, and we will end up using these MACROs a few more times to implement the Typelist utility functions.
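Putting the pieces together on a three-slot TypeList (a size chosen only to keep the sketch short), the generated partial specializations make each shorter list end immediately after its last real type. The empty TypeNode body is an assumption for brevity.

```cpp
#include <type_traits>

#define ALCHEMY_ARRAY_1(T) T##0
#define ALCHEMY_ARRAY_2(T) ALCHEMY_ARRAY_1(T), T##1
#define ALCHEMY_ARRAY_3(T) ALCHEMY_ARRAY_2(T), T##2
#define ALCHEMY_REPEAT_1(T) T
#define ALCHEMY_REPEAT_2(T) ALCHEMY_REPEAT_1(T) T
#define ALCHEMY_REPEAT_3(T) ALCHEMY_REPEAT_2(T) T

struct empty {};
template <typename H, typename T> struct TypeNode {};

// Primary template: the full, three-element list.
template <typename T0, typename T1 = empty, typename T2 = empty>
struct TypeList {
  typedef ALCHEMY_ARRAY_3(TypeNode< T), empty ALCHEMY_REPEAT_3(>) type;
};

// One partial specialization per shorter length. The unspecified slots
// default to empty, and the generated chain stops after the last real type.
#define tmp_ALCHEMY_TYPELIST_DEF(S)                                      \
  template <ALCHEMY_ARRAY_##S(typename T)>                               \
  struct TypeList<ALCHEMY_ARRAY_##S(T)> {                                \
    typedef ALCHEMY_ARRAY_##S(TypeNode< T), empty ALCHEMY_REPEAT_##S(>) type; \
  }

tmp_ALCHEMY_TYPELIST_DEF(1);
tmp_ALCHEMY_TYPELIST_DEF(2);

#undef tmp_ALCHEMY_TYPELIST_DEF
```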

Guidelines

These are a few rules that I try to follow when working on a solution. MACROs in and of themselves are not bad. However, there are many unknown factors that can affect how a MACRO behaves, and the only way to know for sure what is happening is to compile the file and inspect the output from the preprocessor. If I ever need to resort to using a function-like MACRO, I try to hide its use within another function, and isolate that function as much as possible so that I can be sure it is correct. The list of guidelines below is neither a complete rule set nor absolute, but it should help you build more reliable and maintainable solutions when you need to resort to the power of the preprocessor.

Make them UGLY

I think the ALL_CAPS_WITH_UNDERSCORES convention is ugly. I also think it makes MACROs easy to identify in your code, and as you saw in my definitions, I don't mind creating very long names for MACROs. Hopefully their use remains hidden in implementation files.

Define constants_all_lowercase

This one comes to mind only because I have been bitten by it when porting code between platforms. Naming constants in capital letters is another convention that migrated from the original C days of using #define to declare constants. However, it can leave you vulnerable to extremely difficult-to-find compiler errors in the best case, or code that incorrectly compiles in the worst case.

What do I mean? Think about the result if a MACRO defined deep within a platform or library header file clashes with the name you have declared using the CAPITAL_SNAKE_CASE naming convention. The preprocessor would replace your constant's name with the MACRO's definition. In most cases I imagine this would result in a weird compiler error, such as one stating that a literal cannot be assigned to a literal. However, the substitution could also produce something that is valid for the compiler, but not logically correct. This is one of the potential downsides of the preprocessor.
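A small sketch of the clash, where BUFFER_SIZE plays the role of a MACRO that arrived from some platform header (both BUFFER_SIZE and buffer_size are hypothetical names):

```cpp
// Pretend this MACRO arrived from a platform header.
#define BUFFER_SIZE 64

// Had we declared our own constant in CAPITAL_SNAKE_CASE, the preprocessor
// would rewrite it before the compiler ever saw it:
//   const int BUFFER_SIZE = 128;   becomes   const int 64 = 128;   (error)
// A lower-case constant is untouched by the MACRO.
const int buffer_size = 128;
```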

Restrict MACROs to Declarations

If you limit the contexts where your MACROs are used, you will have much more control over the conditions under which they are expanded, and can ensure that they work properly. Now that C++ has inline functions, there is no longer any reason to use a MACRO as a function. However, using a MACRO to help generate a complex definition is much less of a risk, and improves readability and maintainability tremendously.
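The classic illustration of why inline functions are safer is a MACRO that evaluates its argument twice. SQUARE, square, calls and next_value are hypothetical names for this sketch; next_value has a visible side effect, so the difference can be counted.

```cpp
#include <cassert>

// A function-like MACRO: the argument text is pasted in twice.
#define SQUARE(x) ((x) * (x))

// The inline function evaluates its argument exactly once.
inline int square(int x) { return x * x; }

// Hypothetical helper with a side effect we can observe.
int calls = 0;
inline int next_value() { return ++calls; }
```

With `SQUARE(next_value())`, next_value runs twice and the result is 1 * 2; with `square(next_value())`, it runs once and the result is 1 * 1.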

One of my favorite examples of this use of MACROs is in Microsoft's ATL and WTL libraries, where they define Windows event dispatch functions. For those of you who are not familiar with Windows programming in C/C++, each window has a registered WindowProc. This is the dispatch procedure that inspects events and calls the correct handler based on the event type. Here is an example of the WindowProc generated by the default project wizard:

C++

LRESULT CALLBACK WndProc(
  HWND hWnd,
  UINT message,
  WPARAM wParam,
  LPARAM lParam
  )
{
  int wmId, wmEvent;
  PAINTSTRUCT ps;
  HDC hdc;

  switch (message)
  {
  case WM_COMMAND:
    wmId = LOWORD(wParam);
    wmEvent = HIWORD(wParam);
    // Parse the menu selections:
    switch (wmId)
    {
    case IDM_ABOUT:
      DialogBox(hInst, MAKEINTRESOURCE(IDD_ABOUTBOX), hWnd, About);
      break;
    case IDM_EXIT:
      DestroyWindow(hWnd);
      break;
    default:
      return DefWindowProc(hWnd, message, wParam, lParam);
    }
    break;
  case WM_PAINT:
    hdc = BeginPaint(hWnd, &ps);
    // TODO: Add any drawing code here...
    EndPaint(hWnd, &ps);
    break;
  case WM_DESTROY:
    PostQuitMessage(0);
    break;
  default:
    return DefWindowProc(hWnd, message, wParam, lParam);
  }
  return 0;
}

You can already see the potential disaster looming there: the code for each event is implemented in-line within the switch statement. I have run across WindowProc functions with up to 10,000 lines of code in them. Ridiculous! Here is what the equivalent message map looks like in ATL:

C++

BEGIN_MSG_MAP(CNewWindow)
  COMMAND_ID_HANDLER(IDM_ABOUT, OnAbout)
  COMMAND_ID_HANDLER(IDM_EXIT, OnExit)
  MESSAGE_HANDLER(WM_PAINT, OnPaint)
  MESSAGE_HANDLER(WM_DESTROY, OnDestroy)
END_MSG_MAP()

This table declaration basically generates a WindowProc procedure, and maps the event handlers to a specific function call. Not only is this easier to read, it also encourages a maintainable implementation by hiding the direct access to the switch statement, and promoting the use of function handlers. I have successfully used this model many times to provide the required registration or definition of values. The result is an easy to read table that is compact and can easily serve as a point of reference for what events are registered, or definitions configured into your system.
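The same table-driven shape can be sketched in portable C++. The MACROs below (BEGIN_EVENT_MAP, EVENT_HANDLER, END_EVENT_MAP) and the Window class are hypothetical and far simpler than ATL's; they only expand into a single Dispatch member function containing a switch, with each table entry becoming one case.

```cpp
#include <cassert>

// Hypothetical table MACROs: together they expand into one member function.
#define BEGIN_EVENT_MAP()   \
  int Dispatch(int event) { \
    switch (event) {
#define EVENT_HANDLER(id, handler) \
      case id: return handler();
#define END_EVENT_MAP()     \
      default: return -1;   \
    }                       \
  }

enum { EVT_ABOUT = 1, EVT_EXIT = 2 };

class Window {
 public:
  BEGIN_EVENT_MAP()
    EVENT_HANDLER(EVT_ABOUT, OnAbout)
    EVENT_HANDLER(EVT_EXIT, OnExit)
  END_EVENT_MAP()

 private:
  int OnAbout() { return 10; }
  int OnExit() { return 20; }
};
```

The table stays readable, while each handler lives in its own small member function instead of inside one ever-growing switch statement.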

Summary

I have demonstrated (ever notice that the word demonstrate starts with demon?) how to use some of the lesser-used preprocessor features. I then went on to simplify some cumbersome code declarations with these techniques, so now I have a fully declared Typelist type. Coming up, you will see how we take advantage of these utility MACROs to greatly simplify declarations in the core of our library as we develop it. In the end, the user of the library will be unaware that these MACROs even exist. While any type of MACRO can be risky to use, depending on what is included before your definition, I feel that this type of scenario gives the developer enough control over the definitions to minimize the potential for unexpected problems.

The link to download Alchemy is found below.

- The Typelist declaration can be found in the file: /Alchemy/Meta/type_list.h.

- The utility MACROs are located in: /Alchemy/Meta/meta_macros.h.

For this entry, I would like to introduce the type_traits header file. This file contains utility templates that greatly simplify work when writing template-based libraries, especially libraries that employ template meta-programming. The header first appeared in C++ TR1 and became part of the standard library in C++11. It provides tools to identify types and their qualifying properties, and even to peel off those properties one-by-one programmatically at compile-time. There are also transformation meta-functions that provide basic meta-programming operations, such as a compile-time conditional.

The definitions in type_traits will save us a lot of time implementing Alchemy. As I introduce some of the tools that are available in the header, I will also demonstrate how these operations can be implemented. This will help you understand how to construct variations of the same type of solution when applying it in a different context. As an example of this, I will create a construct that behaves similarly to the ternary operator, but is evaluated at compile-time.

Types and Values

C++ template meta-programs are essentially functional programs. Functional programs compute with mathematical expressions: you will receive the same result each time you call a function with a specific set of parameters. Because expressions are declarative, state and mutable data are generally avoided. Meta-programs are structured to require the compiler to calculate the results of the expressions as part of compilation. The two constructs that we have to work with are integer constants, to hold calculation results, and types, which we can use like function calls.

Define a Value

Meta-program constants are declared and initialized statically, so these values are limited to integer types. In C++03, we cannot use string literals or floating-point values, because static constants of those types cannot be initialized in place within the class or struct definition. Sometimes you will see these values declared with enumerations instead. I believe this is to prevent meta-programming objects from using space: it is possible for users to take the address of a static constant, and if this occurs, storage must be allocated for the constant, even if the object is never instantiated. It is not possible to take the address of an enumerator, so no memory needs to be allocated when an enumeration is used.
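The two styles side by side, in a minimal sketch with hypothetical names. Taking the address of the static constant compiles only because of the out-of-class definition, which is exactly the storage that the enumeration style avoids.

```cpp
#include <cassert>

template <int N>
struct SquareEnum {
  enum { value = N * N };  // an enumerator has no address and needs no storage
};

template <int N>
struct SquareConst {
  static const int value = N * N;  // in-class initializer: integral types only in C++03
};

// Needed only if the member's address is taken (it is odr-used);
// the constant then occupies storage.
template <int N>
const int SquareConst<N>::value;
```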

Since I started using type_traits, I don't worry about it so much, because I use the integral_constant template to define values. By convention, the value is given the name value. This is important in generic programming, because it allows objects to remain loosely coupled and independent of the design of other objects. The example below demonstrates how integral_constant is typically used. Please note that all of these constructs live in the std:: namespace, which I will be omitting in these examples.

C++

// Implement the pow function to calculate
// exponent multiplication in a meta-function.
template < size_t BaseT, size_t ExpT >
struct Pow
  : integral_constant< size_t,
                       BaseT * Pow< BaseT, ExpT-1 >::value >
{ };

// Specialization: Terminator for exp 1.
template < size_t BaseT >
struct Pow< BaseT, 1 >
  : integral_constant< size_t, BaseT >
{ };

// Specialization: Special case for exp 0.
template < size_t BaseT >
struct Pow< BaseT, 0 >
  : integral_constant< size_t, 1 >
{ };

Call (Instantiate) our meta-function:

C++

int x = Pow< 3,3 >::value; // 27
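Here is the Pow meta-function again, with the std:: qualifications written out so it compiles on its own, and the result checked at compile time with static_assert (C++11). The comment traces how the recursion unwinds for Pow<3, 3>.

```cpp
#include <cstddef>
#include <type_traits>

using std::integral_constant;

// Pow<3, 3> = 3 * Pow<3, 2> = 3 * 3 * Pow<3, 1> = 3 * 3 * 3 = 27
template <std::size_t BaseT, std::size_t ExpT>
struct Pow
    : integral_constant<std::size_t, BaseT * Pow<BaseT, ExpT - 1>::value> {};

// Terminator for exponent 1.
template <std::size_t BaseT>
struct Pow<BaseT, 1> : integral_constant<std::size_t, BaseT> {};

// Special case for exponent 0.
template <std::size_t BaseT>
struct Pow<BaseT, 0> : integral_constant<std::size_t, 1> {};

static_assert(Pow<3, 3>::value == 27, "3^3");
```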

integral_constant

All that is required to implement integral_constant is the definition of a static constant in a template struct. Structs are generally used because their members are public by default. Here is the most basic implementation:

C++

template < typename T,
           T ValT >
struct integral_constant
{
  static const T value = ValT;
};

Yes, it's that simple. The implementation in the C++ Standard Library goes a little further for convenience. Just as with the STL containers, typedefs are created for the value_type and the type of the object. There is also a conversion operator to implicitly convert the object to its value_type. Here is a fuller implementation:

C++

template < typename T,
           T ValT >
struct integral_constant
{
  typedef T value_type;
  typedef integral_constant< T, ValT > type;

  static const T value = ValT;

  operator value_type() const
  {
    return value;
  }
};

Two typedefs have been created to reduce the amount of code required to perform Boolean logic with meta-programs:

C++

typedef integral_constant< bool, true >  true_type;
typedef integral_constant< bool, false > false_type;
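true_type and false_type pay off when writing your own traits. In this sketch, is_pointer_like is a hypothetical trait: by deriving from std::true_type or std::false_type, each specialization inherits value, value_type, type and the conversion operator without writing any of them.

```cpp
#include <type_traits>

// Default: not a pointer.
template <typename T>
struct is_pointer_like : std::false_type {};

// Specialization: any T* is a pointer.
template <typename T>
struct is_pointer_like<T*> : std::true_type {};
```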

Compile-time Decisions

With meta-programming there is only one way to define a variable, but there are many ways to create decision-making logic. Let's start with one that is very useful for making decisions.

is_same

This template allows you to test if two types are the same type.

C++

template < typename T,
           typename U >
struct is_same
  : false_type
{ };

// Specialization: When types are the same
template < typename T >
struct is_same< T,T >
  : true_type
{ };
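A few checks on an is_same clone, placed in a hypothetical sketch namespace so it does not collide with std::is_same, show where the specialization does and does not apply:

```cpp
#include <type_traits>

namespace sketch {

template <typename T, typename U>
struct is_same : std::false_type {};

// Chosen whenever both arguments deduce to the same T.
template <typename T>
struct is_same<T, T> : std::true_type {};

}  // namespace sketch
```

A typedef does not create a new type, so an alias still matches the specialization, while cv-qualifiers do make two types distinct.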

The compiler always looks for the best fit: when multiple templates would be suitable, the most specialized match is selected, if one exists. In this case, when both types are exactly the same, the best match is our specialization that indicates true.

conditional

It's time to define a branching construct to enable us to make choices based on type. The conditional template is the moral equivalent of the if-statement for imperative C++.

C++

// The default implementation represents false
template < bool Predicate,
           typename T,
           typename F >
struct conditional
{
  typedef F type;
};

// Specialization: Handles true
template < typename T,
           typename F >
struct conditional < true, T, F >
{
  typedef T type;
};
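As a sketch of conditional in use, the hypothetical uint_for meta-function nests two conditionals to pick the narrowest unsigned type that can hold a compile-time value, the kind of choice that comes up when laying out packet fields. The sketch namespace is also hypothetical, and the code assumes N fits in 32 bits.

```cpp
#include <cstdint>
#include <type_traits>

namespace sketch {

template <bool Predicate, typename T, typename F>
struct conditional { typedef F type; };

template <typename T, typename F>
struct conditional<true, T, F> { typedef T type; };

}  // namespace sketch

// Assumes N <= 0xFFFFFFFF; larger values still map to uint32_t.
template <unsigned long N>
struct uint_for {
  typedef typename sketch::conditional<(N <= 0xFFu), std::uint8_t,
      typename sketch::conditional<(N <= 0xFFFFu), std::uint16_t,
                                   std::uint32_t>::type>::type type;
};
```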

Applying These Techniques

I have just demonstrated how three of the constructs defined in the type_traits header could be implemented. The techniques used to implement these constructs are used repeatedly to create solutions for ever more complex problems. I would like to demonstrate a construct that I use quite often in my own code, which is both built upon the templates I just discussed, and implemented with the same techniques used to implement those templates.

value_if

While the conditional template defines a type based on the result of a Boolean expression, I commonly want to conditionally define a value based on the result of a Boolean expression. Therefore, I implemented the value_if template. It makes use of the integral_constant template and an implementation similar to the one used to create the conditional template. This gives me another tool to simplify the complex parametric expressions that I often encounter.

C++

template < bool Predicate,
           typename T,
           T TrueValue,
           T FalseValue >
struct value_if
  : integral_constant< T, FalseValue >
{ };

// Specialization: True Case
template < typename T,
           T TrueValue,
           T FalseValue >
struct value_if < true, T, TrueValue, FalseValue >
  : integral_constant< T, TrueValue >
{ };
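Here is a self-contained sketch of value_if in use. It assumes a minimal integral_constant in place of the series' own definition, and the buffer_size template is a hypothetical example of choosing a constant from a property of a type.

```cpp
// Minimal stand-in for std::integral_constant (an assumption for this sketch).
template < typename T, T v >
struct integral_constant
{
  static const T value = v;
  typedef T value_type;
};

// Primary template: the predicate is false, so expose FalseValue.
template < bool Predicate, typename T, T TrueValue, T FalseValue >
struct value_if : integral_constant< T, FalseValue > { };

// Specialization: the predicate is true, so expose TrueValue.
template < typename T, T TrueValue, T FalseValue >
struct value_if< true, T, TrueValue, FalseValue > : integral_constant< T, TrueValue > { };

// Hypothetical use: a larger buffer for types wider than 4 bytes.
template < typename T >
struct buffer_size : value_if< (sizeof(T) > 4), int, 64, 32 > { };

static_assert( value_if< true, int, 1, 2 >::value == 1, "true case" );
static_assert( buffer_size< char >::value == 32, "char is narrow" );
```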

Summary

I just introduced you to the type_traits header in C++. If you have not yet discovered this header, you should check it out. It can be very useful, even if you are not creating template meta-programs. Here is a reference link to the header from cppreference.com[^].

With the basic constructs that I introduced in this entry, I should now be able to create more sophisticated ways to interact with the Typelist[^] that I previously discussed for Alchemy. With the simple techniques used above, we should be able to implement template expressions that will query a Typelist type by index, get the size of the type at an index, and similarly calculate the offset in bytes from the beginning of the Typelist. The offset will be one of the most important pieces of information to know for the Alchemy implementation.

Previously I discussed the tuple data type. The tuple is a general-purpose container that can be composed of any sequence of types. Both the types and the values can be accessed by index, or by traversing the tuple much like a linked list.

The Typelist is a category of types that is very similar to the tuple, except that no data is stored within it. Whether the final implementation is constructed as a linked list, an array, or even a tree, these constructs are all typically referred to as Typelists. I believe the Typelist construct is credited to Andrei Alexandrescu. He first published an article in the C/C++ Users Journal, but a more thorough description can be found in his book, Modern C++ Design.

Note:

For an implementation of a TypeList with Modern C++, check out this entry:

C++: Template Meta-Programming 2.0[^]

There is No Spoon

What happens when you instantiate a Typelist?

Nothing.

Besides, that's not the point of creating a Typelist container. The overall purpose is to collect, manage, and traverse type information programmatically. Type lists originated with generic programming methods in C++ and are implemented with templates. They are extremely simple, yet they can solve a number of problems that would otherwise require enormous amounts of hand-written code. I will use the Typelist in my solution for Alchemy.

Let's start by defining a Typelist:

C++

template < typename T, typename U >
struct Typelist
{
  typedef T head_t;
  typedef U tail_t;
};

There are no data members or functions defined in a Typelist, only type definitions. These two elements are all we need because, as we saw with the tuple, more complex sets of types can be created by chaining Typelists together through the tail. Here is an example of a more complex Typelist definition:

C++

// Typelist of char, short, int, and long int: commonly 8, 16, 32, and
// 64 bit integers, although the exact sizes are platform-dependent.
typedef
  Typelist< char,
  Typelist< short,
  Typelist< int, long int > > > integral_t;

When I first saw this, I thought two things:

- How can this be useful? There is no data.

- The syntax is extremely verbose; I wouldn't want to use that.

Remember in The Matrix when Neo goes to meet The Oracle, and he is waiting with the other "potentials"?! While he waits, he watches a young prodigy bending a spoon with his mind. He urges Neo to try, and offers some advice:

Spoon boy: Do not try and bend the spoon. That's impossible. Instead... only try to realize the truth.

Neo: What truth?

Spoon boy: There is no spoon.

Neo: There is no spoon?

Spoon boy: Then you'll see, that it is not the spoon that bends, it is only yourself.

Hmmm, maybe that's a bit too abstract, but that's pretty much what it's like working with a Typelist. There is no data. Now take your red pill and let's move on.
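To make "there is no data" concrete, the sketch below repeats the two-parameter Typelist and the nested integral_t definition, then walks the chain purely at compile time using std::is_same and std::is_empty from the standard <type_traits> header. Note that with this nested form the last type is simply the tail of the innermost node; there is no terminator yet.

```cpp
#include <type_traits>

template < typename T, typename U >
struct Typelist
{
  typedef T head_t;
  typedef U tail_t;
};

typedef
  Typelist< char,
  Typelist< short,
  Typelist< int, long int > > > integral_t;

// Navigating the chain is pure type lookup; nothing executes at runtime.
static_assert( std::is_same< integral_t::head_t, char >::value,                     "1st" );
static_assert( std::is_same< integral_t::tail_t::head_t, short >::value,            "2nd" );
static_assert( std::is_same< integral_t::tail_t::tail_t::head_t, int >::value,      "3rd" );
static_assert( std::is_same< integral_t::tail_t::tail_t::tail_t, long int >::value, "4th" );

// The struct has no non-static data members at all.
static_assert( std::is_empty< integral_t >::value, "there is no spoon" );
```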

Simplify the Definition

Let's make the definition simpler to work with. Working with a single Typelist definition of variable length seems much simpler to me than having to enter this repeated nesting of Typelist structures. Something like this:

C++

typedef Typelist< char, short, int, long int > integral_t;

// Or format like a C struct definition:
typedef Typelist
<
  char,
  short,
  int,
  long int
> integral_t;

This could be usable, and it is easily achieved with either template specialization or variadic templates. The specialization-based solution is much more verbose; however, it is also more portable, because variadic templates require a C++11 compiler. I have also seen comments about compiler limits on the number of arguments supported by variadic templates, but I have not hit such limits myself. This is something I will probably explore in the future. For now, I will start the implementation with a template specialization solution.
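For comparison, a variadic version might look like the sketch below. It is a hypothetical C++11 alternative, not the approach this entry takes, and it borrows the Typenode node type and empty terminator introduced further on in this entry (both are defined in the block so it compiles on its own). Recursion replaces the hand-written specializations: each step peels off one type and chains the rest.

```cpp
#include <type_traits>

struct empty {};   // terminator

template < typename T, typename U >
struct Typenode
{
  typedef T head_t;
  typedef U tail_t;
};

// Hypothetical variadic Typelist.
template < typename... Ts >
struct Typelist;

// Base case: no types left, so the chain ends with the terminator.
template < >
struct Typelist< >
{
  typedef empty type;
};

// Recursive case: the first type becomes a node whose tail is the rest of the list.
template < typename T0, typename... Rest >
struct Typelist< T0, Rest... >
{
  typedef Typenode< T0, typename Typelist< Rest... >::type > type;
};

typedef Typelist< char, short, int, long int > integral_t;

static_assert( std::is_same< integral_t::type,
    Typenode< char, Typenode< short, Typenode< int, Typenode< long int, empty > > > > >::value,
    "same chain as the hand-written nesting" );
```

There is no fixed maximum here; the practical limit is the compiler's template recursion and argument limits.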

Specialization

For this type of solution, we must select a maximum number of elements that we want or expect to be used. This is one of the drawbacks of specialization compared to the variadic approach. The forward declaration of the full Typelist would look like this:

C++

// Pseudo-code: Tn stands for the chosen maximum number of elements.
template < typename T0, typename T1, ..., typename Tn >
struct Typelist;

We cannot go much further until we resolve the next missing concept.

The Terminator

Similar to a linked list, we will need some sort of indicator to mark the end of the Typelist. This terminator will also be used in the specialized definitions of Typelist to give us a consistent way to define the large number of definitions that will be created. In meta-programming, we do not have variables, only types and constants. Since a Typelist is constructed entirely from types, we should use a type to define the terminator:

C++

struct empty {};

With a terminator defined, here is what the outer definition of a 10-node Typelist looks like:

C++

template < typename T0, typename T1 = empty,
           typename T2 = empty, typename T3 = empty,
           typename T4 = empty, typename T5 = empty,
           typename T6 = empty, typename T7 = empty,
           typename T8 = empty, typename T9 = empty >
struct Typelist
{
  // TBD ...
};

Here is the outer definition for a specialization with two items:

C++

template < typename T0, typename T1 >
struct Typelist< T0, T1 >
{
  // TBD ...
};
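This specialization works because of the default arguments: Typelist< T0, T1 > is shorthand for Typelist< T0, T1, empty, ..., empty >, so any list whose remaining slots are all empty matches it. The reduced sketch below (four slots instead of ten, with a hypothetical `selected` marker that exists only to reveal which definition the compiler picked) makes this visible. A one-item list also matches the two-item pattern with T1 = empty, so a dedicated one-item specialization is needed; the compiler prefers it because it is more specialized.

```cpp
struct empty {};

// Primary template, reduced to four slots for the sketch.
template < typename T0, typename T1 = empty,
           typename T2 = empty, typename T3 = empty >
struct Typelist
{
  enum { selected = 4 };   // hypothetical marker: primary template chosen
};

// One-item specialization: matches Typelist< T0, empty, empty, empty >.
template < typename T0 >
struct Typelist< T0 >
{
  enum { selected = 1 };
};

// Two-item specialization: matches Typelist< T0, T1, empty, empty >.
template < typename T0, typename T1 >
struct Typelist< T0, T1 >
{
  enum { selected = 2 };
};

static_assert( Typelist< char >::selected == 1,                   "one item" );
static_assert( Typelist< char, short >::selected == 2,            "defaults fill T2, T3" );
static_assert( Typelist< char, short, int, long >::selected == 4, "all slots used" );
```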

Implementation

Believe it or not, we have already seen the implementation that goes inside the template definitions shown above. The only change is that we rename what we previously called a Typelist to Typenode. Otherwise, the implementation becomes the typedef that we created, with the empty terminator as the tail of the last Typenode. By convention, we name the typedef type; for reference, constant values are named value in template meta-programming. This consistency provides a generic way for separate objects that were not designed together to still inter-operate.

C++

template < typename T, typename U >
struct Typenode
{
  typedef T head_t;
  typedef U tail_t;
};

// Typelist primary template definition.
template < typename T0, typename T1 = empty,
           typename T2 = empty, typename T3 = empty,
           typename T4 = empty, typename T5 = empty,
           typename T6 = empty, typename T7 = empty,
           typename T8 = empty, typename T9 = empty >
struct Typelist
{
  // Typenode requires two arguments; the last node's tail is the terminator.
  typedef Typenode< T0,
          Typenode< T1,
          Typenode< T2,
          Typenode< T3,
          Typenode< T4,
          Typenode< T5,
          Typenode< T6,
          Typenode< T7,
          Typenode< T8,
          Typenode< T9, empty > > > > > > > > > > type;
};

Here is the implementation for the two node specialization:

C++

template < typename T0, typename T1 >
struct Typelist < T0, T1 >
{
  typedef Typenode< T0,
          Typenode< T1, empty > > type;
};
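Putting the pieces together, here is a compilable sketch of the specialization approach, reduced to a maximum of four elements so it stays short (the entry itself uses ten). The primary template handles a full list, and one specialization per shorter length terminates its chain with empty.

```cpp
#include <type_traits>

struct empty {};

template < typename T, typename U >
struct Typenode
{
  typedef T head_t;
  typedef U tail_t;
};

// Primary template: used when all four slots are supplied.
template < typename T0, typename T1 = empty,
           typename T2 = empty, typename T3 = empty >
struct Typelist
{
  typedef Typenode< T0, Typenode< T1, Typenode< T2, Typenode< T3, empty > > > > type;
};

template < typename T0 >
struct Typelist< T0 >
{
  typedef Typenode< T0, empty > type;
};

template < typename T0, typename T1 >
struct Typelist< T0, T1 >
{
  typedef Typenode< T0, Typenode< T1, empty > > type;
};

template < typename T0, typename T1, typename T2 >
struct Typelist< T0, T1, T2 >
{
  typedef Typenode< T0, Typenode< T1, Typenode< T2, empty > > > type;
};

typedef Typelist< char, short, int, long int > integral_t;

static_assert( std::is_same< Typelist< char, short >::type,
                             Typenode< char, Typenode< short, empty > > >::value,
               "two-node chain" );
static_assert( std::is_same< integral_t::type::tail_t::tail_t::head_t, int >::value,
               "third slot holds int" );
```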

Simple? Yes. Verbose? Yes. Is it worth it? I believe it will be. Besides, since this definition will be hidden away from users, I would feel comfortable defining some code-generating MACROs to take care of the verbose and repetitive internal definitions. However, I will demonstrate that another time.

Using a Typelist

We have simplified the interface the user needs to define a Typelist; however, interacting with one at this point is still cumbersome. For example, consider the integral_t Typelist defined above. If we wanted to create a variable using the type in the 3rd slot (index 2, int), this syntax would be required:

C++

integral_t::type::tail_t::tail_t::head_t third_type = 0;

This is the kind of code that grown-up C programmers describe in the stories they tell little C++ programmers, to scare them away from some of the most effective aspects of the language. The next few entries will focus on extracting data out of the Typelist in a clean and simple way. However, let's tackle one problem right now.
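As a preview only, the chained ::tail_t::head_t access can be wrapped in an index-based query. TypeAt below is a hypothetical name and a minimal recursive sketch, not the implementation developed in later entries. It operates directly on a Typenode chain such as integral_t::type, which is spelled out by hand here so the block compiles on its own. An out-of-range index fails to compile, because empty has no tail_t.

```cpp
#include <cstddef>
#include <type_traits>

struct empty {};

template < typename T, typename U >
struct Typenode
{
  typedef T head_t;
  typedef U tail_t;
};

// Hypothetical helper: the type stored at position Index of a Typenode chain.
template < typename NodeT, std::size_t Index >
struct TypeAt
{
  // Step one node down the chain and look for Index - 1 there.
  typedef typename TypeAt< typename NodeT::tail_t, Index - 1 >::type type;
};

// Index 0 is the head of the current node.
template < typename NodeT >
struct TypeAt< NodeT, 0 >
{
  typedef typename NodeT::head_t type;
};

// The chain that Typelist< char, short, int, long int >::type produces.
typedef Typenode< char, Typenode< short, Typenode< int, Typenode< long int, empty > > > > chain_t;

static_assert( std::is_same< TypeAt< chain_t, 2 >::type, int >::value, "index 2 is int" );
```

With such a helper, the declaration above could read `TypeAt< integral_t::type, 2 >::type third_type = 0;`.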

How Many Elements?

Length is a common piece of information desired from containers, and type containers are no different. How can we determine the number of elements in the integral_t we have been using in this entry? We will write a meta-function. Unfortunately, I have not yet demonstrated many meta-function development techniques, so we will develop a Length meta-function with a specialization matching each specialization of the Typelist definition itself. The alternative would be a meta-function that actively traverses the Typenodes, searching for the terminator. We will revisit this problem later with a more elegant solution.

The solution will be very simple and very verbose, but still, very simple. Since we must define a Length implementation for each Typelist specialization that we created, we know in each one how many parameter types were defined. We can take advantage of that information to create our simple solution:

C++

template < typename ContainerT >
struct Length;

template <>
struct Length < empty >
{
  enum { value = 0 };
};

template < typename T0 >
struct Length < Typelist < T0 > >
{
  enum { value = 1 };
};

template < typename T0, typename T1 >
struct Length < Typelist < T0, T1 > >
{
  enum { value = 2 };
};

// ... one specialization for each Typelist specialization, up to the maximum.

Again, with some preprocessor MACROS, generating all of the variations could be greatly simplified. For now, I would like to get enough infrastructure in place to determine how effective this entire approach will be at solving the goals of Alchemy. Here is a sample that demonstrates querying the number of elements in the integral_t type.

C++

// Calls the meta-function Length
// to get the number of items in integral_t.
size_t count = Length< integral_t >::value;

// count now equals 4
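For comparison, the traversal-based alternative mentioned earlier could be sketched as below. NodeLength is a hypothetical name; instead of one specialization per Typelist size, it recurses through the tail_t of each Typenode until it reaches the empty terminator, so it works for a chain of any length. The chain is written out by hand to keep the block self-contained.

```cpp
struct empty {};

template < typename T, typename U >
struct Typenode
{
  typedef T head_t;
  typedef U tail_t;
};

// Hypothetical meta-function: counts nodes by walking tail_t.
template < typename NodeT >
struct NodeLength;

// Reaching the terminator ends the recursion.
template < >
struct NodeLength< empty >
{
  enum { value = 0 };
};

// Each Typenode contributes one, plus the length of everything after it.
template < typename H, typename T >
struct NodeLength< Typenode< H, T > >
{
  enum { value = 1 + NodeLength< T >::value };
};

// The chain that Typelist< char, short, int, long int >::type produces.
typedef Typenode< char, Typenode< short, Typenode< int, Typenode< long int, empty > > > > chain_t;

static_assert( NodeLength< chain_t >::value == 4, "four nodes" );
```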

Summary